Strategies for Increasing Recruitment to Randomised Controlled Trials: Systematic Review

Background:

Recruitment of participants into randomised controlled trials (RCTs) is critical for successful trial conduct. Although there have been two previous systematic reviews on related topics, the results (which identified specific interventions) were inconclusive and not generalizable. The aim of our study was to evaluate the relative effectiveness of recruitment strategies for participation in RCTs.

Methods and Findings:

We conducted a systematic review, reported according to the PRISMA guideline for systematic reviews, of studies that compared methods of recruiting individual participants into an actual or mock RCT. We searched MEDLINE, Embase, The Cochrane Library, and reference lists of relevant studies. From over 16,000 titles or abstracts reviewed, 396 papers were retrieved and 37 studies were included, in which 18,812 of at least 59,354 people approached agreed to participate in a clinical RCT. Recruitment strategies were broadly divided into four groups: novel trial designs (eight studies), recruiter differences (eight studies), incentives (two studies), and provision of trial information (19 studies). Strategies that increased people's awareness of the health problem being studied (e.g., an interactive computer program [relative risk (RR) 1.48, 95% confidence interval (CI) 1.00–2.18], attendance at an education session [RR 1.14, 95% CI 1.01–1.28], addition of a health questionnaire [RR 1.37, 95% CI 1.14–1.66], or a video about the health condition [RR 1.75, 95% CI 1.11–2.74]), as well as monetary incentives (RR 1.36, 95% CI 1.13–1.64 to RR 1.53, 95% CI 1.28–1.84), improved recruitment. Increasing patients' understanding of the trial process, recruiter differences, and various methods of randomisation and consent design did not show a difference in recruitment. Consent rates were also higher for nonblinded trial designs, but differential loss to follow-up between groups may jeopardise the study findings. The study's main limitation was the need to modify the search strategy in subsequent search updates because of changes in MEDLINE definitions. The abstracts of previous versions of this systematic review were published in 2002 and 2007.

Conclusion:

Recruitment strategies that focus on increasing potential participants' awareness of the health problem being studied, its potential impact on their health, and their engagement in the learning process appeared to increase recruitment to clinical studies. Further trials of recruitment strategies that target engaging participants to increase their awareness of the health problems being studied and the potential impact on their health may confirm this hypothesis.

Please see later in the article for the Editors' Summary.

Published in: PLoS Med 7(11): e1000368. doi:10.1371/journal.pmed.1000368

Category: Research Article

Introduction

The randomised controlled trial (RCT) provides the most reliable evidence for evaluating the effects of health care interventions [1],[2], but the successful conduct of clinical RCTs is often hindered by recruitment difficulties [3]. Inadequate recruitment reduces the power of studies to detect significant intervention effects [4], causes delays (which may affect the generalizability of the study if standard care changes over time), increases costs, and can lead to failure to complete trials [5],[6]. With increasing reliance on clinical RCT findings for clinical and regulatory decision making, the success of future RCTs depends on employing effective and efficient methods for recruiting study participants [7].

Historically, participants have been recruited to RCTs by “trial and error” [8]: investigators used a number of different strategies and modified them according to their observed effects on recruitment. More recently, novel strategies have been developed to facilitate adequate and timely recruitment [3],[4]. Two previous systematic reviews of strategies to enhance recruitment to research [9],[10] identified specific individual interventions, but these interventions could not be combined to offer useful general advice on recruitment to clinical RCTs.

The aim of this study was to identify effective recruitment strategies for clinical RCTs by systematically reviewing randomised studies that compare consent rates, or other measures of consent, between two or more methods of approaching potential RCT participants (we term these studies recruitment trials).

Methods

A protocol for this systematic review had not been registered before the review commenced, although the abstracts of previous versions of this systematic review were published in 2002 (International Clinical Trials Symposium: improving health care in the new millennium) [11] and 2007 (3rd International Clinical Trials Symposium) [12] (Text S1).

Selection Criteria

All randomised and quasi-randomised studies that compared two or more methods of recruiting study participants to a real phase III RCT or a mock RCT (in which no actual trial occurred) were included. We excluded nonrandomised studies of recruitment strategies, as well as studies that assessed recruitment to observational studies, questionnaires, health promotion activities, or other health care interventions. Where more than one publication of the same study existed, the publication with the most complete data was included.

Literature Search

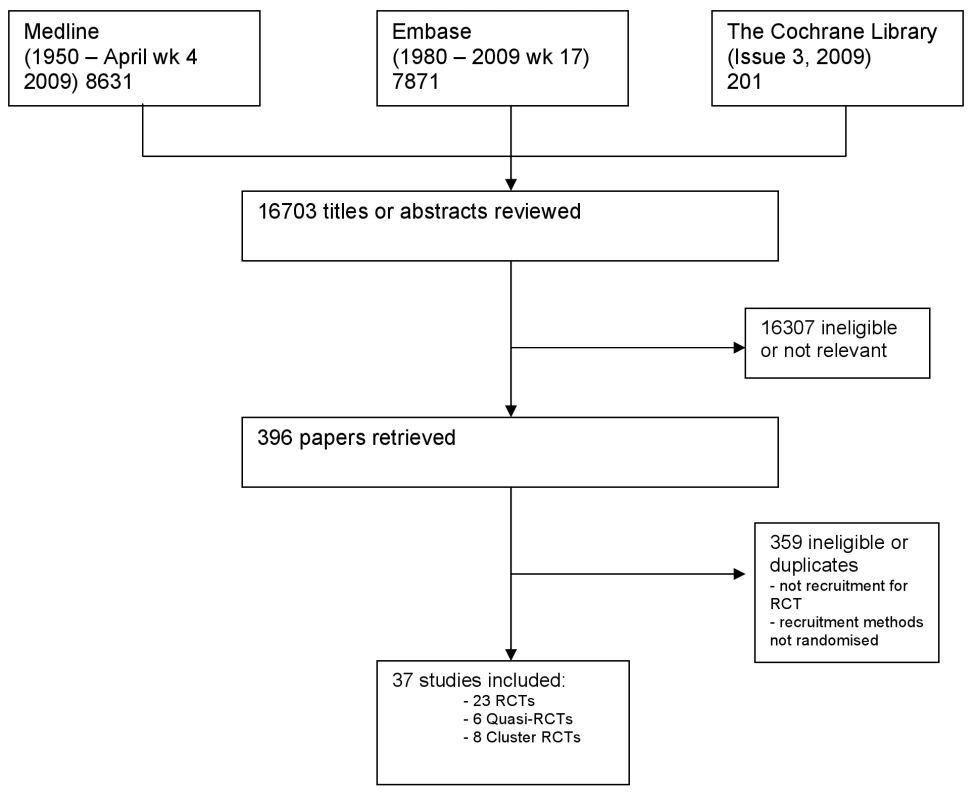

Studies were identified from MEDLINE (1950 to April, week 4, 2009), Embase (1980 to week 17, 2009), and The Cochrane Library (issue 3, 2009) (Figure 1). MEDLINE and Embase were searched using text words and subject headings (with unlimited truncation) for “recruitment,” “enrolment,” and “accrual,” combined with “random” and “trials” and with “participate” or “consent” or “recruit.” The Cochrane Library was searched using “recruitment” combined with “random and trial” and “consent or accrual.” The search strategy changed slightly over time because of changes in MEDLINE MeSH heading definitions. Reference lists of relevant studies were also searched, and non-English language papers were translated. Two of three reviewers (PHYC, AT, or SH) independently screened each title and abstract for eligibility, retrieved full-text articles of all potentially relevant studies, and extracted data from the retrieved papers using a form designed by the authors. Disagreements were resolved by discussion with another reviewer (JCC).

Data Extraction

Data on the recruitment methods evaluated, the population setting, and the trial design were extracted without blinding to authorship. The same applied to risk-of-bias items such as randomisation, allocation concealment, blinding of outcome assessors, loss to follow-up, and intention-to-treat analysis. Each of these elements was assessed separately using the method developed by the Cochrane Collaboration [13].

Outcomes Assessed

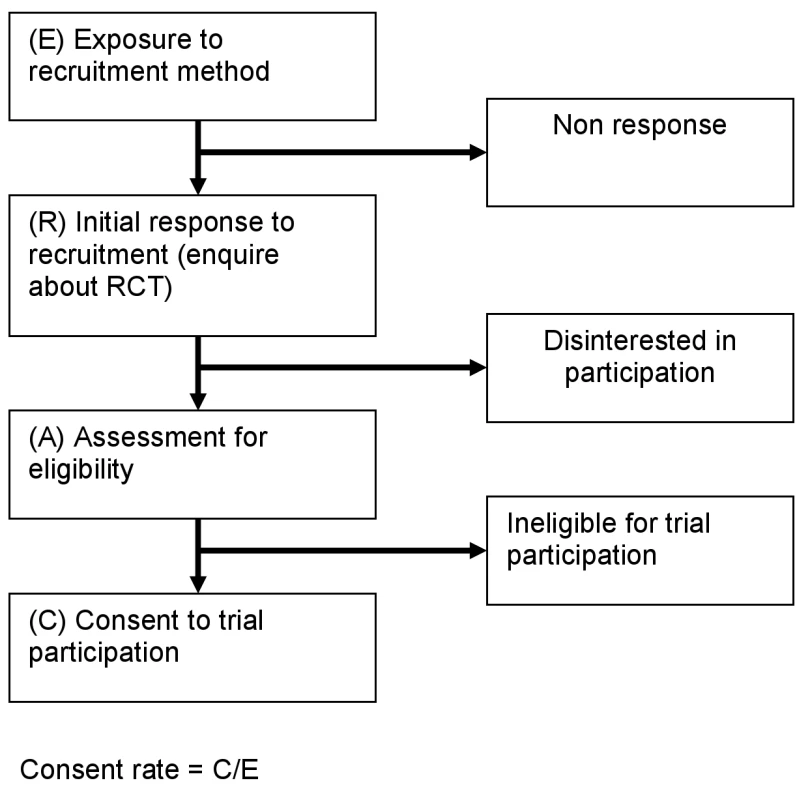

The primary outcome of interest was the consent rate for each recruitment strategy. Because studies defined consent rates differently, where possible we recalculated the consent rate for each recruitment method. We did this by dividing the number of people exposed to that method who actually consented to participate in the clinical study by the total number of potential participants exposed to that method (see Figure 2). For studies with insufficient information to calculate consent rates, we reported other measures of consent success described in the study. For mock trials, the outcome measure was willingness to consent (i.e., potential participants stating that they would be willing to participate in the trial or to be contacted about participation in future trials). Consent rates and other outcome measures were compared using intention-to-treat analysis.

Fig. 2. Consent rate for RCTs.
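The consent rate defined in Figure 2 can be expressed as a small helper. This is a minimal sketch rather than the authors' procedure. The class name `RecruitmentArm` and the counts in the usage line are hypothetical. The denominator is everyone exposed to a strategy, whether or not they went on to consent, which is what makes this an intention-to-treat measure.

```python
from dataclasses import dataclass


@dataclass
class RecruitmentArm:
    """One recruitment strategy in a recruitment trial (hypothetical helper)."""
    name: str
    exposed: int    # all potential participants approached with this strategy
    consented: int  # those who actually consented to the clinical study

    def consent_rate(self) -> float:
        """Consented / all exposed: every exposed person stays in the denominator."""
        if self.exposed <= 0:
            raise ValueError("an arm needs at least one exposed participant")
        if not 0 <= self.consented <= self.exposed:
            raise ValueError("consented must lie between 0 and exposed")
        return self.consented / self.exposed


# Hypothetical counts, for illustration only.
standard = RecruitmentArm("standard letter", exposed=200, consented=50)
print(f"{standard.name}: {standard.consent_rate():.1%}")  # standard letter: 25.0%
```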

Statistical Methods

Where possible, we used relative risks (RRs) and their 95% confidence intervals (CIs) to describe the effects of different strategies in individual recruitment trials. Where a single recruitment trial used more than two strategies, we divided the numerator and denominator of the standard (control) recruitment strategy by the number of intervention strategies for each comparison, so that control participants would not be overrepresented [13].
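The review does not state which interval method it used. The sketch below therefore assumes the usual log-scale (Wald) interval for a ratio of two independent proportions. The function names and the three-arm counts are hypothetical. `split_control` implements the shared-control rule just described: the control arm's numerator and denominator are both divided by the number of intervention arms. The control proportion is therefore unchanged, but its participants are not counted more than once across comparisons.

```python
import math


def relative_risk(a: float, n1: float, c: float, n2: float, z: float = 1.96):
    """Relative risk of consent, intervention vs control, with a log-scale CI.

    a / n1: consented / exposed under the intervention strategy
    c / n2: consented / exposed under the standard (control) strategy
    Counts may be fractional after a shared control arm has been split.
    """
    if a <= 0 or c <= 0:
        raise ValueError("zero consent counts need a continuity correction first")
    rr = (a / n1) / (c / n2)
    # Standard error of log(RR) for two independent binomial proportions.
    se = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n2)
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper


def split_control(consented: int, exposed: int, n_interventions: int):
    """Divide a shared control arm's numerator and denominator by the
    number of intervention strategies it is compared against."""
    return consented / n_interventions, exposed / n_interventions


# Hypothetical three-arm recruitment trial: one control, two interventions.
c, n2 = split_control(consented=60, exposed=300, n_interventions=2)
for label, a, n1 in [("intervention A", 90, 300), ("intervention B", 75, 300)]:
    rr, lo, hi = relative_risk(a, n1, c, n2)
    print(f"{label}: RR {rr:.2f}, 95% CI {lo:.2f}-{hi:.2f}")
```

Splitting widens each interval relative to reusing the full control arm twice. That is the intended effect: the shared control group contributes its information only once in total.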

Results

Literature Search

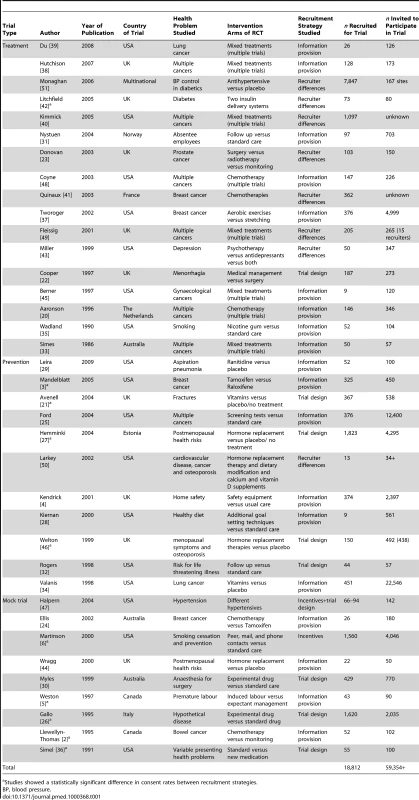

From 16,703 unique titles and abstracts, 396 articles were retrieved and 37 eligible publications were identified (Figure 1). Collectively, these studies assessed recruitment outcomes in at least 59,354 people who were approached for clinical study participation, of whom 18,812 consented to participate (Table 1). (Not all studies reported the number of potential participants who were approached.)

Tab. 1. Included studies.

Studies showed a statistically significant difference in consent rates between recruitment strategies.

Quality of Included Studies

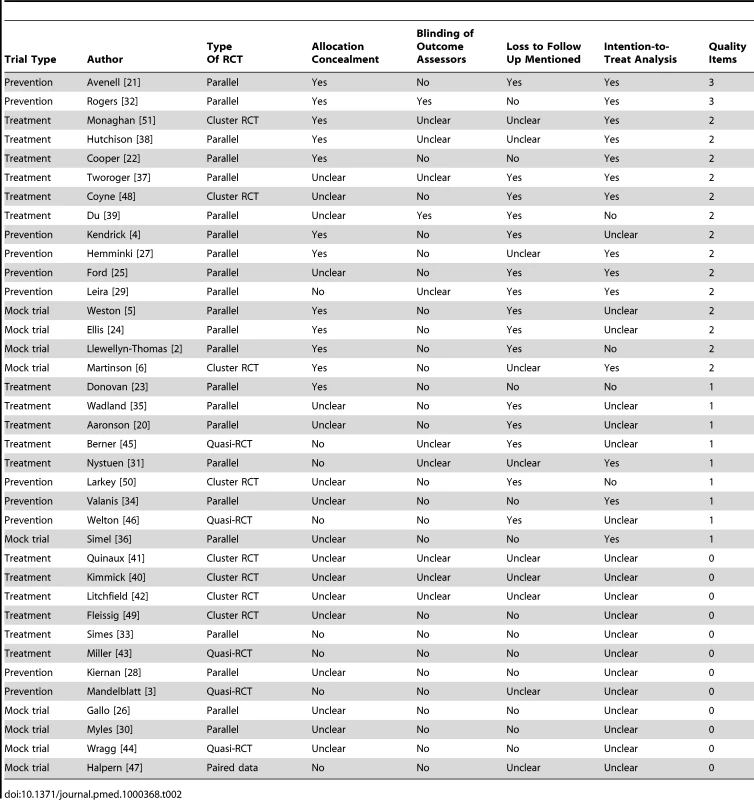

There were 23 parallel-group RCTs, six quasi-RCTs (including one using paired data), and eight cluster RCTs. Of the 37 included recruitment trials, only 12 (32%) had clear allocation concealment and two (5%) specified blinding of outcome assessors. No study blinded participants, as this would have been difficult to achieve. Fifteen trials (41%) recorded loss to follow-up, and 14 (38%) used intention-to-treat analysis (see Table 2).

Tab. 2. Quality of included studies.
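The quality percentages are simple proportions of the 37 included recruitment trials, so recomputing them from the counts is a quick consistency check. This is a minimal sketch; the variable and function names are ours.

```python
TOTAL_STUDIES = 37

# Counts reported for each risk-of-bias item (Table 2).
quality_counts = {
    "clear allocation concealment": 12,
    "blinded outcome assessors": 2,
    "loss to follow-up reported": 15,
    "intention-to-treat analysis": 14,
}


def percent(count: int, total: int = TOTAL_STUDIES) -> int:
    """Whole-number percentage, rounded to the nearest integer."""
    return round(100 * count / total)


for item, count in quality_counts.items():
    print(f"{item}: {count}/{TOTAL_STUDIES} = {percent(count)}%")
# Prints 32%, 5%, 41%, and 38% for the four items, in that order.
```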

Characteristics of Included Studies

Of the 37 included studies, 17 assessed treatment comparisons, 11 were prevention studies, and nine were mock studies (in which participants declared their willingness to participate in a trial but no actual trial occurred).

The 66 different recruitment strategies were broadly categorised into four groups: novel trial designs (nine studies), recruiter differences (eight studies), incentives (two studies), and provision of trial information (19 studies). One study assessed both novel trial design and incentives [14]. We defined standard recruitment as follows: the investigator invites the potential participant to enrol in the study, treatment is randomly allocated after consent is given, and routine treatment is provided if consent is not given.
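Because one study [14] appears in two groups, the group sizes sum to one more than the number of included studies. Counting that study only under incentives gives the eight novel-design studies quoted in the abstract. A small arithmetic check, with variable names of our own:

```python
# Number of included studies in each strategy group, as reported above.
group_sizes = {
    "novel trial designs": 9,
    "recruiter differences": 8,
    "incentives": 2,
    "provision of trial information": 19,
}
# Halpern [14] is counted in both novel trial designs and incentives.
studies_in_two_groups = 1

assignments = sum(group_sizes.values())
unique_studies = assignments - studies_in_two_groups
print(assignments, unique_studies)  # 38 37
```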

Types of Recruitment Strategies Studied

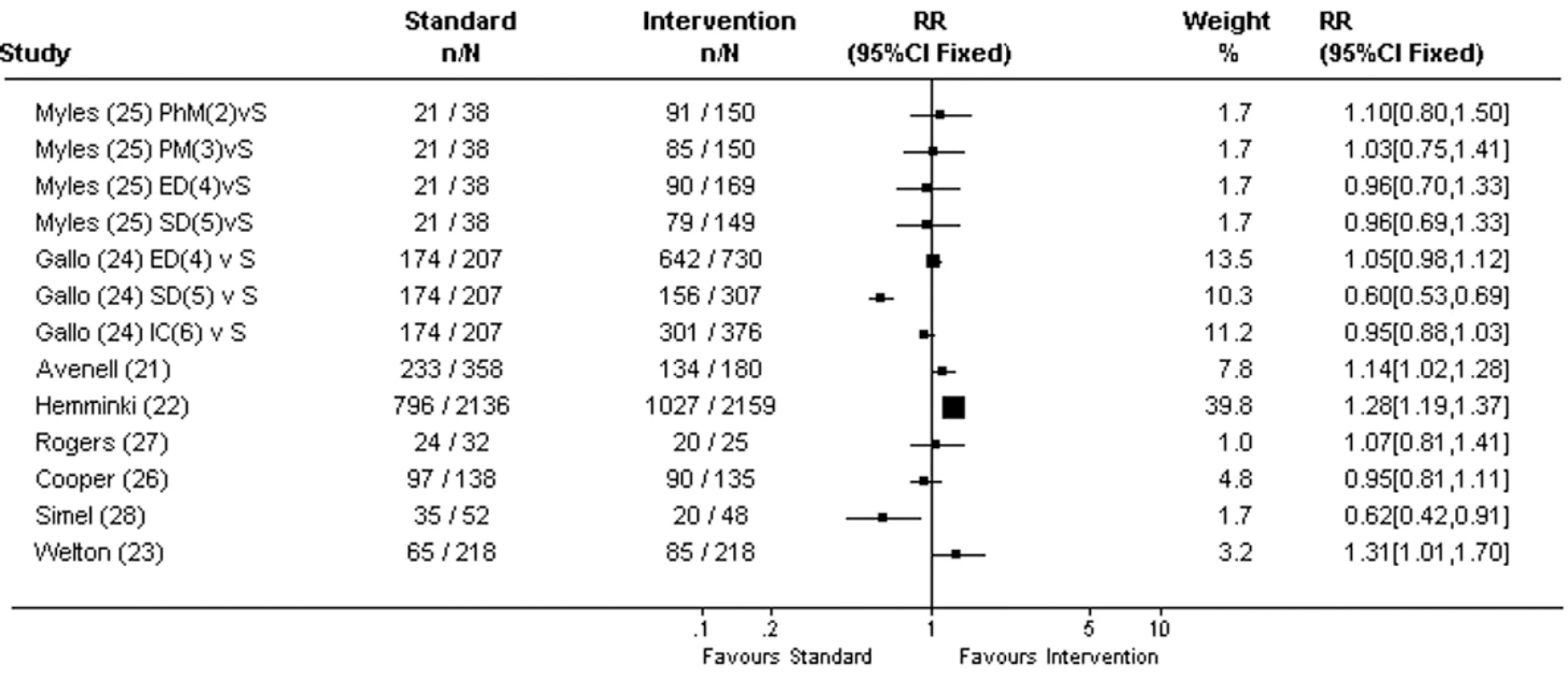

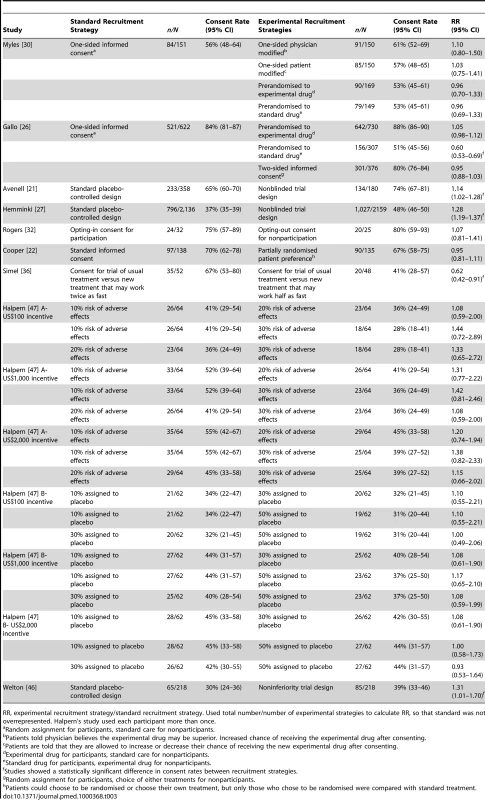

Novel trial designs

Avenell and Hemminki [15],[16] compared a standard placebo-controlled design with a nonblinded trial design (both in prevention studies) (see Figure 3 and Table 3). In the nonblinded trial design arm, randomisation occurred before participants were approached, and participants were told which treatment they had been randomised to receive before giving consent. Consent rates were higher for the nonblinded trial design than for the standard design, in which randomisation occurred after consent to trial participation (RR 1.14, 95% CI 1.02–1.28 and RR 1.28, 95% CI 1.19–1.37, respectively) [15],[16]. Welton [17] compared a noninferiority clinical study (in which both arms received an active treatment) with a placebo-controlled study of hormone replacement for postmenopausal women. Willingness to enrol appeared to be higher for the noninferiority study than for the placebo-controlled study, although the result was only just statistically significant (39% versus 30%, RR 1.31, 95% CI 1.01–1.70).

Fig. 3. Consent rates for novel trial designs.

RR, intervention recruitment strategy/standard recruitment strategy. Used total number/number of intervention strategies to calculate RR, so that the number of patients on standard strategies was not overrepresented; S, random assignment for participants, standard care for nonparticipants; 2, patients are told the physician believes the experimental drug may be superior, with an increased chance of receiving the experimental drug after consenting; 3, patients are told that they may increase or decrease their chance of receiving the new experimental drug after consenting; 4, experimental drug for participants, standard care for nonparticipants; 5, standard drug for participants, experimental drug for nonparticipants; 6, random assignment for participants, choice of either treatment for nonparticipants.

Tab. 3. Studies of novel trial designs.

RR, experimental recruitment strategy/standard recruitment strategy. Used total number/number of experimental strategies to calculate RR, so that standard was not overrepresented. Halpern's study used each participant more than once.

Gallo and Myles (both for mock studies) compared standard randomisation (random assignment for all participants and standard care for nonparticipants) with different types of randomisation designs [18],[19]. The designs were:

- increasing or decreasing the chance of receiving the experimental treatment;
- experimental treatment for all participants and standard treatment for nonparticipants (potential participants are told that they have been randomised to receive the experimental treatment, but that they will receive the standard treatment if they do not consent);
- standard care for all participants and experimental treatment for nonparticipants (potential participants are told that they have been randomised to receive the standard treatment, but that they will receive the experimental treatment if they do not consent);
- random assignment of treatment for participants and choice of treatment for nonparticipants.

The only randomisation strategy that influenced consent was “prerandomisation to standard drug” (standard care for all participants and experimental treatment for nonparticipants) in Gallo's study. It significantly reduced the consent rate compared with standard randomisation (RR 0.60, 95% CI 0.53–0.69) [18]. However, this effect was not demonstrated in Myles' study [19].
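The consent designs described above differ only in what happens to a person after they agree or decline. The sketch below writes that rule out explicitly. The `Design` names and the `treatment_received` function are ours, not from the studies. The variants that merely raise or lower the chance of receiving the experimental treatment are left out.

```python
import random
from enum import Enum


class Design(Enum):
    """Consent designs compared in the mock recruitment trials (names are ours)."""
    STANDARD = "random assignment for participants, standard care for nonparticipants"
    PRERANDOMISED_EXPERIMENTAL = "experimental treatment for participants, standard care for nonparticipants"
    PRERANDOMISED_STANDARD = "standard care for participants, experimental treatment for nonparticipants"
    CHOICE_FOR_REFUSERS = "random assignment for participants, choice of treatment for nonparticipants"


def treatment_received(design: Design, consents: bool, rng: random.Random,
                       preferred: str = "standard") -> str:
    """Return the treatment a person gets under a design, given their consent decision.

    `preferred` is only used when nonparticipants may choose their own treatment.
    """
    if design is Design.STANDARD:
        return rng.choice(["experimental", "standard"]) if consents else "standard"
    if design is Design.PRERANDOMISED_EXPERIMENTAL:
        return "experimental" if consents else "standard"
    if design is Design.PRERANDOMISED_STANDARD:
        return "standard" if consents else "experimental"
    if design is Design.CHOICE_FOR_REFUSERS:
        return rng.choice(["experimental", "standard"]) if consents else preferred
    raise ValueError(f"unknown design: {design}")


rng = random.Random(2010)  # seeded so any random allocations are reproducible
for design in Design:
    declined = treatment_received(design, consents=False, rng=rng, preferred="experimental")
    print(f"{design.name}: a person who declines receives {declined}")
```

Printing what a person who declines would receive makes the contrast explicit. Only under the design that prerandomises participants to the standard drug does declining guarantee the experimental treatment.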

Cooper compared standard consent with a partially randomised patient preference design, in which patients could choose either to be randomised or to select their own (medical or surgical) treatment [20]. We excluded patients who chose their own treatment from our analysis, because choice of treatment conflicts with the purpose of random allocation. Only patients who chose to be randomised were compared with those receiving standard RCT consent, who were offered the opportunity to participate in a clinical study in which treatment was randomly allocated. This study tested whether offering patients a choice of treatments increased consent to randomisation, compared with simply inviting them to participate in a clinical RCT (without mentioning choice of treatment). There was no difference in consent rates between standard consent and choosing to be randomised (RR 0.95, 95% CI 0.81–1.11).

Rogers compared “opting in” with “opting out” [21], in which consent was sought for participation or for nonparticipation, respectively. In the “opting out” arm, the consent rate for clinical study participation was calculated as the proportion of people who did not sign the form refusing participation. There was no difference in consent rates between the two groups (RR 1.07, 95% CI 0.81–1.41).
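The two arms of an opt-in versus opt-out comparison need different numerators: signed consent forms in one arm, and people who did not sign a refusal in the other. A minimal sketch, with hypothetical function names and counts:

```python
def opt_in_consent_rate(signed_consent: int, approached: int) -> float:
    """Opt-in arm: only those who signed a consent form count as consenting."""
    return signed_consent / approached


def opt_out_consent_rate(signed_refusal: int, approached: int) -> float:
    """Opt-out arm: everyone who did NOT sign the refusal form counts as consenting."""
    if not 0 <= signed_refusal <= approached:
        raise ValueError("signed_refusal must lie between 0 and approached")
    return (approached - signed_refusal) / approached


# Hypothetical counts, for illustration only.
print(f"opt-in:  {opt_in_consent_rate(signed_consent=40, approached=100):.0%}")   # 40%
print(f"opt-out: {opt_out_consent_rate(signed_refusal=58, approached=100):.0%}")  # 42%
```

Both rates share the same denominator (everyone approached), so they can be compared directly as an RR.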

Simel compared consent to a clinical study comparing standard medication with a new medication that worked twice as fast, and consent to a clinical study comparing standard medication with a new medication that worked half as fast [22]. Participants were not informed that this was a mock trial. The study was designed to assess patients' competence and judgement regarding clinical study participation. Not surprisingly, more patients consented to the study of the faster new medication than to the study of the slower new medication (67% versus 41%, RR 0.62, 95% CI 0.42–0.91). The difference was more marked among those who voluntarily mentioned the medication's speed of action as a factor in their decision, which may reflect better understanding of the trial information.

Halpern [14] used a factorial design to assess willingness to participate in a number of mock trials with variations in clinical study design, using paired data from the same individuals. Variations in monetary incentives in this study are discussed below under “Incentives.” There were no statistically significant differences in consent rates.
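Ratio CIs are usually computed on the log scale, so a reported RR should sit close to the geometric mean of its confidence limits. That makes a cheap check when extracting data for a review. The sketch below applies the check to the RRs quoted in this section. The function names and the 0.02 tolerance (chosen to absorb two-decimal rounding) are our own choices, not part of the review's methods.

```python
import math

# (study, RR, lower 95% CI, upper 95% CI) as reported for the novel trial designs.
REPORTED = [
    ("Avenell [15]", 1.14, 1.02, 1.28),
    ("Hemminki [16]", 1.28, 1.19, 1.37),
    ("Welton [17]", 1.31, 1.01, 1.70),
    ("Gallo [18]", 0.60, 0.53, 0.69),
    ("Cooper [20]", 0.95, 0.81, 1.11),
    ("Rogers [21]", 1.07, 0.81, 1.41),
    ("Simel [22]", 0.62, 0.42, 0.91),
]


def implied_rr(lower: float, upper: float) -> float:
    """Point estimate implied by a CI that is symmetric on the log scale:
    log(RR) is the midpoint of log(lower) and log(upper)."""
    return math.sqrt(lower * upper)


def consistent(rr: float, lower: float, upper: float, tol: float = 0.02) -> bool:
    """True if the reported RR matches its CI to within rounding error."""
    return abs(implied_rr(lower, upper) - rr) <= tol


for study, rr, lower, upper in REPORTED:
    flag = "ok" if consistent(rr, lower, upper) else "check"
    print(f"{study}: reported {rr:.2f}, implied {implied_rr(lower, upper):.2f} [{flag}]")
```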

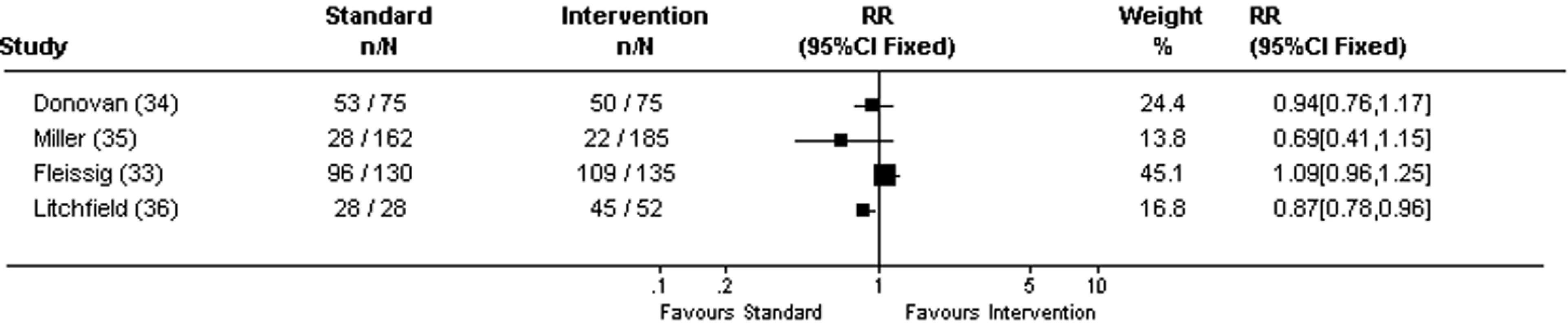

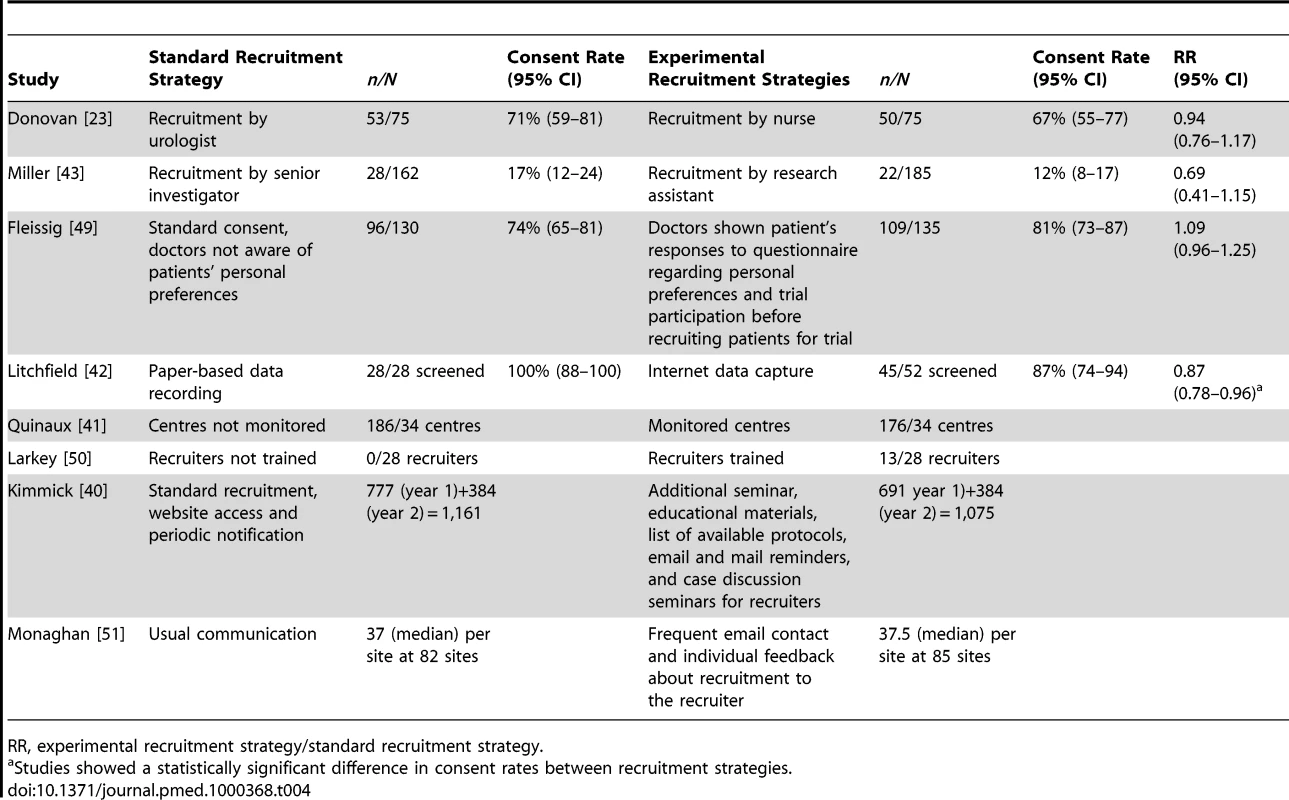

Recruiter differences

Eight recruitment trials compared recruiter differences (see Figure 4 and Table 4). Three cluster RCTs compared different strategies for engaging recruiters (e.g., standard contact versus additional monitoring of and contact with recruiters [23]–[25]). Outcome measures differed between these studies, so the results could not be combined:

- In Quinaux's study, 186 patients from 34 control centres enrolled, compared with 176 patients from 34 monitored centres [23].
- In Kimmick's study, 1,161 elderly patients (36% of total patients in the first year and 31% in the second year) enrolled from the control centres. This compared with 1,075 (32% in the first year and 31% in the second year) from the centres that received additional training and contact with investigators [24].
- Monaghan's study assessed the median number of patients recruited per site: 37.0 patients at the 82 control sites versus 37.5 patients at the 85 sites with increased contact with investigators [25].

In none of the three studies did increased contact with investigators statistically increase consent rates, and in Quinaux's and Kimmick's studies enrolment was actually lower in the intervention centres. One recruitment trial that compared untrained with trained recruiters [26] found significantly more patients enrolled when the recruiter was trained: 28 trained recruiters enrolled 13 patients, whereas 28 untrained recruiters enrolled none. Fleissig compared standard recruitment with providing recruiters with information about patient preferences [27] and found no difference in consent rates between the two methods (RR 1.09, 95% CI 0.96–1.25).

Fig. 4. Consent rates for recruiter differences.

RR, intervention recruitment strategy/standard recruitment strategy.

Tab. 4. Studies of recruiter differences.

RR, experimental recruitment strategy/standard recruitment strategy.

Donovan and Miller compared recruiter roles (doctor versus nurse, RR 0.94, 95% CI 0.76–1.17 [28]; senior investigator versus research assistant, RR 0.69, 95% CI 0.41–1.15 [29]). Although consent rates did not differ between recruiters, costs were higher for the more senior recruiter. The mean cost per patient randomised was £43.29 versus £36.40 in Donovan's study, and US$78.48 versus US$50.28 in Miller's study.

Litchfield compared internet-based with paper-based database handling [30]. Although proportionately more patients enrolled with the paper-based database, the internet database was more efficient: less time was required for data collection, and more patients were exposed to the trial. Enrolment was 100% in the paper-based group versus 87% in the internet group (RR 0.87, 95% CI 0.78–0.96), but recruiters preferred the internet database.

Incentives

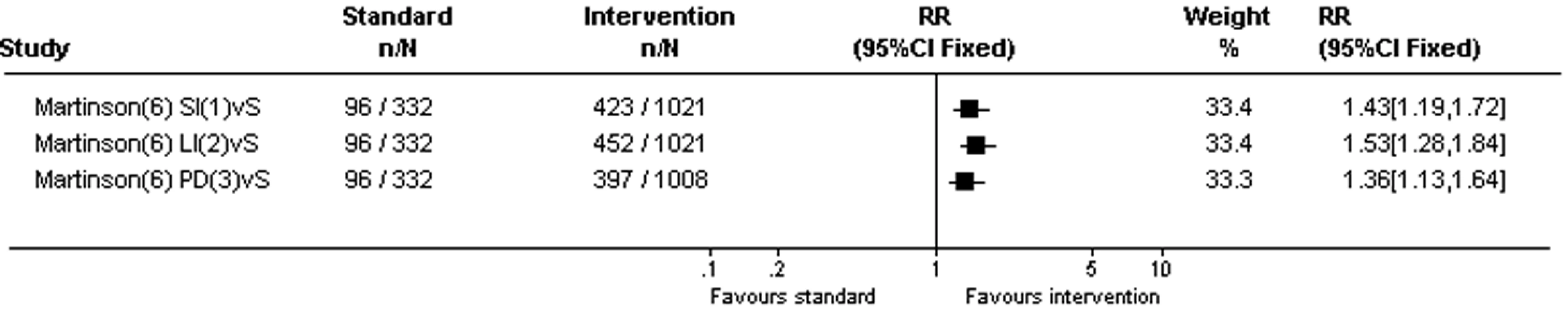

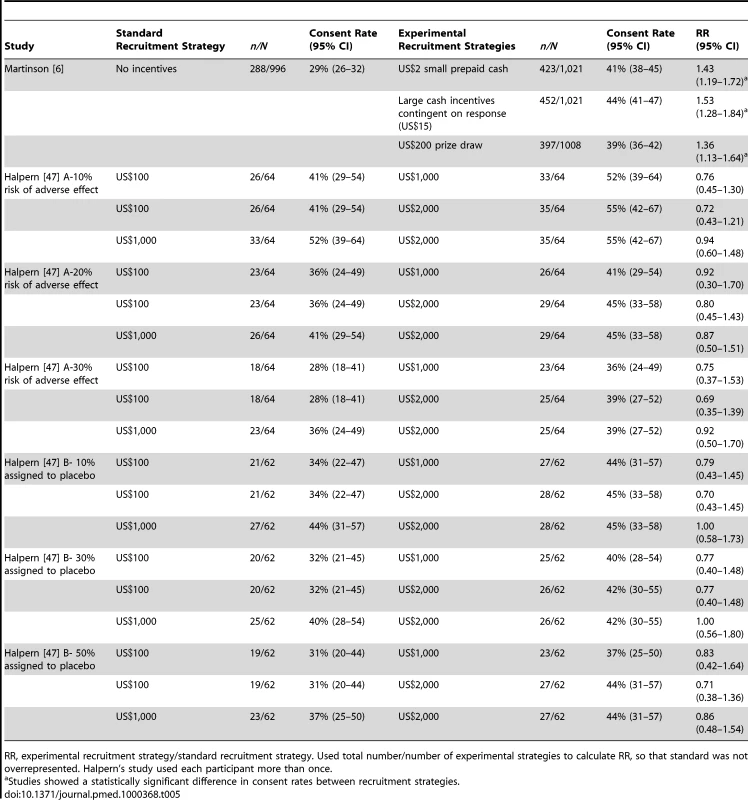

Martinson and Halpern assessed incentives for increasing recruitment (see Figure 5 and Table 5) [14],[31]. In the Martinson study, any monetary incentive, compared with no incentive, increased survey response rates and willingness to be contacted about a smoking cessation trial. The study did not measure actual recruitment to the clinical study. The consent rate with no incentive was 29%, compared with:

- 41% for a prepaid US$2 cash incentive (RR 1.43, 95% CI 1.19–1.72);
- 44% for a US$15 cash incentive contingent on completing the survey (RR 1.53, 95% CI 1.28–1.84);
- 39% for a US$200 prize draw (RR 1.36, 95% CI 1.13–1.64).

Fig. 5. Consent rates for incentives.

RR, intervention recruitment strategy/standard recruitment strategy. Used total number/number of intervention strategies to calculate RR, so that the number of patients on standard strategies was not overrepresented; S, random assignment for participants, standard care for nonparticipants; 1, small incentive (US$2 prepaid cash incentive); 2, larger incentive (US$15) contingent on response; 3, US$200 prize draw.

Tab. 5. Studies of incentives.

RR, experimental recruitment strategy/standard recruitment strategy. Used total number/number of experimental strategies to calculate RR, so that standard was not overrepresented. Halpern's study used each participant more than once.

The Halpern study assessed the effect of variations in monetary incentives on willingness to participate in a number of mock clinical studies with the varying trial designs mentioned earlier. Patients' willingness to participate increased as the payment rose from US$100 to US$2,000, irrespective of the risk of adverse effects and the risk of being assigned to placebo, although the difference was not statistically significant.
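The RRs reported for Martinson [31] differ slightly from the ratios of the rounded percentages (for example, 41/29 ≈ 1.41 against a reported 1.43). The sketch below checks whether each reported RR lies within the range of ratios compatible with percentages rounded to whole numbers. If it does, the gap is plausibly rounding rather than a transcription error. The names are ours. Splitting the shared no-incentive arm across the three comparisons, as described under Statistical Methods, leaves its proportion unchanged and so does not affect these point estimates.

```python
# Percentages and RRs for Martinson [31] as reported in the text above.
CONTROL_PCT = 29  # no incentive
arms = {
    "US$2 prepaid cash": (41, 1.43),
    "US$15 cash contingent on completing the survey": (44, 1.53),
    "US$200 prize draw": (39, 1.36),
}


def ratio_bounds(pct_a: int, pct_b: int) -> tuple[float, float]:
    """Smallest and largest ratio pct_a / pct_b compatible with both
    percentages having been rounded to the nearest whole number."""
    return (pct_a - 0.5) / (pct_b + 0.5), (pct_a + 0.5) / (pct_b - 0.5)


for label, (pct, reported_rr) in arms.items():
    low, high = ratio_bounds(pct, CONTROL_PCT)
    crude = pct / CONTROL_PCT
    verdict = "consistent" if low <= reported_rr <= high else "check source"
    print(f"{label}: crude {crude:.2f}, compatible range {low:.2f}-{high:.2f}, "
          f"reported {reported_rr:.2f} ({verdict})")
```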

Methods of providing information

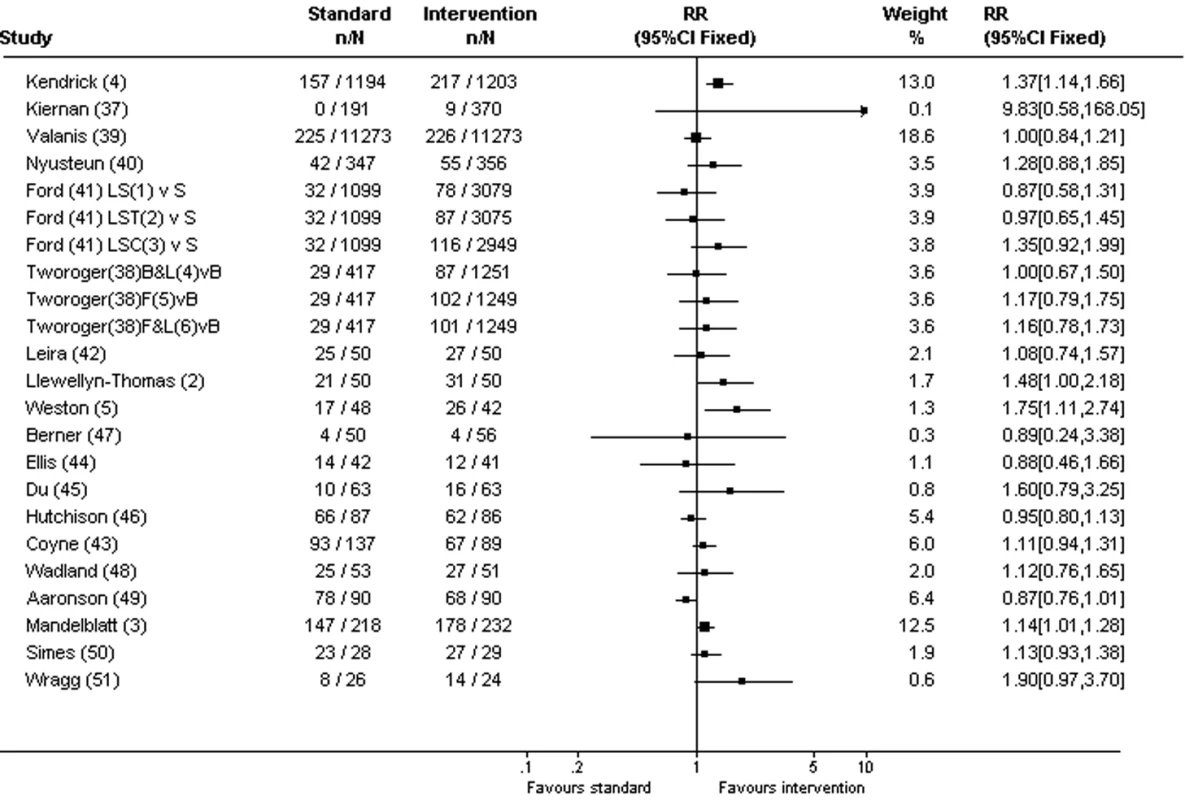

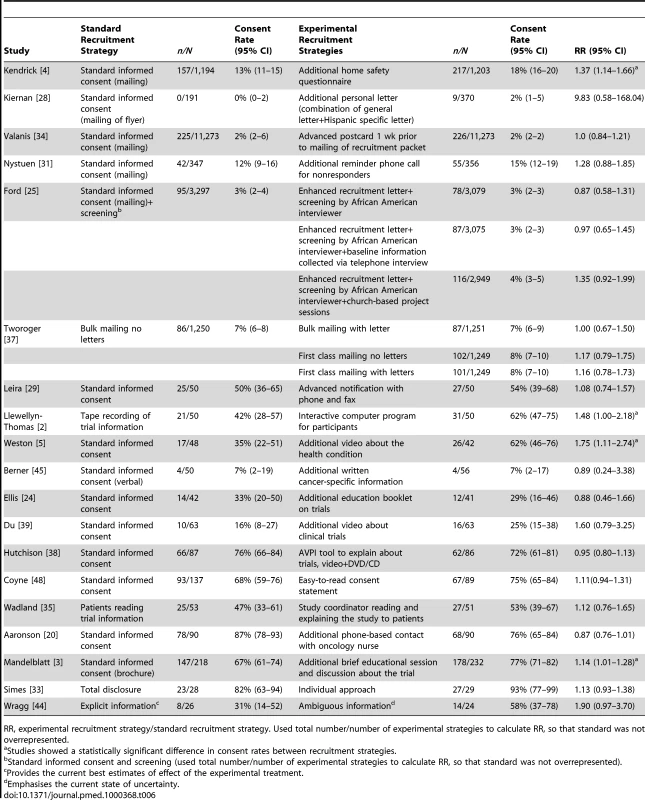

Nineteen recruitment trials compared different methods of providing information to participants, including how the information was presented and what information was provided (see Figure 6 and Table 6).

Fig. 6. Consent rates for methods of providing information.

RR, intervention recruitment strategy/standard recruitment strategy. Used total number/number of intervention strategies to calculate RR, so that the number of patients on standard strategies was not overrepresented; S, standard informed consent; B, bulk mailing; 1, enhanced recruitment letter and screening by African American interviewer; 2, enhanced recruitment letter, screening by African American interviewer, and baseline information collected via telephone interview; 3, enhanced recruitment letter, screening by African American interviewer, and church-based project sessions; 4, bulk mailing with letter; 5, first-class mailing; 6, first-class mailing with letter.

Tab. 6. Studies of methods of providing information.

RR, experimental recruitment strategy/standard recruitment strategy. Used total number/number of experimental strategies to calculate RR, so that standard was not overrepresented.

Six recruitment trials related to the mailing of recruitment material for the clinical study. The methods used to enhance recruitment were the addition of:

- a questionnaire that focused on the health problem studied (Kendrick [32]);
- a personal letter inviting participation (Kiernan and Tworoger [33],[34]);
- bulk mailing or first-class stamps (Tworoger [34]);
- an advance postcard alerting recipients to look for the recruitment packet (Valanis [35]);
- a reminder phone call for nonresponders to mailed recruitment material (Nystuen [36]);
- increasingly intensive interventions for African Americans, including a follow-up eligibility-screening phone call, an enhanced recruitment letter featuring a prominent African American man, recruitment by an African American member of the research team, and church-based project sessions (Ford [37]).

Kendrick's addition of a questionnaire focused on the health problem studied (RR 1.37, 95% CI 1.14–1.66) [32] was the only mailing strategy that increased the consent rate compared with standard mailing of recruitment material. The personal letter [33],[34], bulk or first-class mail [34], advance postcard [35], and reminder phone calls [36] did not significantly increase consent rates (see Table 6).

Leira compared two recruitment strategies for patients retrieved by helicopter [38]. Under standard consent, patients were invited to participate in the clinical study when investigators met them during the helicopter retrieval. Under the intervention, the clinical study was notified in advance by telephone and the informed consent documents were faxed before the investigators arrived. The intention-to-treat analysis showed no statistically significant difference between the two recruitment strategies (RR 1.08, 95% CI 0.74–1.57). However, 42% of the intervention group did not actually receive the intervention (fax and telephone call) for technical and logistic reasons. Coyne compared an easy-to-read consent statement with standard consent [39] and found no significant difference in consent rates (RR 1.11, 95% CI 0.94–1.31).

Three recruitment trials looked at increasing participants' understanding of the clinical trial process, which did not appear to affect recruitment [40]–[42]. Ellis compared standard informed consent with the addition of an educational booklet on clinical trials [40]. There was no difference in consent rates (unadjusted) between the two groups (RR 0.88, 95% CI 0.46–1.66). However, after adjusting for potential confounders (demographic variables, disease variables, preference for involvement in clinical decision making, anxiety, depression, and attitudes to clinical trials), participants receiving the educational booklets were significantly less likely to consent to clinical study participation (OR 0.22, 95% CI 0.04–1.0). Du compared standard care with the addition of a brief video about cancer clinical studies among patients with lung cancer [41]. Consent rates were not statistically different between the two groups. Hutchison compared standard care (where patients discuss clinical care and clinical study participation with the administration of a trial-specific information sheet and consent form) with the addition of an audiovisual patient information tool (with choice of video, CD-Rom, or DVD format), which addressed clinical trial information [42], with no difference in consent rates between the two groups (76% versus 72%, RR 0.95, 95% CI 0.80–1.13).

Three recruitment trials assessed strategies that aimed to increase participants' understanding of their underlying condition. Llewellyn-Thomas compared a tape-recorded reading of clinical study information with an interactive computer program in which participants (oncology patients receiving radiation therapy) were actively involved in the information search process [43]. The consent rate was higher in the interactive group (RR 1.48, 95% CI 1.00–2.18). Weston compared standard informed consent with the addition of a video explaining trial information and the health problem studied [44]. The consent rate was higher in the video group when initially assessed (RR 1.75, 95% CI 1.11–2.74), but the difference was not statistically significant at 2-week follow-up (not shown in Table 6). Berner's recruitment trial compared standard care (verbal communication) with the addition of patient information files containing clinical information on cancer specific to the patient [45]. There was no difference in the rate of recruitment to cancer trials between the two groups (7% versus 7%, RR 0.89, 95% CI 0.24–3.38), although not all patients were eligible for clinical study enrolment.

Three recruitment trials compared standard consent with additional personal contact with research staff:

- a study coordinator reading and explaining the clinical study (Wadland [46]);
- additional phone-based contact with an oncology nurse (Aaronson [47]);
- an additional educational session about the disease and the risks and benefits of clinical study participation, for an oncology prevention study (Mandelblatt [48]).

There was no difference in consent rates between standard consent and the study coordinator reading and explaining the clinical study (RR 1.12, 95% CI 0.76–1.65) [46]. Nor was there a difference with additional phone-based contact with the oncology nurse (RR 0.87, 95% CI 0.76–1.01) [47]. However, consent was higher among participants who attended the education session (RR 1.14, 95% CI 1.01–1.28) [48].

There were two recruitment trials assessing framing of recruitment information. In Simes' 1986 trial of recruitment for a cancer treatment study [49], total disclosure of information about the clinical study was compared with an individual approach where doctors informed patients about the clinical study in a manner they thought best. This study assessed both willingness to enrol in the clinical study and actual study participation. There were no differences in actual consent rates between the total disclosure and individual approach groups (RR 1.13, 95% CI 0.93–1.38). However, actual consent rates were higher than the stated willingness to participate in the clinical study (actual consent rates were 82% and 93% in the total disclosure and individual approach groups, respectively, compared with rates of 65% and 88%, respectively, for willingness to participate in the clinical study). Wragg compared framing of recruitment information explicitly (to provide the best current estimates of effect for the experimental treatment) with framing information ambiguously (to emphasise the uncertainty and relative costs and benefits of the experimental treatment) [50]. There was no difference in consent rates between the “ambiguously framed” group and the “explicitly framed” group (RR 1.90, 95% CI 0.97–3.70).

Discussion

Trials of recruitment strategies have evaluated all steps in the recruitment process, including different methods of trial design, randomisation, provision of information, and recruiter differences. In this systematic review, we found that strategies that increased potential participants' awareness of the health problem being studied by engaging them in the learning process significantly increased consent rates (in both real and mock trials). These strategies included the addition of a questionnaire that focused on the health problem studied and additional educational sessions, videos, and interactive programs about the diseases studied [32],[43],[44],[48]. Strategies that increased understanding of the clinical trial process (e.g., provision of an educational booklet [40], video [41], or audiovisual patient information tool [42] on clinical trials, or provision of an easy-to-read consent statement [39]) showed no evidence of improved recruitment. This finding suggests that it is increased education about the health problem being studied, rather than education about the clinical trial process, that increased trial participation. There were insufficient data to evaluate whether the effects of the different recruitment strategies were constant across all health conditions, but there was no clear trend for these strategies to be context specific (see Table 1).

The recruitment trials of how recruitment information was provided (the technique of presentation, how the information was framed, who presented it, and when it was presented) did not show a difference between strategies. This suggests that the content of the information, rather than how, when, or by whom it was presented, influenced recruitment. A recent study (published after completion of our last search update) also showed that publicity about the trial did not increase recruitment [51].

Although a previous observational study showed that framing recruitment information to emphasise uncertainty enhanced recruitment [52], when this was tested with the rigour of RCT methodology [49],[50], framing did not appear to influence recruitment. Unexpectedly, we also found no evidence that the role of the recruiter influenced recruitment (although costs were higher for senior recruiters [28],[29]).

In our review, one recruitment trial found that a noninferiority clinical study (with active treatment arms) had higher consent rates than a placebo-controlled clinical study. This finding is consistent with previous findings that patients preferred “trials with all active arms to placebo-controlled trials” [53]. Also, recruitment trials that compared a standard placebo-controlled design with a nonblinded trial design demonstrated that patients were more willing to participate in a clinical study if they knew which treatment they were receiving when consenting, even if the treatment was randomly predetermined. These studies illustrate people's anxieties regarding the unknowns of clinical trial participation. Despite the higher consent rates for the nonblinded trial design, the differential loss to follow up in the two treatment arms of the nonblinded trial is likely to jeopardise the validity of the results, as comparison of outcomes between the two treatment groups would be subject to selection bias. For example, patients may be more likely to drop out if they are unhappy with the treatment they were assigned. In the two included studies of nonblinded trial designs, dropout rates were higher in the active treatment arms than in the placebo arms.
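The selection-bias argument can be made concrete with a toy example. In the minimal Python sketch below, both arms have identical outcome scores, so the true treatment effect is zero. The dropout pattern is a hypothetical assumption for illustration only: in the active arm, the three participants with the poorest outcomes drop out, while only one drops out of the placebo arm. A complete-case comparison of those who remain then suggests a spurious benefit of the active treatment. The helper `complete_cases` and all numbers are invented.

```python
from statistics import mean

# Hypothetical outcome scores (higher is better); identical in both arms, so the true effect is zero
placebo_arm = [2, 3, 4, 5, 6, 7, 8, 9]
active_arm = [2, 3, 4, 5, 6, 7, 8, 9]


def complete_cases(outcomes, n_dropouts):
    """Keep only the participants who remain in the study.

    Assumption for illustration: the n_dropouts participants with the worst outcomes leave.
    """
    return sorted(outcomes)[n_dropouts:]


true_difference = mean(active_arm) - mean(placebo_arm)
# Differential loss to follow up: more dropouts in the active arm than in the placebo arm
observed_difference = mean(complete_cases(active_arm, 3)) - mean(complete_cases(placebo_arm, 1))

print(f"true difference: {true_difference:.2f}")
print(f"complete-case difference: {observed_difference:.2f}")
```

Here the complete-case analysis reports a one-point advantage for the active arm that is produced entirely by who dropped out, not by the treatment.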

The inclusion of recruitment trials of recruitment to mock clinical studies enabled assessment of recruitment strategies that, for equity reasons, would be difficult to compare in real trials (such as different randomisation designs or different monetary incentives). Some strategies may be acceptable when used in isolation but inappropriate when more than one is used within the same clinical study. For example, mock trials tested the hypothesis that potential participants are more willing to participate in a study if they have an increased chance of receiving the experimental treatment. This strategy has been adopted by many vaccine and other clinical studies in the belief that potential participants are more likely to participate if they believe they have a higher chance of receiving the (desirable) experimental treatment. However, we found that increasing the likelihood of receiving the experimental treatment [19] (or reducing the risk of receiving placebo) [14] did not appear to affect the consent rate, suggesting that people's decisions about clinical study participation are not influenced by whether they are more or less likely to receive a particular treatment. Other strategies are more controversial: for example, the only consent strategy that appeared to affect the consent rate for a mock trial was “prerandomisation to standard drug” [18], in which participants were given the standard drug and nonparticipants were given the experimental drug. Fewer people were willing to consent to this type of clinical study than to a clinical study with standard randomisation for all participants. It is unlikely that such a method could ethically be employed in a real situation. Monetary incentives appeared to increase consent compared with no monetary incentives [31], but the amount of money appeared to be less important [14].

As results of mock clinical studies are based on whether participants are willing to enrol in a clinical study (rather than whether they actually consented), extrapolation to real clinical studies may not be valid. Stated willingness to participate and actual participation may also differ. In the recruitment trial comparing standard consent with the addition of a video explaining clinical trial information and the health problem studied for a mock clinical study, significantly more participants in the video group were initially willing to enrol, but the difference was no longer statistically significant 2 weeks later [44]. Conversely, in Simes' 1986 study [49], more participants actually consented to clinical study participation than had indicated willingness to participate, perhaps reflecting patients' deference to doctors' advice in the 1980s (when there was less emphasis on patient autonomy than today). It also illustrates the influence of the doctor on patient behaviour [53].

Although there have been two previous systematic reviews on strategies to enhance recruitment to research [9],[10], our study is the most recent and uses a more targeted and rigorous search method. We conducted a more comprehensive search (including more databases than Watson's study [10]) and included earlier as well as later studies, together with studies of recruitment for mock trials that test recruitment strategies that would otherwise be difficult to compare for equity reasons. Our methods were also more rigorous (with two reviewers examining all titles, abstracts, and relevant papers), with inclusion criteria targeting recruitment of participants for RCTs only (excluding studies about recruitment to observational studies, questionnaires, health promotional activities, and other health care interventions). We targeted recruitment to RCTs because recruitment to them is more difficult: potential participants must consent to participation in research in which their treatment is unknown. The Mapstone study, conducted in 2002 and published in 2007 [9], included recruitment for any type of research study, and the Watson study [10], although targeting recruitment strategies used for RCTs, searched only from 1996 to 2004 in a limited number of electronic databases (without hand searching), using only the keywords “recruitment strategy” or “recruitment strategies.” Our study identified more studies than the previous reviews (37 compared with 14 and 15 studies) and provides a better understanding of the factors that influence potential participants' decisions to take part in clinical RCTs. Although both previous reviews highlighted effective and ineffective strategies, neither attempted to examine the differences between successful and unsuccessful recruitment strategies.

Our findings are consistent with the health belief model, according to which people are more likely to adopt a health behaviour (such as participation in a clinical study) if they perceive that they are at risk of a significant health problem [54]. Informing potential participants about the health problem being studied and engaging them in the learning process is not only educational and constructive but also likely to enhance clinical trial participation.

Limitations

Because of major differences in recruitment methods, populations, types of clinical studies that were recruiting, and outcomes measured, we did not combine the results statistically in a meta-analysis. In many of the smaller recruitment trials, the failure to find a significant difference in consent rates could be related to small sample size (type II error). There may also be publication bias. However, as more than 70% (27/37) of the included studies had a nonsignificant result, we are hopeful that publication bias is minimal. Given that the interventions we considered have no commercial value, we suggest that publication bias may be less likely than for other interventions.
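The type II error point can be quantified with the standard normal-approximation sample-size formula for comparing two proportions. The Python sketch below is illustrative only. The 50% baseline consent rate, 80% power, and two-sided 5% significance level are hypothetical inputs, not values from the review, and the function name `n_per_arm` is invented. The RR of 1.14 echoes the education-session result; 1.5 is a hypothetical larger effect for comparison.

```python
import math
from statistics import NormalDist


def n_per_arm(p_control: float, rr: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per arm to detect a given RR of consent.

    Uses the normal-approximation formula for comparing two independent
    proportions with a two-sided test at significance level alpha.
    """
    p_treat = p_control * rr
    if not 0 < p_treat < 1:
        raise ValueError("p_control * rr must lie strictly between 0 and 1")
    p_bar = (p_control + p_treat) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_treat * (1 - p_treat))
    ) ** 2
    return math.ceil(numerator / (p_treat - p_control) ** 2)


# Hypothetical 50% baseline consent rate
for rr in (1.14, 1.5):
    print(f"RR {rr}: about {n_per_arm(0.5, rr)} participants per arm")
```

Under these assumptions, detecting an RR of 1.14 needs close to 800 people per arm, whereas an RR of 1.5 needs fewer than 60. A small recruitment trial can therefore easily miss a modest but real effect.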

The majority of the included trials were conducted in developed countries, with a substantial proportion in the US. We acknowledge that developed countries' health systems may be very different from those of less-developed countries and hence the results of this systematic review may not be generalizable to other countries.

The main limitation of the study, arising from its prolonged conduct (from 2000 to 2009), was that the search strategy had to be modified with subsequent search updates owing to changes in MEDLINE MeSH heading definitions. Because of these changes (and the large number of titles and abstracts searched), the reason for excluding each study cannot be provided. The abstract of the first version of this systematic review (which included nonrandomised studies owing to the lack of randomised recruitment trials on the subject at the time) was published in conference proceedings in 2002 [11], and a later version limited to randomised studies was published in conference proceedings in 2007 [12].

Conclusion

Our systematic review of recruitment strategies for enhancing participation in clinical RCTs has identified a number of effective and ineffective recruitment strategies. Grouped together, the statistically significant strategies either engaged participants in learning about the health problem being studied and its impact on their health or informed participants of the treatment they had been randomised to receive (nonblinded trial design). However, as there was differential loss to follow up between treatment arms with the nonblinded trial design, this design is likely to jeopardise the validity of the results. Monetary incentives may also increase recruitment, but as this was tested in a mock trial, and as another mock trial showed no difference in consent rates between different amounts of monetary incentive, this finding needs to be interpreted with caution.

Future RCTs comparing standard recruitment with recruitment strategies that engage participants in the learning process (using various methods of delivering the recruitment material) may confirm the effectiveness of this concept. This research may be particularly useful for testing strategies that expose large numbers of potential participants to recruitment information, such as interactive internet strategies.

Supporting Information

References

1. Barton S (2000) Which clinical studies provide the best evidence? The best RCT still trumps the best observational study. BMJ 321: 255–256.

2. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS (1996) Evidence based medicine: what it is and what it isn't. BMJ 312: 71–72.

3. Lovato LC, Hill K, Hertert S, Hunninghake DB, Probstfield JL (1997) Recruitment for controlled clinical trials: literature summary and annotated bibliography. Control Clin Trials 18: 328–352.

4. Swanson GM, Ward AJ (1995) Recruiting minorities into clinical trials: toward a participant-friendly system. J Natl Cancer Inst 87: 1747–1759.

5. Walson PD (1999) Patient recruitment: US perspective. Pediatrics 104: 619–622.

6. Easterbrook PJ, Matthews DR (1992) Fate of research studies. J R Soc Med 85: 71–76.

7. Baines CJ (1984) Impediments to recruitment in the Canadian National Breast Screening Study: response and resolution. Control Clin Trials 5: 129–140.

8. Zifferblatt SM (1975) Recruitment in large-scale clinical trials. In: Weiss SM, editor. Proceedings of the National Heart and Lung Institute Working Conference on Health Behavior. Bethesda: NIH. pp. 187–195.

9. Mapstone J, Elbourne D, Roberts I (2007) Strategies to improve recruitment to research studies. Cochrane Database Syst Rev: MR000013.

10. Watson JM, Torgerson DJ (2006) Increasing recruitment to randomised trials: a review of randomised controlled trials. BMC Med Res Methodol 6: 34.

11. Caldwell P, Craig J, Hamilton S, Butow PN (2002) Strategies for recruitment to RCTs: a systematic review of controlled trials and observational studies. International Clinical Trials Symposium: Improving Health Care in the New Millennium; 2002 Oct 21; Sydney, Australia. http://www.ctc.usyd.edu.au/education/4news/Symposium2002_report/caldwell.htm

12. Caldwell P, Craig J, Hamilton S, Butow PN (2007) Systematic review of strategies for enhancing recruitment of participants for randomised controlled trials. International Clinical Trials Symposium; 2007 Sep 24; Sydney, Australia.

13. Higgins J, Green S, Cochrane C (2008) Cochrane handbook for systematic reviews of interventions. Chichester, England: Wiley-Blackwell.

14. Halpern SD, Karlawish JH, Casarett D, Berlin JA, Asch DA (2004) Empirical assessment of whether moderate payments are undue or unjust inducements for participation in clinical trials. Arch Intern Med 164: 801–803.

15. Avenell A, Grant AM, McGee M, McPherson G, Campbell MK (2004) The effects of an open design on trial participant recruitment, compliance and retention: a randomized controlled trial comparison with a blinded, placebo-controlled design. Clin Trials 1: 490–498.

16. Hemminki E, Hovi S-L, Veerus P, Sevon T, Tuimala R (2004) Blinding decreased recruitment in a prevention trial of postmenopausal hormone therapy. J Clin Epidemiol 57: 1237–1243.

17. Welton AJ, Vickers MR, Cooper JA, Meade TW, Marteau TM (1999) Is recruitment more difficult with a placebo arm in randomised controlled trials? A quasirandomised, interview based study. BMJ 318: 1114–1117.

18. Gallo C, Perrone F, De Placido S, Giusti C (1995) Informed versus randomised consent to clinical trials. Lancet 346: 1060–1064.

19. Myles PS, Fletcher HE, Cairo S, Madder H, McRae R (1999) Randomized trial of informed consent and recruitment for clinical trials in the immediate preoperative period. Anesthesiology 91: 969–978.

20. Cooper KG, Grant AM, Garratt AM (1997) The impact of using a partially randomised patient preference design when evaluating alternative managements for heavy menstrual bleeding. BJOG 104: 1367–1373.

21. Rogers CG, Tyson JE, Kennedy KA, Broyles RS, Hickman JF (1998) Conventional consent with opting in versus simplified consent with opting out: an exploratory trial for studies that do not increase patient risk. J Pediatr 132: 606–611.

22. Simel DL, Feussner JR (1991) A randomized controlled trial comparing quantitative informed consent formats. J Clin Epidemiol 44: 771–777.

23. Quinaux E, Lienard JL, Slimani Z, Jouhard A, Piedbois P, Buyse M (2003) Impact of monitoring visits on patient recruitment and data quality: case study of a phase IV trial in oncology [conference abstract]. Control Clin Trials 24: 99S.

24. Kimmick GG, Peterson BL, Kornblith AB, Mandelblatt J, Johnson JL (2005) Improving accrual of older persons to cancer treatment trials: a randomized trial comparing an educational intervention with standard information: CALGB 360001. J Clin Oncol 23: 2201–2207.

25. Monaghan H, Richens A, Colman S, Currie R, Girgis S (2007) A randomised trial of the effects of an additional communication strategy on recruitment into a large-scale, multi-centre trial. Contemp Clin Trials 28: 1–5.

26. Larkey LK, Staten LK, Ritenbaugh C, Hall RA, Buller DB (2002) Recruitment of Hispanic women to the Women's Health Initiative: the case of Embajadoras in Arizona. Control Clin Trials 23: 289–298.

27. Fleissig A, Jenkins V, Fallowfield L (2001) Results of an intervention study to improve communication about randomised clinical trials of cancer therapy. Eur J Cancer 37: 322–331.

28. Donovan JL, Peters TJ, Noble S, Powell P, Gillatt D (2003) Who can best recruit to randomized trials? Randomized trial comparing surgeons and nurses recruiting patients to a trial of treatments for localized prostate cancer (the ProtecT study). J Clin Epidemiol 56: 605–609.

29. Miller NL, Markowitz JC, Kocsis JH, Leon AC, Brisco ST (1999) Cost effectiveness of screening for clinical trials by research assistants versus senior investigators. J Psychiatr Res 33: 81–85.

30. Litchfield J, Freeman J, Schou H, Elsley M, Fuller R (2005) Is the future for clinical trials internet-based? A cluster randomized clinical trial. Clin Trials 2: 72–79.

31. Martinson BC, Lazovich D, Lando HA, Perry CL, McGovern PG (2000) Effectiveness of monetary incentives for recruiting adolescents to an intervention trial to reduce smoking. Prev Med 31: 706–713.

32. Kendrick D, Watson M, Dewey M, Woods AJ (2001) Does sending a home safety questionnaire increase recruitment to an injury prevention trial? A randomised controlled trial. J Epidemiol Community Health 55: 845–846.

33. Kiernan M, Phillips K, Fair JM, King AC (2000) Using direct mail to recruit Hispanic adults into a dietary intervention: an experimental study. Ann Behav Med 22: 89–93.

34. Tworoger SS, Yasui Y, Ulrich CM, Nakamura H, LaCroix K (2002) Mailing strategies and recruitment into an intervention trial of the exercise effect on breast cancer biomarkers. Cancer Epidemiol Biomarkers Prev 11: 73–77.

35. Valanis B, Blank J, Glass A (1998) Mailing strategies and costs of recruiting heavy smokers in CARET, a large chemoprevention trial. Control Clin Trials 19: 25–38.

36. Nystuen P, Hagen KB (2004) Telephone reminders are effective in recruiting nonresponding patients to randomized controlled trials. J Clin Epidemiol 57: 773–776.

37. Ford ME, Havstad SL, Davis SD (2004) A randomized trial of recruitment methods for older African American men in the Prostate, Lung, Colorectal and Ovarian (PLCO) Cancer Screening Trial. Clin Trials 1: 343–351.

38. Leira EC, Ahmed A, Lamb DL, Olalde HM, Callison RC (2009) Extending acute trials to remote populations: a pilot study during interhospital helicopter transfer. Stroke 40: 895–901.

39. Coyne CA, Xu R, Raich P, Plomer K, Dignan M (2003) Randomized, controlled trial of an easy-to-read informed consent statement for clinical trial participation: a study of the Eastern Cooperative Oncology Group. J Clin Oncol 21: 836–842.

40. Ellis PM, Butow PN, Tattersall MH (2002) Informing breast cancer patients about clinical trials: a randomized clinical trial of an educational booklet. Ann Oncol 13: 1414–1423.

41. Du W, Mood D, Gadgeel S, Simon MS (2008) An educational video to increase clinical trials enrollment among lung cancer patients. J Thorac Oncol 3: 23–29.

42. Hutchison C, Cowan C, McMahon T, Paul J (2007) A randomised controlled study of an audiovisual patient information intervention on informed consent and recruitment to cancer clinical trials. Br J Cancer 97: 705–711.

43. Llewellyn-Thomas HA, Thiel EC, Sem FW, Woermke DE (1995) Presenting clinical trial information: a comparison of methods. Patient Educ Couns 25: 97–107.

44. Weston J, Hannah M, Downes J (1997) Evaluating the benefits of a patient information video during the informed consent process. Patient Educ Couns 30: 239–245.

45. Berner ES, Partridge EE, Baum SK (1997) The effects of the PDQ patient information file (PIF) on patients' knowledge, enrollment in clinical trials, and satisfaction. J Cancer Educ 12: 121–125.

46. Wadland WC, Hughes JR, Secker-Walker RH, Bronson DL, Fenwick J (1990) Recruitment in a primary care trial on smoking cessation. Fam Med 22: 201–204.

47. Aaronson NK, Visser-Pol E, Leenhouts GH, Muller MJ, van der Schot AC (1996) Telephone-based nursing intervention improves the effectiveness of the informed consent process in cancer clinical trials. J Clin Oncol 14: 984–996.

48. Mandelblatt J, Kaufman E, Sheppard VB, Pomeroy J, Kavanaugh J (2005) Breast cancer prevention in community clinics: will low-income Latina patients participate in clinical trials? Prev Med 40: 611–612.

49. Simes RJ, Tattersall MH, Coates AS, Raghavan D, Solomon HJ (1986) Randomised comparison of procedures for obtaining informed consent in clinical trials of treatment for cancer. Br Med J (Clin Res Ed) 293: 1065–1068.

50. Wragg JA, Robinson EJ, Lilford RJ (2000) Information presentation and decisions to enter clinical trials: a hypothetical trial of hormone replacement therapy. Soc Sci Med 51: 453–462.

51. Pighills A, Torgerson DJ, Sheldon T (2009) Publicity does not increase recruitment to falls prevention trials: the results of two quasi-randomized trials. J Clin Epidemiol 62: 1332–1335.

52. Leader MA, Neuwirth E (1978) Clinical research and the noninstitutional elderly: a model for subject recruitment. J Am Geriatr Soc 26: 27–31.

53. Caldwell PH, Butow PN, Craig JC (2003) Parents' attitudes to children's participation in randomized controlled trials. J Pediatr 142: 554–559.

54. Becker MH (1974) The health belief model and personal health behavior. Health Educ Monogr 2.


The article was published in PLOS Medicine, 2010, Issue 11.
