Use of Expert Panels to Define the Reference Standard in Diagnostic Research: A Systematic Review of Published Methods and Reporting

Background:

In diagnostic studies, a single and error-free test that can be used as the reference (gold) standard often does not exist. One solution is the use of panel diagnosis, i.e., a group of experts who assess the results from multiple tests to reach a final diagnosis in each patient. Although panel diagnosis, also known as consensus or expert diagnosis, is frequently used as the reference standard, guidance on preferred methodology is lacking. The aim of this study is to provide an overview of methods used in panel diagnoses and to provide initial guidance on the use and reporting of panel diagnosis as reference standard.

Methods and Findings:

PubMed was systematically searched for diagnostic studies applying a panel diagnosis as reference standard published up to May 31, 2012. We included diagnostic studies in which the final diagnosis was made by two or more persons based on results from multiple tests. General study characteristics and details of panel methodology were extracted. Eighty-one studies were included, of which most reported on psychiatry (37%) and cardiovascular (21%) diseases. Data extraction was hampered by incomplete reporting; one or more pieces of critical information about panel reference standard methodology was missing in 83% of studies. In most studies (75%), the panel consisted of three or fewer members. Panel members were blinded to the results of the index test in 31% of studies. Reproducibility of the decision process was assessed in 17 (21%) studies. Reported details on panel constitution, information for diagnosis and methods of decision making varied considerably between studies.

Conclusions:

Methods of panel diagnosis varied substantially across studies and many aspects of the procedure were either unclear or not reported. On the basis of our review, we identified areas for improvement and developed a checklist and flow chart for initial guidance for researchers conducting and reporting studies involving panel diagnosis.

Please see later in the article for the Editors' Summary

Published in the journal: PLoS Med 10(10): e1001531. doi:10.1371/journal.pmed.1001531

Category: Research Article

doi: https://doi.org/10.1371/journal.pmed.1001531

Introduction

Different types of diagnostic studies, e.g., studies assessing the diagnostic accuracy of a single test or developing a multivariable diagnostic model, all face the key challenge of obtaining the correct final diagnosis in each subject. A final diagnosis is necessary to calculate the accuracy measures of the diagnostic test(s) or model(s) under study. Ideally, a single reference test to classify the condition of interest is preferred. For most conditions, however, such a single and error-free test, also known as a reference or “gold” standard, is not available [1]. This is problematic, as errors in the final disease classification can seriously bias the results [1],[2].
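To make the dependence on the final diagnosis concrete, the following minimal sketch computes sensitivity and specificity of an index test against a reference diagnosis, and then repeats the calculation with one misclassified reference label. The function name and the six-patient data set are hypothetical and serve only as an illustration; they are not taken from the reviewed studies.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(index_test: Sequence[bool],
                            reference: Sequence[bool]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) of an index test against a reference diagnosis."""
    if len(index_test) != len(reference):
        raise ValueError("index_test and reference must have the same length")
    pairs = list(zip(index_test, reference))
    tp = sum(1 for t, r in pairs if t and r)          # test positive, disease present
    fn = sum(1 for t, r in pairs if not t and r)      # test negative, disease present
    tn = sum(1 for t, r in pairs if not t and not r)  # test negative, disease absent
    fp = sum(1 for t, r in pairs if t and not r)      # test positive, disease absent
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one non-diseased patient")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical data: index test results and final diagnoses for six patients.
index_test = [True, True, False, True, False, False]
reference = [True, True, True, False, False, False]
sens, spec = sensitivity_specificity(index_test, reference)

# Same patients, but the third patient is wrongly classified as disease-free.
reference_with_error = [True, True, False, False, False, False]
biased_sens, biased_spec = sensitivity_specificity(index_test, reference_with_error)
```

In this toy example a single error in the reference standard moves the estimated sensitivity from 2/3 to 1.0, which is the kind of bias the text describes.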

One strategy to overcome the lack of a single, perfect reference test is to use multiple pieces of information to improve classification of the presence or absence of the disease. Several methods for utilizing multiple test results exist. These include so-called composite reference standards in which a predefined rule is used to combine different test results into a reference standard (for example, the combination of culture and PCR for the detection of infectious diseases) [3]; latent class analysis, where the multiple test results are modeled as functions of the unknown (or latent) disease status (for example, in the evaluation of the clinical accuracy in tests for pertussis) [4],[5]; and a so-called panel diagnosis, in which a group of experts determine the final diagnosis in each patient on the basis of all available relevant patient data (for example, often used in studies on heart failure) [1],[6].
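A composite reference standard can be sketched as a fixed rule applied identically to every patient. The example below assumes an "either test positive" rule for culture and PCR; the text does not specify the rule used in practice, so the rule, the class name, and the field names are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InfectionTests:
    """Hypothetical container for the two component test results of one patient."""
    culture_positive: bool
    pcr_positive: bool


def composite_reference(tests: InfectionTests) -> bool:
    """Assumed rule: disease is classified as present if either component test is positive."""
    return tests.culture_positive or tests.pcr_positive


# Hypothetical patients illustrating the rule.
patients = [
    InfectionTests(culture_positive=True, pcr_positive=False),
    InfectionTests(culture_positive=False, pcr_positive=True),
    InfectionTests(culture_positive=False, pcr_positive=False),
]
final_diagnoses = [composite_reference(p) for p in patients]
```

Unlike a panel diagnosis, such a rule leaves no room for interpreting one result in the light of the others, which is the trade-off between the two approaches.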

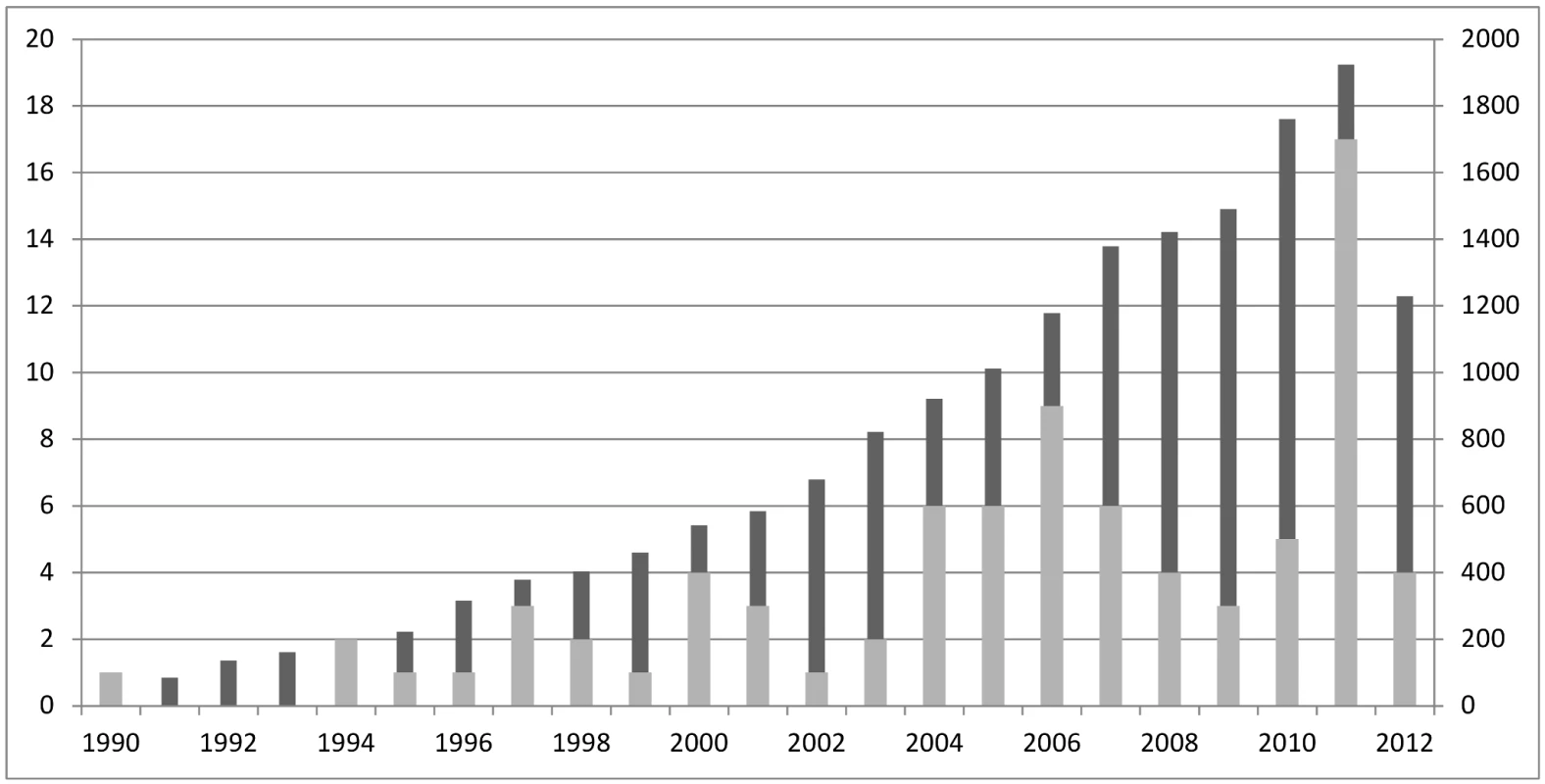

In this review, we focus on panel diagnosis because its use appears to be increasing (Figure 1) and no formal guidance exists on the execution and reporting of this type of reference standard. Although terms like “consensus diagnosis” and “expert panel diagnosis” are also often used, we will use the more uniform term “panel diagnosis.” As a panel diagnosis largely resembles clinical practice in that multiple test results are assessed simultaneously by a clinician [7], it seems an acceptable method for obtaining a final diagnosis when a single gold standard test is lacking. Nonetheless, there are various ways to perform a panel diagnosis. These variations could arise from the chosen panel constitution and the methods applied to reach the decisions on the presence or absence of the target disease. Unfortunately, there is neither theoretical evidence, nor practical guidance on the preferred methodology to conduct panel diagnoses.

Fig. 1. Distribution of search results over time.

Dark grey columns represent the number of articles found with the search strategy, numbers displayed on right y-axis; light grey columns represent the articles included in the review after full text reading, numbers displayed on left y-axis.

We performed a systematic review on reported panel diagnosis methodology to address the following aims: (1) To describe the variation in methods applied in published studies using a panel diagnosis; (2) To assess the quality of reporting of the methods related to the panel diagnosis process in these studies; (3) To provide initial guidance for researchers reporting an existing study or designing a new study involving a panel diagnosis.

Methods

We performed our review in accordance with the PRISMA guidelines for systematic reviews [8], but as methodological reviews differ from systematic reviews in several ways [9], not all items were applicable.

Search and Inclusion Criteria

A PubMed search for articles on diagnostic studies using expert panels or consensus methods as final diagnosis was performed from its inception up to May 2012 by one of the authors (LCMB). The search strategy was explicitly very broad in order not to miss any relevant articles because of terminology used. The strategy included ([diagnosis] AND ([expert panel] OR [consensus methods] OR [consensus diagnosis])). The search was limited to studies in humans, and written in English. Because of theoretical saturation [9], meaning that additional searches will only add papers without adding information, we only performed the search in the largest electronic medical database (PubMed) and did not update the search beyond May 2012.

Studies had to meet three criteria to be included in the analysis: (1) The study was diagnostic, including studies on prevalence of the condition of interest, diagnostic accuracy, and multivariable (diagnostic) prediction models. (2) The reference standard used was based on the results of multiple tests, which were interpreted by multiple experts (two or more) to make a final diagnosis. (3) The study was an original report, excluding letters, editorials, case-reports, commentaries, and reviews.

Data Extraction

Titles and abstracts of the articles retrieved by the database search were screened and selected by LCMB for eligibility and identification for full-text reading. Articles were considered eligible for full-text reading when the abstract included clues that a panel diagnosis might have been used as reference standard. Full texts of the identified articles were read and the data-extraction form was completed by two observers in an independent (blinded) way (LCMB read and scored all articles; BDLB acted as the second reviewer in 120 articles and JBR in 64 articles).

The data extraction form (Protocol S1) was developed, piloted, and updated by LCMB, BDLB, and JBR and inspired by the STAndards for the Reporting of Diagnostic accuracy studies (STARD) guideline [10] and QUADAS-2 tool [11]. It was designed to collect descriptive information on how individual studies implemented the panel approach in their study and to collect normative information on the completeness of the reported methods (information levels A and B). General items about study aim(s), target disease(s), and reported reason(s) why a single reference standard was considered not appropriate were extracted. Detailed information on the methods used for panel diagnosis was also extracted, including: panel constitution, process of decision making, available test results for the panel, blinding to the results of one or more tests, reproducibility of the panel diagnosis, and reported strengths and limitations of panel diagnosis. Discrepancies were resolved by discussion between the two reviewers. A formal level of agreement between the reviewers was not assessed. In only one paper could agreement not be reached between the two reviewers, and a third reviewer (JBR) was consulted.

Results

Search and General Study Characteristics

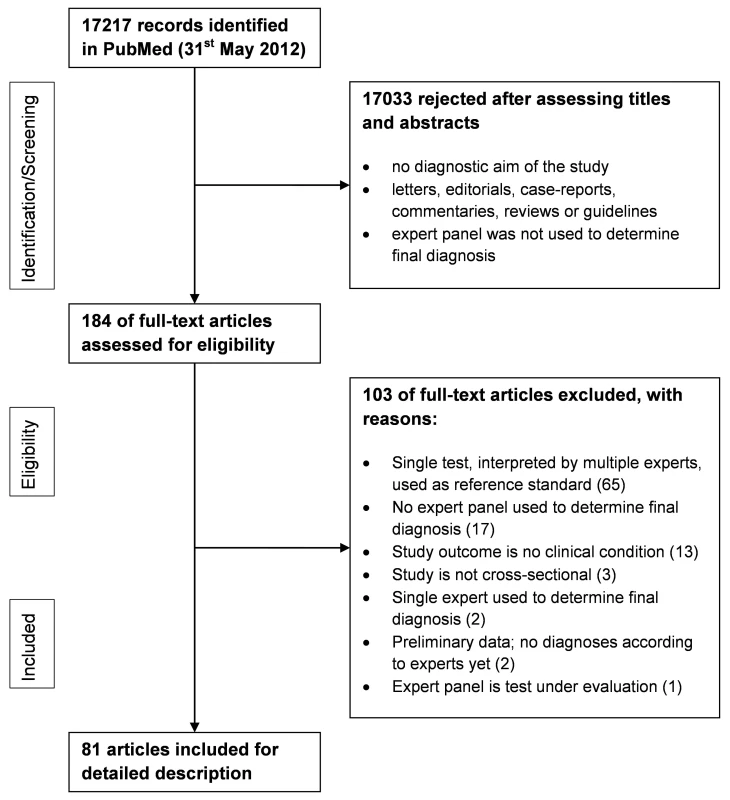

The search yielded 17,217 potentially eligible articles on May 31, 2012. Applying the inclusion criteria to the abstracts reduced the number of papers to 184. The full texts of these 184 articles were retrieved and independently judged by two reviewers. Applying the inclusion criteria to the full texts resulted in 81 included articles to address objectives 1 and 2 (Figure 2). An overall quality assessment like QUADAS-2 [11] was not performed, but relevant items, such as whether each patient received the final diagnosis in the same way, are included in the results.

Fig. 2. PRISMA flowchart of the selection of relevant papers.

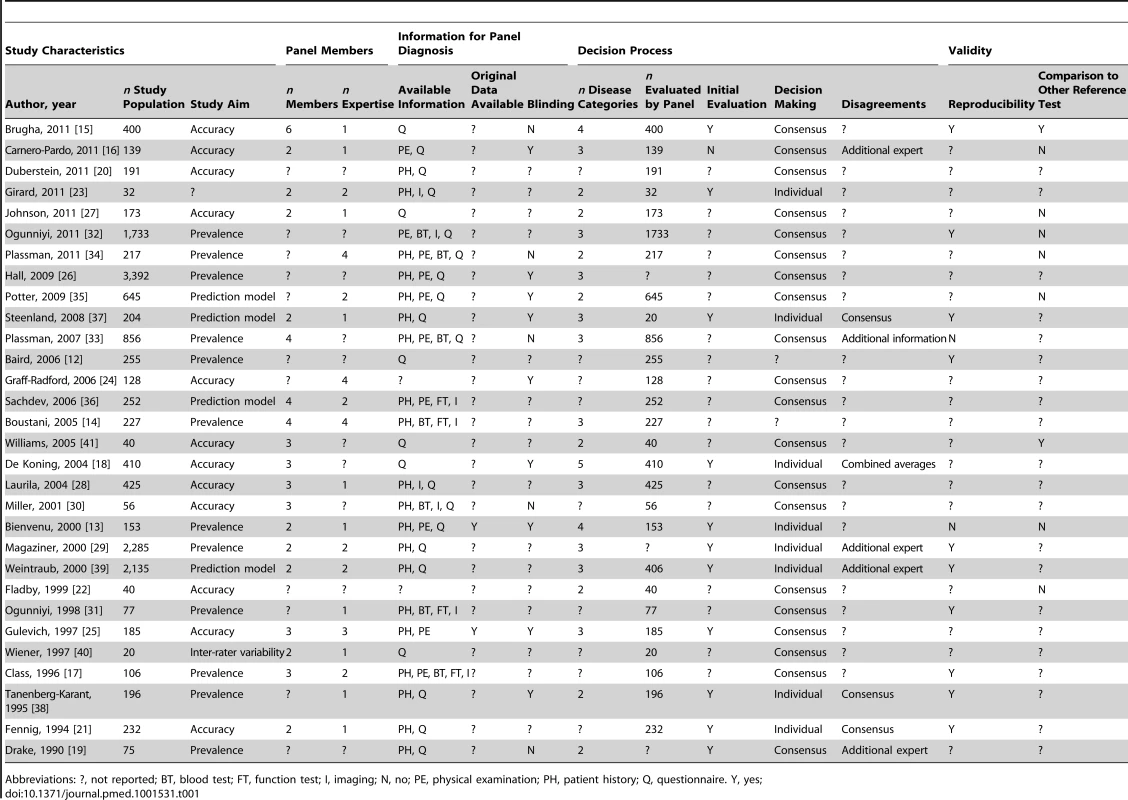

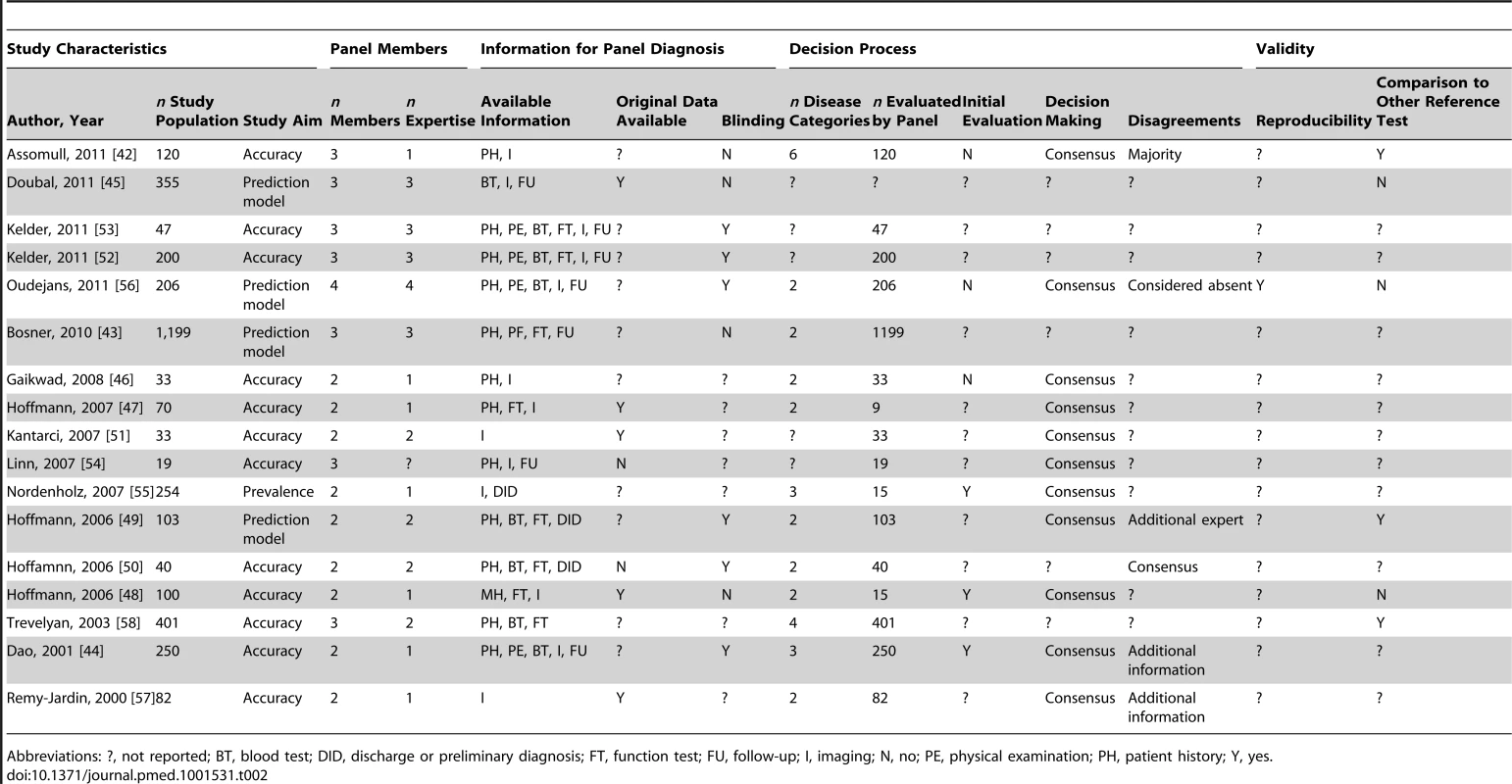

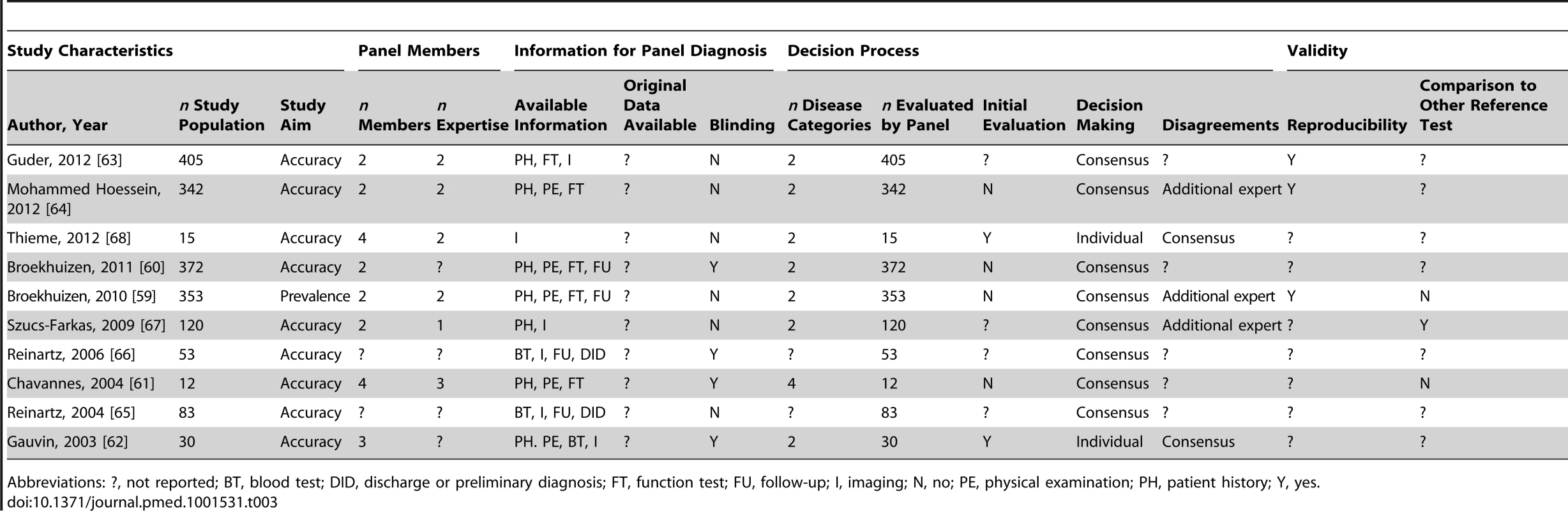

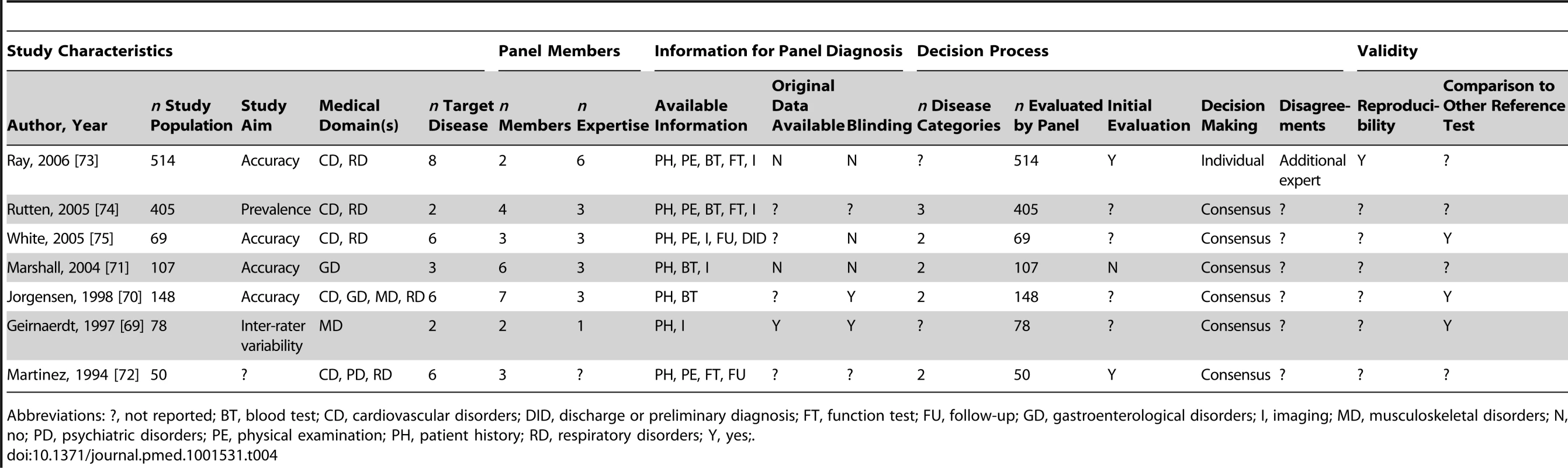

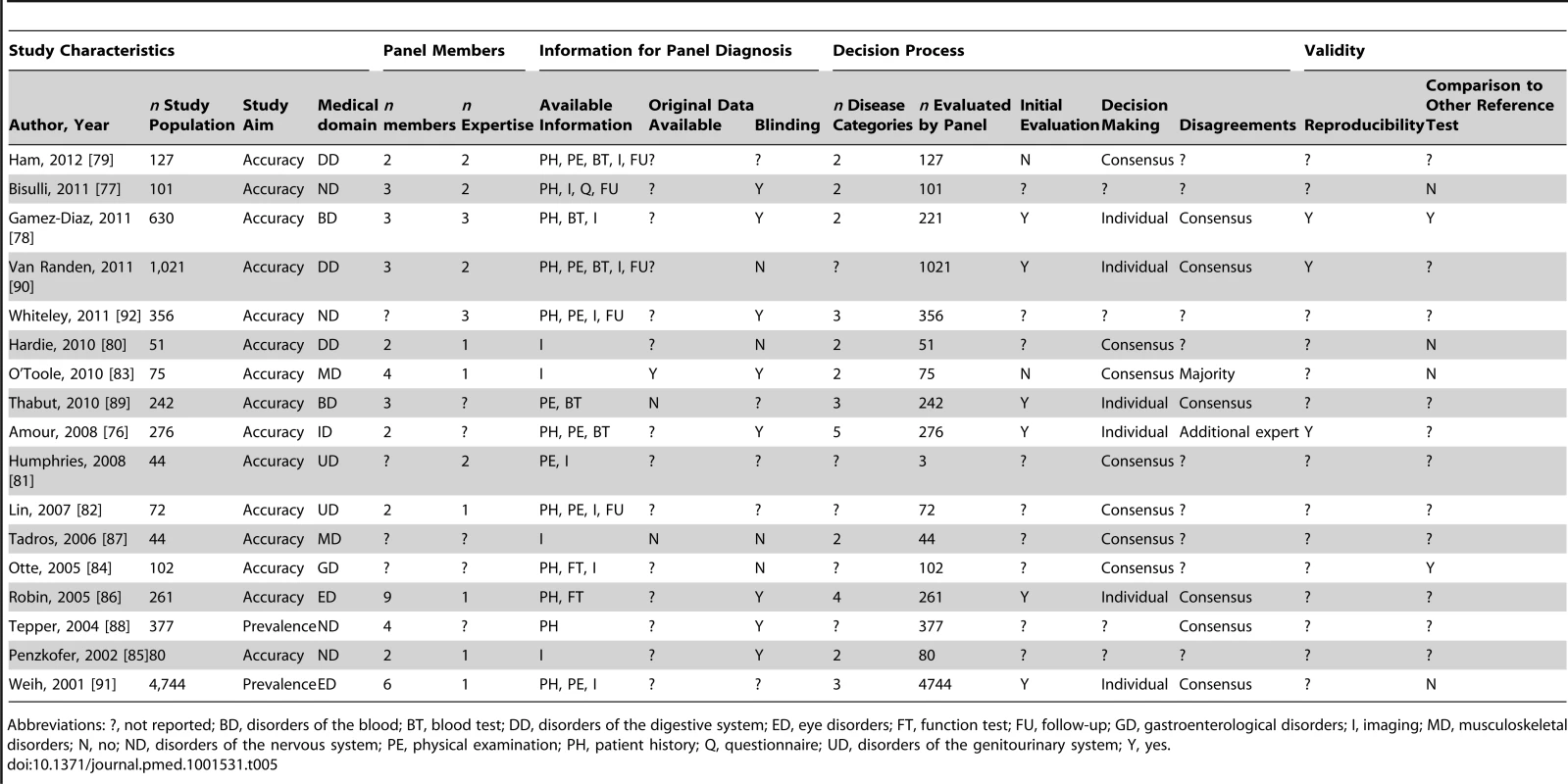

Panel diagnosis was used in a broad spectrum of medical domains, but predominantly in the field of psychiatric disorders (30 of 81 papers, 37%), half of which pertained to dementia; cardiovascular diseases (17 papers, 21%); and respiratory disorders (ten papers, 12%). In seven studies (9%), the presence or absence of multiple diseases was assessed by the panel. Study characteristics are summarized in Tables 1–5 by medical domain: Table 1 for psychiatric disorders [12]–[41], Table 2 for cardiovascular disorders [42]–[58], Table 3 for respiratory disorders [59]–[68], Table 4 for studies with multiple target diseases [69]–[75], and Table 5 for diseases from other medical domains [76]–[92]. The median number of patients undergoing panel assessment of the included studies was 153 with a range of 12 to 4,474 patients.

Tab. 1. Study characteristics of articles assessing psychiatric disorders, n = 30.

Abbreviations: ?, not reported; BT, blood test; FT, function test; I, imaging; N, no; PE, physical examination; PH, patient history; Q, questionnaire; Y, yes.

Tab. 2. Study characteristics of articles assessing cardiovascular disease, n = 17.

Abbreviations: ?, not reported; BT, blood test; DID, discharge or preliminary diagnosis; FT, function test; FU, follow-up; I, imaging; N, no; PE, physical examination; PH, patient history; Y, yes.

Tab. 3. Study characteristics of articles assessing respiratory disorders, n = 10.

Abbreviations: ?, not reported; BT, blood test; DID, discharge or preliminary diagnosis; FT, function test; FU, follow-up; I, imaging; N, no; PE, physical examination; PH, patient history; Y, yes.

Tab. 4. Study characteristics of articles assessing multiple diseases, n = 7.

Abbreviations: ?, not reported; BT, blood test; CD, cardiovascular disorders; DID, discharge or preliminary diagnosis; FT, function test; FU, follow-up; GD, gastroenterological disorders; I, imaging; MD, musculoskeletal disorders; N, no; PD, psychiatric disorders; PE, physical examination; PH, patient history; RD, respiratory disorders; Y, yes.

Tab. 5. Study characteristics of articles assessing diseases from other medical domains, n = 17.

Abbreviations: ?, not reported; BD, disorders of the blood; BT, blood test; DD, disorders of the digestive system; ED, eye disorders; FT, function test; FU, follow-up; GD, gastroenterological disorders; I, imaging; MD, musculoskeletal disorders; N, no; ND, disorders of the nervous system; PE, physical examination; PH, patient history; Q, questionnaire; UD, disorders of the genitourinary system; Y, yes.

The study aim of most papers (52 of 81 papers, 64%) was to assess the accuracy of one or more diagnostic tests. In 17 studies (21%) the aim was to determine the prevalence of a particular disease, and in seven studies the aim was to develop a multivariable diagnostic prediction model. In two articles (2%) the study aim remained unclear.

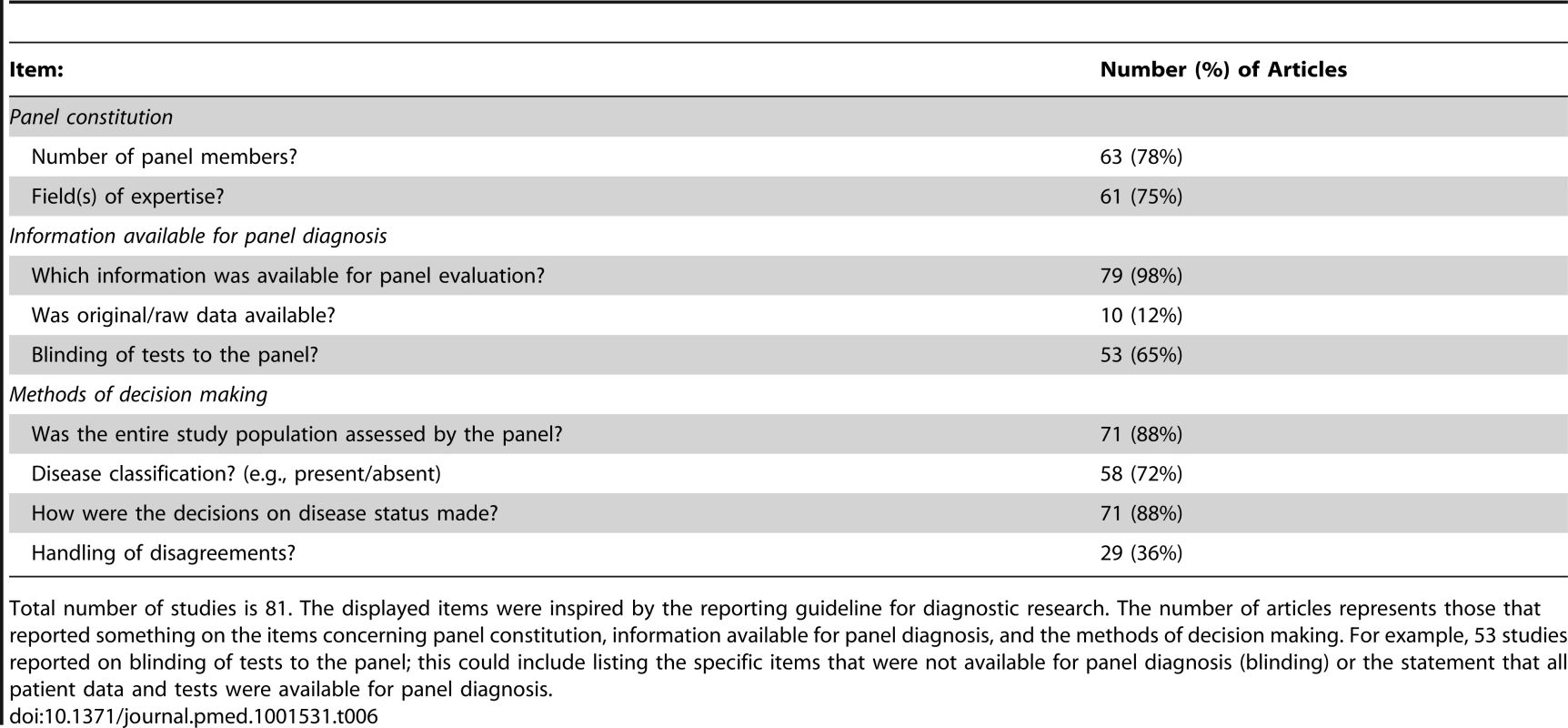

Completeness of Reporting

Table 6 displays the proportion of articles that reported on different items related to panel constitution, information available for panel evaluation, and methods of decision making. Incomplete reporting was a common finding: information on panel constitution was missing in 20 (25%) studies, information on tests result presented to the panel was missing in 28 (35%) studies, and information about the decision process within the panel was incomplete in 56 (69%) studies. Overall, key information on panel methodology, related to STARD items [10] on the reference standard, was incomplete in 67 (83%) of the 81 included studies.

Tab. 6. The proportion of articles that reported on items related to panel constitution, information available and methods of decision making.

Total number of studies is 81. The displayed items were inspired by the reporting guideline for diagnostic research. The number of articles represents those that reported something on the items concerning panel constitution, information available for panel diagnosis, and the methods of decision making. For example, 53 studies reported on blinding of tests to the panel; this could include listing the specific items that were not available for panel diagnosis (blinding) or the statement that all patient data and tests were available for panel diagnosis.

Variation in Methodology across Studies

Panel constitution

Most panels used two members (29 of 63 papers, 46%), followed by three members (18 of 63 papers, 29%). The maximum reported number of members was nine. Different fields of expertise of the panel members were represented in the majority of studies (37 of 61 papers, 61%), with a maximum of six different fields of expertise.

Available information for panel diagnosis

Items from patient history and/or physical examination were used by the panel in 80% of the studies (63 out of 79 articles; two articles did not report on this item). Imaging results were also frequently used (43 of 79 articles, 54%). Blood tests, questionnaires, and function tests (such as spirometry) were each used for evaluation by the panel in 30% of studies (24 out of 79 studies). Information collected during follow-up was used by the panel in 21 studies (27% of 79 studies) and discharge or preliminary diagnoses of the treating physician were also presented to the panel in six studies.

Format of presentation to the panel

In 79 of the 81 articles, the available information was presented to the members as paper-based summaries. In nine (11%) of the 81 included studies, test results were also presented in their original (raw) form, such as original radiographic images.

In 32 papers (60% of 53 papers), panel members were blinded (i.e., results were withheld) to one or more test results. For most of these studies (23 of 32 studies), the members were blinded to the results of a specific index test under study. Two studies used staged unblinding of the test results, in which the diagnosis was assigned twice by the panel, first on all data but without the results of the index test and later including the index test results. The other 21 articles reported that all available patient data was included for panel diagnosis.

Decision-making process by the panel

The final diagnosis was determined only as “target disease present or absent” in the majority (33 of 58 studies; 57%) of studies. In the other 25 studies, multiple categories of estimated certainty for disease classification were used, with a maximum of six categories.

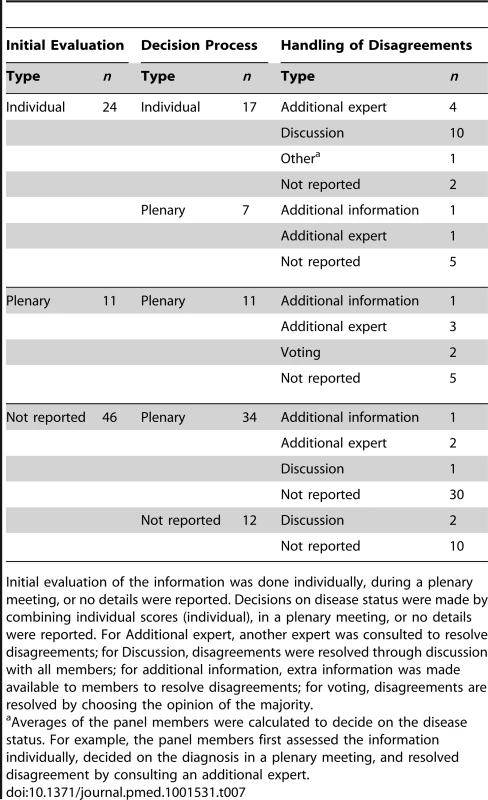

We observed many combinations of initial evaluation of the information by the panel members (individual or plenary), method of decision making by the panel, and how they handled disagreements across the panel members during the process of reaching a decision on the presence/absence of the target disease (Table 7). A plenary decision process was more frequently used than combining individual panel members' assessments into a majority decision (51 versus 17 studies).

Tab. 7. Observed combinations of the decision process used in the reviewed articles.

Initial evaluation of the information was done individually, during a plenary meeting, or no details were reported. Decisions on disease status were made by combining individual scores (individual), in a plenary meeting, or no details were reported. For Additional expert, another expert was consulted to resolve disagreements; for Discussion, disagreements were resolved through discussion with all members; for additional information, extra information was made available to members to resolve disagreements; for voting, disagreements are resolved by choosing the opinion of the majority.

In 22 studies (31% of 71 articles), only a subgroup of patients was assessed by the entire panel. This subgroup often consisted of patients who were difficult to diagnose by individual assessment by the panel members (16 of these 22 studies). A pre-specified decision rule to select such subgroups of patients was applied in three papers; two studies used disagreement between multiple index-tests to identify the patients for panel assessment and another study defined subgroups for panel assessment on the basis of the information available per patient.

Validity of panel diagnosis

Twenty-seven papers reported the reproducibility of the panel diagnosis in their study. Kappa statistics or agreement percentages were reported in 17 articles (21% of 81 articles), of which seven studies evaluated the plenary decision process and ten studies reported the reproducibility of the individual assessments.

In addition to the panel diagnosis, ten studies (12% of 81 studies) also applied alternative methods to diagnose the target disease for comparison. These methods included diagnosis according to a combination of tests (four studies), comparison to clinical follow-up (four studies), a pre-specified decision rule (one study), and a single gold standard applied only to a subgroup of patients (one study).

Discussion

Our review on the use of panel diagnoses as reference standard in diagnostic studies reveals that panel diagnoses were mainly used in studies on psychiatric, cardiovascular, or respiratory conditions. Non-reporting of the panel methodology applied was frequent as 83% of all included studies did not report on all relevant items used in methods of the panel diagnosis necessary to replicate the study. The panel constitution and decision process differed substantially between studies, ranging from two to nine panel members, with large variations in the types of expertise represented in the panel. We found 17 different combinations of the three stages in the decision-making process as displayed in Table 7.

Complete and accurate reporting is a prerequisite for judging potential bias in a study and for allowing readers to apply the same study methods. In total, only 14 (17%) papers reported complete data on key issues such as the panel constitution, the information presented to the panel, and the exact decision process to determine the final diagnosis. This under-reporting, or even non-reporting, shows that the standard of reporting of diagnostic studies should be improved. The STARD reporting guideline for diagnostic studies [10] does not include specific items on the use of panel diagnosis as reference standard. However, contrary to what one would expect, the completeness and thoroughness of reporting did not improve with time despite the publication of reporting guidelines in diagnostic research. Another problem we encountered in this review was unclear terminology. For example, the term “experts” was often used to describe the panel members. Yet little to no information was given to substantiate this claim, for instance by reporting on profession, expertise, or years of experience, and familiarity with the target disease or population of interest. Another ambiguous term was “consensus diagnosis.” It was often unclear whether the term consensus diagnosis was simply used as a synonym for panel diagnosis or whether it referred to a specific way of reaching agreement on the final diagnosis or target disease presence or absence among the panel members. Therefore, the term consensus diagnosis alone is not sufficient to describe the details of the reference standard. For example, instead of “the diagnosis was assigned in consensus,” it is more informative to describe the decision process as “the diagnosis was assigned in consensus after a group discussion.”

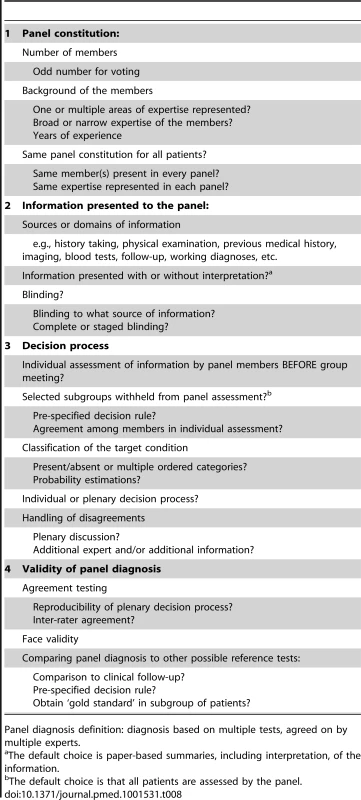

We used the key concept that reporting of research should enable replication. We therefore grouped items into four key domains: panel constitution, information presented to the panel, the decision process, and validity of the panel procedure. Using these four domains as guidance for reporting on the panel approach will aid replication of the study by others.

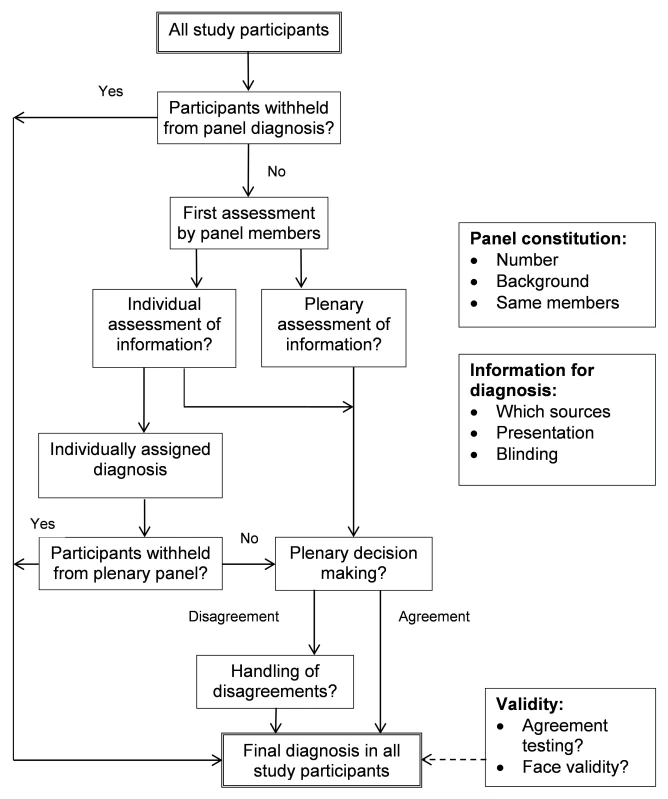

In Figure 3 and Table 8 we identify the various choices and decisions to be made before initiating a diagnostic study with panel diagnosis. We hope to encourage researchers to formally discuss these options when designing a new study rather than copying an approach from an existing study. Below, we discuss the options within each key domain based on the findings of our systematic review, supplemented by our experience (Figure 3; Table 8). We discuss these items in a cautious way as limited evidence or consensus exists on what should be considered preferred methodology for conducting a panel diagnosis. Further research into each of the decisions we have identified is needed.

Fig. 3. Flowchart of options to consider when planning and conducting panel diagnosis.

Tab. 8. Options to consider when reporting or designing a study using a panel diagnosis as reference standard.

Panel diagnosis definition: diagnosis based on multiple tests, agreed on by multiple experts.

Panel Constitution

Ideally, the same members should assess all patients to increase the reproducibility of the decision process. However, when this is not feasible, researchers can choose to have a particular member or a certain expertise to be present in each panel to help maintain a certain level of consistency. When voting is part of the decision process, an odd number of panel members should be considered. In the vast majority of studies, the panel consisted of three or fewer members, which seems low since the reason for using a panel diagnosis is that the final disease classification is not straightforward. Having more members is beneficial in avoiding incorrect decisions on the final diagnosis [93]. With the choice of panel members, one should consider whether all areas of expertise relevant to the target disease(s) are represented. While whether someone can be considered an expert is more or less subjective, reporting the area of expertise and the years of experience, as often done in inter-rater studies in imaging, provides useful information to the readers.

Information Presented to the Panel

The information presented to the panel, as well as the format in which it is presented, is largely determined by the study aim and context. Researchers should provide the rationale for their choice of information used in the panel diagnosis, including references to existing guidelines, systematic reviews, and key papers on the diagnosis of the condition of interest. This will enhance the credibility (face validity) of their results.

A paper-based summary, containing the relevant patient information and test results, is considered the standard way of presenting. However, for certain tests, providing the “raw data,” such as 3D images in the case of complex bone fractures, should be considered. The credibility of final diagnosis can be improved by including follow-up information in the panel diagnosis. A drawback of including this information is a higher chance of missing data on follow-up and heterogeneity in additional diagnostic tests during follow-up, which will often not be random and may introduce verification bias [94].

Decision Process

A disease can be classified as present or absent or can be rated using ordered categories to represent severity or certainty of diagnosis. Recording additional information on the certainty of the final diagnosis enables the researchers to perform additional analyses on the robustness of findings. Subsequent analysis could take the certainty of the final diagnosis into account, for instance by performing a weighted analysis.

The decision process itself is complex and several choices have to be made. The most commonly used options for this process are visualized in Figure S1. Individual assessment can be used to allow the panel members to read the information alone and make a preliminary diagnosis before discussion with other panel members. Also, this individual assessment can be used to define subgroups of patients that do not require evaluation by the entire panel, such as those who receive the same preliminary diagnosis from all panel members. Withholding these participants from the plenary discussions decreases the total workload for the panel members. Such subgroups can also be identified through application of a pre-defined decision rule. For example, a pre-defined combination of test results can clearly rule in or rule out disease in some patients, while the other patients need panel evaluation to determine the final diagnosis. In the plenary process, members influence each other, which can either be beneficial or harmful [93]. Finally, the proportion of cases with disagreement should be reported, together with the way the panel resolved the disagreement. More research is needed to determine if a plenary decision process is superior to an individual process, or vice versa. Procedures for resolving remaining disagreements are needed and should be formally decided upon at the beginning of the study.
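The triage step described above, in which unanimous individual assessments bypass the plenary meeting, could be organized along the following lines. This is only a sketch under stated assumptions: the function names, the patient identifiers, and the choice to return no decision when a vote is tied are hypothetical, and none of the reviewed studies is claimed to have used this exact procedure.

```python
from collections import Counter
from typing import Dict, List, Optional, Sequence, Tuple


def majority_vote(votes: Sequence[str]) -> Optional[str]:
    """Return the diagnosis chosen by most members, or None when the vote is tied."""
    if not votes:
        raise ValueError("at least one vote is required")
    ranked = Counter(votes).most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None  # tie: a pre-specified procedure must resolve the disagreement
    return ranked[0][0]


def triage(assessments: Dict[str, Sequence[str]]) -> Tuple[Dict[str, str], List[str]]:
    """Separate patients settled by unanimous individual assessment from those needing plenary review."""
    settled: Dict[str, str] = {}
    plenary: List[str] = []
    for patient_id, votes in assessments.items():
        if not votes:
            raise ValueError(f"no assessments recorded for {patient_id}")
        if len(set(votes)) == 1:
            settled[patient_id] = votes[0]
        else:
            plenary.append(patient_id)
    return settled, plenary


# Hypothetical individual assessments by a three-member panel.
assessments = {
    "p1": ["present", "present", "present"],
    "p2": ["present", "absent", "present"],
    "p3": ["absent", "absent", "absent"],
}
settled, plenary = triage(assessments)
disagreement_rate = len(plenary) / len(assessments)  # proportion to report
```

The tie case in `majority_vote` illustrates why an odd number of members is worth considering when voting is part of the process: with two members, any disagreement is a tie.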

Validity of Panel Diagnosis

Although not frequently performed, the reproducibility of a panel diagnosis is easy to assess. Inter-rater agreement can be calculated in studies with individual assessment results. For the plenary decision process, reproducibility can be determined by reassessing a sample of the patients (obviously with the panel remaining blinded to their first judgment) and comparing the agreement. By comparing the panel diagnosis to clinical follow-up or another reference standard, insight into the validity of the panel diagnosis can be gained.
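As an illustration of how inter-rater agreement might be quantified, the sketch below computes Cohen's kappa for two raters. The choice of Cohen's kappa (rather than another agreement statistic) and the ratings themselves are assumptions made for this example; the same function could be applied to a first and second plenary assessment of the same patients.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of patients")
    n = len(rater_a)
    # Observed proportion of patients on which the raters agree.
    observed = sum(1 for a, b in zip(rater_a, rater_b) if a == b) / n
    # Agreement expected by chance, from each rater's marginal category frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical individual assessments (1 = disease present, 0 = absent) for ten patients.
member_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
member_2 = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]
kappa = cohens_kappa(member_1, member_2)
```

Here the raters agree on 8 of 10 patients, chance agreement is 0.5, and kappa is therefore approximately 0.6.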

One of the authors of the included papers [62] stated that “it must be recognized that such diagnostic strategy may not be optimal. Expert opinion can be subjective and erroneous; this could lead to an overestimation or underestimation of the validity of all diagnostic methods in this study.” However, in the absence of a single gold reference test, panel diagnosis is a respected method to provide a solution. In a panel diagnosis, the tests are evaluated by multiple clinicians, and previous literature suggests that test evaluation by multiple clinicians leads to more accurate interpretation of index test results than evaluation by a single clinician [95],[96], accordingly suggesting that panel diagnosis is an acceptable method for diagnosis when a single gold standard is lacking [1],[6]. One of the included papers [71] reported “a great strength of the current study was its use of a structured consensus panel to determine a reference standard for each subject, without relying on a single test treated as the gold standard.” An advantage of panel diagnosis as opposed to composite reference standard or latent class analyses is the flexibility in the interpretation of the test results; each test result is interpreted in the context of all other information. This closely resembles clinical practice and therefore could lead to clinically relevant diagnoses [6],[7].

However, the use of panel diagnosis as reference standard also has disadvantages. The panel diagnosis approach is time and labor intensive. Also, the process is inherently more subjective and therefore results might be less reproducible than for other methods to deal with imperfect reference standards such as composite reference standard or latent class analyses. To quantify this problem, researchers could test the reproducibility of the decision process between panel members and across patients as a measure of the actual subjectivity of the panel diagnosis in the study.

Incorporation bias can be a serious threat to diagnostic studies. It refers to the situation where the results of the diagnostic tests under study (index test) are formally used when making the final diagnosis [6]. In cases of a panel diagnosis this occurs when the results of the test under study are part of the information available to the experts making the consensus diagnosis. The danger is that the results of the tests under evaluation receive too much weight in the decision-making process, leading to an overestimation of the accuracy of that test [6],[97],[98]. However, avoiding incorporation bias by withholding the index test results may in itself increase the risk of misclassification. One way to document the impact of the index test is to use staged unblinding in which the panel first classifies the disease status on the basis of all relevant information except the test under evaluation and again after revealing the index test results [6].
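One way to summarize a staged unblinding procedure is to count how often the panel's classification changes once the index test result is revealed. The sketch below is a hypothetical illustration; the function name, category labels, and data are assumptions, not a method prescribed by the reviewed studies.

```python
from collections import Counter
from typing import Dict, Sequence


def reclassification_counts(before: Sequence[bool], after: Sequence[bool]) -> Dict[str, int]:
    """Count diagnoses that changed between the blinded and unblinded panel rounds."""
    if len(before) != len(after):
        raise ValueError("both rounds must cover the same patients")
    counts: Counter = Counter()
    for blinded, unblinded in zip(before, after):
        if blinded == unblinded:
            counts["unchanged"] += 1
        elif unblinded:
            counts["absent_to_present"] += 1
        else:
            counts["present_to_absent"] += 1
    # Report every category, including those with zero patients.
    return {key: counts[key] for key in ("unchanged", "absent_to_present", "present_to_absent")}


# Hypothetical panel diagnoses for five patients (True = disease present).
blinded_round = [True, False, False, True, False]
unblinded_round = [True, True, False, True, False]
summary = reclassification_counts(blinded_round, unblinded_round)
```

A large share of changed diagnoses would suggest that the index test carries substantial weight in the panel's decision, which is the situation in which incorporation bias is of most concern.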

Alternative methods for dealing with the absence of a single gold standard are the composite reference standard [3] and latent class analysis [4],[5]. In a composite reference standard, multiple test results are combined according to a pre-specified algorithm to rule the target disease in or out. Like panel diagnoses, these decision rules provide clinically interpretable diagnoses, but unlike the panel, the decision process is transparent and the same for all patients. A downside of such a decision rule is the limited number and types of tests that can be incorporated into the decision. Latent class analysis is a statistical method in which the probability of the disease status is modeled on the basis of the index tests and other available information. However, the results are difficult to interpret clinically because the disease state is expressed as a probability rather than in a dichotomized (present or absent) fashion [4].
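
To make the idea of a pre-specified algorithm concrete, the sketch below applies one fixed rule to every patient. The rule itself (positive if test A is positive, or if tests B and C are both positive) and the names `composite_reference`, `test_a`, `test_b`, and `test_c` are purely hypothetical; a real composite reference standard would define its own component tests and combination rule in advance.

```python
def composite_reference(test_a, test_b, test_c):
    """Hypothetical fixed rule: disease present if A is positive,
    or if B and C are both positive."""
    return bool(test_a or (test_b and test_c))


# Hypothetical component test results for four patients.
patients = [
    {"id": "P1", "test_a": True,  "test_b": False, "test_c": False},
    {"id": "P2", "test_a": False, "test_b": True,  "test_c": True},
    {"id": "P3", "test_a": False, "test_b": True,  "test_c": False},
    {"id": "P4", "test_a": False, "test_b": False, "test_c": False},
]

# The same transparent rule is applied to every patient.
reference = {
    p["id"]: composite_reference(p["test_a"], p["test_b"], p["test_c"])
    for p in patients
}
print(reference)
```

Because the rule is fixed, identical test results always yield the same classification, which is the transparency the text describes. The cost is that information outside the three component tests cannot influence the result.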

To our knowledge, this is the first systematic review of the methods applied in diagnostic studies using a panel diagnosis as the reference standard. Identification of such studies through electronic searching was probably hampered by the fact that not all studies using this method report having done so in the abstract, so we likely missed some studies. We have likely missed additional papers because we searched only a single electronic database (PubMed). However, this is unlikely to have had a meaningful impact on our findings about incomplete reporting and the variation in the methodology of panel diagnoses. Completeness of the search was not the major issue for answering our research question, because the focus of our paper is on the method of panel diagnosis. For such a methodological question, a reasonably comprehensive set of papers is likely to contain the relevant variations of the methodology of interest. This is very different from systematic reviews of the effectiveness of interventions, where the main aim is to validly estimate the weighted mean from all available studies in the literature. A more extensive search might have identified some additional papers, but is unlikely to have added relevant variations in methodology beyond those already represented in the initial search. This phenomenon is known as theoretical saturation [9]. Moreover, each study identified by our search was carefully examined for the methods used in the panel diagnosis approach and the quality of reporting on these methods. We therefore expect that a thorough search of Medline, the largest database of medical papers, identifies a sufficient number of papers reflecting all methods applied in panel diagnosis.

In conclusion, an expert panel diagnosis may be applied in diagnostic studies when a single gold reference standard is absent or not feasible, and its use appears to be increasing in the medical literature. Our review revealed large variation in the methods applied as well as major deficiencies in the reporting of key features of the panel diagnosis process. To improve awareness of the options available when designing a diagnostic study with a panel diagnosis, and of how to report such studies, we provided initial guidance highlighting key options in the methodology of panel diagnosis. The results of our review may serve as a starting point for the development of formal guidelines on the methodology and reporting of panel diagnosis.

References

1. Reitsma JB, Rutjes AW, Khan KS, Coomarasamy A, Bossuyt PM (2009) A review of solutions for diagnostic accuracy studies with an imperfect or missing reference standard. J Clin Epidemiol 62: 797–806.

2. Hadgu A, Dendukuri N, Hilden J (2005) Evaluation of nucleic acid amplification tests in the absence of a perfect gold-standard test: a review of the statistical and epidemiologic issues. Epidemiology 16: 604–612.

3. Alonzo TA, Pepe MS (1999) Using a combination of reference tests to assess the accuracy of a new diagnostic test. Stat Med 18: 2987–3003.

4. Pepe MS, Janes H (2007) Insights into latent class analysis of diagnostic test performance. Biostatistics 8: 474–484.

5. Baughman AL, Bisgard KM, Cortese MM, Thompson WW, Sanden GN, et al. (2008) Utility of composite reference standards and latent class analysis in evaluating the clinical accuracy of diagnostic tests for pertussis. Clin Vaccine Immunol 15: 106–114.

6. Moons KG, Grobbee DE (2002) When should we remain blind and when should our eyes remain open in diagnostic studies? J Clin Epidemiol 55: 633–636.

7. Magaziner J, Zimmerman SI, German PS, Kuhn K, May C, et al. (1996) Ascertaining dementia by expert panel in epidemiologic studies of nursing home residents. Ann Epidemiol 6: 431–437.

8. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, et al. (2009) The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 6: e1000100. doi:10.1371/journal.pmed.1000100

9. Lilford RJ, Richardson A, Stevens A, Fitzpatrick R, Edwards S, et al. (2001) Issues in methodological research: perspectives from researchers and commissioners. Health Technol Assess 5: 1–57.

10. Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou PP, et al. (2003) The STARD statement for reporting studies of diagnostic accuracy: explanation and elaboration. Ann Intern Med 138: W1–W12.

11. Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, et al. (2011) QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med 155: 529–536.

12. Baird G, Simonoff E, Pickles A, Chandler S, Loucas T, et al. (2006) Prevalence of disorders of the autism spectrum in a population cohort of children in South Thames: the Special Needs and Autism Project (SNAP). Lancet 368: 210–215.

13. Bienvenu OJ, Samuels JF, Riddle MA, Hoehn-Saric R, Liang KY, et al. (2000) The relationship of obsessive-compulsive disorder to possible spectrum disorders: results from a family study. Biol Psychiatry 48: 287–293.

14. Boustani M, Callahan CM, Unverzagt FW, Austrom MG, Perkins AJ, et al. (2005) Implementing a screening and diagnosis program for dementia in primary care. J Gen Intern Med 20: 572–577.

15. Brugha TS, McManus S, Smith J, Scott FJ, Meltzer H, et al. (2012) Validating two survey methods for identifying cases of autism spectrum disorder among adults in the community. Psychol Med 42: 647–656.

16. Carnero-Pardo C, Espejo-Martinez B, Lopez-Alcalde S, Espinosa-Garcia M, Saez-Zea C, et al. (2011) Diagnostic accuracy, effectiveness and cost for cognitive impairment and dementia screening of three short cognitive tests applicable to illiterates. PLoS One 6: e27069. doi:10.1371/journal.pone.0027069

17. Class CA, Unverzagt FW, Gao S, Hall KS, Baiyewu O, et al. (1996) Psychiatric disorders in African American nursing home residents. Am J Psychiatry 153: 677–681.

18. de Koning HJ, de Ridder-Sluiter JG, van Agt HM, Reep-van den Bergh CM, van der Stege HA, et al. (2004) A cluster-randomised trial of screening for language disorders in toddlers. J Med Screen 11: 109–116.

19. Drake RE, Osher FC, Noordsy DL, Hurlbut SC, Teague GB, et al. (1990) Diagnosis of alcohol use disorders in schizophrenia. Schizophr Bull 16: 57–67.

20. Duberstein PR, Ma Y, Chapman BP, Conwell Y, McGriff J, et al. (2011) Detection of depression in older adults by family and friends: distinguishing mood disorder signals from the noise of personality and everyday life. Int Psychogeriatr 23: 634–643.

21. Fennig S, Craig TJ, Tanenberg-Karant M, Bromet EJ (1994) Comparison of facility and research diagnoses in first-admission psychotic patients. Am J Psychiatry 151: 1423–1429.

22. Fladby T, Schuster M, Gronli O, Sjoholm H, Loseth S, et al. (1999) Organic brain disease in psychogeriatric patients: impact of symptoms and screening methods on the diagnostic process. J Geriatr Psychiatry Neurol 12: 16–20.

23. Girard C, Simard M, Noiseux R, Laplante L, Dugas M, et al. (2011) Late-onset-psychosis: cognition. Int Psychogeriatr 23: 1301–1316.

24. Graff-Radford NR, Ferman TJ, Lucas JA, Johnson HK, Parfitt FC, et al. (2006) A cost effective method of identifying and recruiting persons over 80 free of dementia or mild cognitive impairment. Alzheimer Dis Assoc Disord 20: 101–104.

25. Gulevich SJ, Conwell TD, Lane J, Lockwood B, Schwettmann RS, et al. (1997) Stress infrared telethermography is useful in the diagnosis of complex regional pain syndrome, type I (formerly reflex sympathetic dystrophy). Clin J Pain 13: 50–59.

26. Hall KS, Gao S, Baiyewu O, Lane KA, Gureje O, et al. (2009) Prevalence rates for dementia and Alzheimer's disease in African Americans: 1992 versus 2001. Alzheimers Dement 5: 227–233.

27. Johnson S, Hollis C, Hennessy E, Kochhar P, Wolke D, et al. (2011) Screening for autism in preterm children: diagnostic utility of the Social Communication Questionnaire. Arch Dis Child 96: 73–77.

28. Laurila JV, Pitkala KH, Strandberg TE, Tilvis RS (2004) Delirium among patients with and without dementia: does the diagnosis according to the DSM-IV differ from the previous classifications? Int J Geriatr Psychiatry 19: 271–277.

29. Magaziner J, German P, Zimmerman SI, Hebel JR, Burton L, et al. (2000) The prevalence of dementia in a statewide sample of new nursing home admissions aged 65 and older: diagnosis by expert panel. Epidemiology of Dementia in Nursing Homes Research Group. Gerontologist 40: 663–672.

30. Miller PR, Dasher R, Collins R, Griffiths P, Brown F (2001) Inpatient diagnostic assessments: 1. Accuracy of structured vs. unstructured interviews. Psychiatry Res 105: 255–264.

31. Ogunniyi A, Daif AK, Al-Rajeh S, AbdulJabbar M, Al-Tahan AR, et al. (1998) Dementia in Saudi Arabia: experience from a university hospital. Acta Neurol Scand 98: 116–120.

32. Ogunniyi A, Lane KA, Baiyewu O, Gao S, Gureje O, et al. (2011) Hypertension and incident dementia in community-dwelling elderly Yoruba Nigerians. Acta Neurol Scand 124: 396–402.

33. Plassman BL, Langa KM, Fisher GG, Heeringa SG, Weir DR, et al. (2007) Prevalence of dementia in the United States: the aging, demographics, and memory study. Neuroepidemiology 29: 125–132.

34. Plassman BL, Langa KM, McCammon RJ, Fisher GG, Potter GG, et al. (2011) Incidence of dementia and cognitive impairment, not dementia in the United States. Ann Neurol 70: 418–426.

35. Potter GG, Plassman BL, Burke JR, Kabeto MU, Langa KM, et al. (2009) Cognitive performance and informant reports in the diagnosis of cognitive impairment and dementia in African Americans and whites. Alzheimers Dement 5: 445–453.

36. Sachdev PS, Brodaty H, Valenzuela MJ, Lorentz L, Looi JC, et al. (2006) Clinical determinants of dementia and mild cognitive impairment following ischaemic stroke: the Sydney Stroke Study. Dement Geriatr Cogn Disord 21: 275–283.

37. Steenland NK, Auman CM, Patel PM, Bartell SM, Goldstein FC, et al. (2008) Development of a rapid screening instrument for mild cognitive impairment and undiagnosed dementia. J Alzheimers Dis 15: 419–427.

38. Tanenberg-Karant M, Fennig S, Ram R, Krishna J, Jandorf L, et al. (1995) Bizarre delusions and first-rank symptoms in a first-admission sample: a preliminary analysis of prevalence and correlates. Compr Psychiatry 36: 428–434.

39. Weintraub D, Raskin A, Ruskin PE, Gruber-Baldini AL, Zimmerman SI, et al. (2000) Racial differences in the prevalence of dementia among patients admitted to nursing homes. Psychiatr Serv 51: 1259–1264.

40. Wiener P, Alexopoulos GS, Kakuma T, Meyers BS, Rosenthal E, et al. (1997) The limits of history-taking in geriatric depression. Am J Geriatr Psychiatry 5: 116–125.

41. Williams J, Scott F, Stott C, Allison C, Bolton P, et al. (2005) The CAST (Childhood Asperger Syndrome Test): test accuracy. Autism 9: 45–68.

42. Assomull RG, Shakespeare C, Kalra PR, Lloyd G, Gulati A, et al. (2011) Role of cardiovascular magnetic resonance as a gatekeeper to invasive coronary angiography in patients presenting with heart failure of unknown etiology. Circulation 124: 1351–1360.

43. Bosner S, Haasenritter J, Becker A, Karatolios K, Vaucher P, et al. (2010) Ruling out coronary artery disease in primary care: development and validation of a simple prediction rule. CMAJ 182: 1295–1300.

44. Dao Q, Krishnaswamy P, Kazanegra R, Harrison A, Amirnovin R, et al. (2001) Utility of B-type natriuretic peptide in the diagnosis of congestive heart failure in an urgent-care setting. J Am Coll Cardiol 37: 379–385.

45. Doubal FN, Dennis MS, Wardlaw JM (2011) Characteristics of patients with minor ischaemic strokes and negative MRI: a cross-sectional study. J Neurol Neurosurg Psychiatry 82: 540–542.

46. Gaikwad AB, Mudalgi BA, Patankar KB, Patil JK, Ghongade DV (2008) Diagnostic role of 64-slice multidetector row CT scan and CT venogram in cases of cerebral venous thrombosis. Emerg Radiol 15: 325–333.

47. Hoffmann R, Borges AC, Kasprzak JD, von BS, Firschke C, et al. (2007) Analysis of myocardial perfusion or myocardial function for detection of regional myocardial abnormalities. An echocardiographic multicenter comparison study using myocardial contrast echocardiography and 2D echocardiography. Eur J Echocardiogr 8: 438–448.

48. Hoffmann R, von BS, Kasprzak JD, Borges AC, ten CF, et al. (2006) Analysis of regional left ventricular function by cineventriculography, cardiac magnetic resonance imaging, and unenhanced and contrast-enhanced echocardiography: a multicenter comparison of methods. J Am Coll Cardiol 47: 121–128.

49. Hoffmann U, Nagurney JT, Moselewski F, Pena A, Ferencik M, et al. (2006) Coronary multidetector computed tomography in the assessment of patients with acute chest pain. Circulation 114: 2251–2260.

50. Hoffmann U, Pena AJ, Moselewski F, Ferencik M, Abbara S, et al. (2006) MDCT in early triage of patients with acute chest pain. AJR Am J Roentgenol 187: 1240–1247.

51. Kantarci M, Ceviz N, Sevimli S, Bayraktutan U, Ceyhan E, et al. (2007) Diagnostic performance of multidetector computed tomography for detecting aorto-ostial lesions compared with catheter coronary angiography: multidetector computed tomography coronary angiography is superior to catheter angiography in detection of aorto-ostial lesions. J Comput Assist Tomogr 31: 595–599.

52. Kelder JC, Cramer MJ, Verweij WM, Grobbee DE, Hoes AW (2011) Clinical utility of three B-type natriuretic peptide assays for the initial diagnostic assessment of new slow-onset heart failure. J Card Fail 17: 729–734.

53. Kelder JC, Cramer MJ, Rutten FH, Plokker HW, Grobbee DE, et al. (2011) The furosemide diagnostic test in suspected slow-onset heart failure: popular but not useful. Eur J Heart Fail 13: 513–517.

54. Linn J, Ertl-Wagner B, Seelos KC, Strupp M, Reiser M, et al. (2007) Diagnostic value of multidetector-row CT angiography in the evaluation of thrombosis of the cerebral venous sinuses. AJNR Am J Neuroradiol 28: 946–952.

55. Nordenholz KE, Zieske M, Dyer DS, Hanson JA, Heard K (2007) Radiologic diagnoses of patients who received imaging for venous thromboembolism despite negative D-dimer tests. Am J Emerg Med 25: 1040–1046.

56. Oudejans I, Mosterd A, Bloemen JA, Valk MJ, van Velzen E, et al. (2011) Clinical evaluation of geriatric outpatients with suspected heart failure: value of symptoms, signs, and additional tests. Eur J Heart Fail 13: 518–527.

57. Remy-Jardin M, Remy J, Masson P, Bonnel F, Debatselier P, et al. (2000) CT angiography of thoracic outlet syndrome: evaluation of imaging protocols for the detection of arterial stenosis. J Comput Assist Tomogr 24: 349–361.

58. Trevelyan J, Needham EW, Smith SC, Mattu RK (2003) Sources of diagnostic inaccuracy of conventional versus new diagnostic criteria for myocardial infarction in an unselected UK population with suspected cardiac chest pain, and investigation of independent prognostic variables. Heart 89: 1406–1410.

59. Broekhuizen BD, Sachs AP, Hoes AW, Moons KG, van den Berg JW, et al. (2010) Undetected chronic obstructive pulmonary disease and asthma in people over 50 years with persistent cough. Br J Gen Pract 60: 489–494.

60. Broekhuizen BD, Sachs AP, Moons KG, Cheragwandi SA, Damste HE, et al. (2011) Diagnostic value of oral prednisolone test for chronic obstructive pulmonary disorders. Ann Fam Med 9: 104–109.

61. Chavannes N, Schermer T, Akkermans R, Jacobs JE, van de Graaf G, et al. (2004) Impact of spirometry on GPs' diagnostic differentiation and decision-making. Respir Med 98: 1124–1130.

62. Gauvin F, Dassa C, Chaibou M, Proulx F, Farrell CA, et al. (2003) Ventilator-associated pneumonia in intubated children: comparison of different diagnostic methods. Pediatr Crit Care Med 4: 437–443.

63. Guder G, Brenner S, Angermann CE, Ertl G, Held M, et al. (2012) GOLD or lower limit of normal definition? A comparison with expert-based diagnosis of chronic obstructive pulmonary disease in a prospective cohort-study. Respir Res 13: 13.

64. Mohamed Hoesein FA, Zanen P, Sachs AP, Verheij TJ, Lammers JW, et al. (2012) Spirometric Thresholds for Diagnosing COPD: 0.70 or LLN, Pre- or Post-dilator Values? COPD 9: 338–343.

65. Reinartz P, Wildberger JE, Schaefer W, Nowak B, Mahnken AH, et al. (2004) Tomographic imaging in the diagnosis of pulmonary embolism: a comparison between V/Q lung scintigraphy in SPECT technique and multislice spiral CT. J Nucl Med 45: 1501–1508.

66. Reinartz P, Kaiser HJ, Wildberger JE, Gordji C, Nowak B, et al. (2006) SPECT imaging in the diagnosis of pulmonary embolism: automated detection of match and mismatch defects by means of image-processing techniques. J Nucl Med 47: 968–973.

67. Szucs-Farkas Z, Schaller C, Bensler S, Patak MA, Vock P, et al. (2009) Detection of pulmonary emboli with CT angiography at reduced radiation exposure and contrast material volume: comparison of 80 kVp and 120 kVp protocols in a matched cohort. Invest Radiol 44: 793–799.

68. Thieme SF, Graute V, Nikolaou K, Maxien D, Reiser MF, et al. (2012) Dual Energy CT lung perfusion imaging–correlation with SPECT/CT. Eur J Radiol 81: 360–365.

69. Geirnaerdt MJ, Hermans J, Bloem JL, Kroon HM, Pope TL, et al. (1997) Usefulness of radiography in differentiating enchondroma from central grade 1 chondrosarcoma. AJR Am J Roentgenol 169: 1097–1104.

70. Jorgensen F, Fruergaard P, Launbjerg J, Aggestrup S, Elsborg L, et al. (1998) The diagnostic value of oesophageal radionuclide transit in patients admitted for but without acute myocardial infarction. Clin Physiol 18: 89–96.

71. Marshall JK, Cawdron R, Zealley I, Riddell RH, Somers S, et al. (2004) Prospective comparison of small bowel meal with pneumocolon versus ileo-colonoscopy for the diagnosis of ileal Crohn's disease. Am J Gastroenterol 99: 1321–1329.

72. Martinez FJ, Stanopoulos I, Acero R, Becker FS, Pickering R, et al. (1994) Graded comprehensive cardiopulmonary exercise testing in the evaluation of dyspnea unexplained by routine evaluation. Chest 105: 168–174.

73. Ray P, Birolleau S, Lefort Y, Becquemin MH, Beigelman C, et al. (2006) Acute respiratory failure in the elderly: etiology, emergency diagnosis and prognosis. Crit Care 10: R82.

74. Rutten FH, Cramer MJ, Grobbee DE, Sachs AP, Kirkels JH, et al. (2005) Unrecognized heart failure in elderly patients with stable chronic obstructive pulmonary disease. Eur Heart J 26: 1887–1894.

75. White CS, Kuo D, Kelemen M, Jain V, Musk A, et al. (2005) Chest pain evaluation in the emergency department: can MDCT provide a comprehensive evaluation? AJR Am J Roentgenol 185: 533–540.

76. Amour J, Birenbaum A, Langeron O, Le MY, Bertrand M, et al. (2008) Influence of renal dysfunction on the accuracy of procalcitonin for the diagnosis of postoperative infection after vascular surgery. Crit Care Med 36: 1147–1154.

77. Bisulli F, Vignatelli L, Naldi I, Pittau F, Provini F, et al. (2012) Diagnostic accuracy of a structured interview for nocturnal frontal lobe epilepsy (SINFLE): a proposal for developing diagnostic criteria. Sleep Med 13: 81–87.

78. Gamez-Diaz LY, Enriquez LE, Matute JD, Velasquez S, Gomez ID, et al. (2011) Diagnostic accuracy of HMGB-1, sTREM-1, and CD64 as markers of sepsis in patients recently admitted to the emergency department. Acad Emerg Med 18: 807–815.

79. Ham H, McInnes MD, Woo M, Lemonde S (2012) Negative predictive value of intravenous contrast-enhanced CT of the abdomen for patients presenting to the emergency department with undifferentiated upper abdominal pain. Emerg Radiol 19: 19–26.

80. Hardie AD, Naik M, Hecht EM, Chandarana H, Mannelli L, et al. (2010) Diagnosis of liver metastases: value of diffusion-weighted MRI compared with gadolinium-enhanced MRI. Eur Radiol 20: 1431–1441.

81. Humphries PD, Simpson JC, Creighton SM, Hall-Craggs MA (2008) MRI in the assessment of congenital vaginal anomalies. Clin Radiol 63: 442–448.

82. Lin WC, Uppot RN, Li CS, Hahn PF, Sahani DV (2007) Value of automated coronal reformations from 64-section multidetector row computerized tomography in the diagnosis of urinary stone disease. J Urol 178: 907–911.

83. O'Toole RV, Cox G, Shanmuganathan K, Castillo RC, Turen CH, et al. (2010) Evaluation of computed tomography for determining the diagnosis of acetabular fractures. J Orthop Trauma 24: 284–290.

84. Otte JA, Geelkerken RH, Oostveen E, Mensink PB, Huisman AB, et al. (2005) Clinical impact of gastric exercise tonometry on diagnosis and management of chronic gastrointestinal ischemia. Clin Gastroenterol Hepatol 3: 660–666.

85. Penzkofer AK, Pfluger T, Pochmann Y, Meissner O, Leinsinger G (2002) MR imaging of the brain in pediatric patients: diagnostic value of HASTE sequences. AJR Am J Roentgenol 179: 509–514.

86. Robin TA, Muller A, Rait J, Keeffe JE, Taylor HR, et al. (2005) Performance of community-based glaucoma screening using Frequency Doubling Technology and Heidelberg Retinal Tomography. Ophthalmic Epidemiol 12: 167–178.

87. Tadros AM, Lunsjo K, Czechowski J, Corr P, Abu-Zidan FM (2007) Usefulness of different imaging modalities in the assessment of scapular fractures caused by blunt trauma. Acta Radiol 48: 71–75.

88. Tepper SJ, Dahlof CG, Dowson A, Newman L, Mansbach H, et al. (2004) Prevalence and diagnosis of migraine in patients consulting their physician with a complaint of headache: data from the Landmark Study. Headache 44: 856–864.

89. Thabut D, D'Amico G, Tan P, De Francis R, Fabricius S, et al. (2010) Diagnostic performance of Baveno IV criteria in cirrhotic patients with upper gastrointestinal bleeding: analysis of the F7 liver-1288 study population. J Hepatol 53: 1029–1034.

90. van Randen A, Lameris W, van Es HW, van Heesewijk HP, van Ramhorst B, et al. (2011) A comparison of the accuracy of ultrasound and computed tomography in common diagnoses causing acute abdominal pain. Eur Radiol 21: 1535–1545.

91. Weih LM, Nanjan M, McCarty CA, Taylor HR (2001) Prevalence and predictors of open-angle glaucoma: results from the visual impairment project. Ophthalmology 108: 1966–1972.

92. Whiteley WN, Wardlaw JM, Dennis MS, Sandercock PA (2011) Clinical scores for the identification of stroke and transient ischaemic attack in the emergency department: a cross-sectional study. J Neurol Neurosurg Psychiatry 82: 1006–1010.

93. Gabel MJ, Shipan CR (2004) A social choice approach to expert consensus panels. J Health Econ 23: 543–564.

94. de Groot JA, Bossuyt PM, Reitsma JB, Rutjes AW, Dendukuri N, et al. (2011) Verification problems in diagnostic accuracy studies: consequences and solutions. BMJ 343: d4770.

95. Bankier AA, Levine D, Halpern EF, Kressel HY (2010) Consensus interpretation in imaging research: is there a better way? Radiology 257: 14–17.

96. Obuchowski NA, Zepp RC (1996) Simple steps for improving multiple-reader studies in radiology. AJR Am J Roentgenol 166: 517–521.

97. Ransohoff DF, Feinstein AR (1978) Problems of spectrum and bias in evaluating the efficacy of diagnostic tests. N Engl J Med 299: 926–930.

98. Rutjes AW, Reitsma JB, Coomarasamy A, Khan KS, Bossuyt PM (2007) Evaluation of diagnostic tests when there is no gold standard. A review of methods. Health Technol Assess 11: iii, ix-51.

This article was published in the journal PLOS Medicine, 2013, Issue 10.
