Compliance with ethical rules for scientific publishing in biomedical Open Access journals indexed in Journal Citation Reports

Authors: Jiří Kratochvíl 1; Lukáš Plch 1; Eva Koriťáková 2

Authors’ workplace: University Campus Library, Masaryk University Brno 1; Institute of Biostatistics and Analyses, Masaryk University Brno 2

Published in: Vnitř Lék 2019; 65(5): 338-347

Category: Original Contributions

Overview

This study examined compliance with the criteria of transparency and best practice in scholarly publishing defined by COPE, DOAJ, OASPA and WAME in biomedical Open Access journals indexed in Journal Citation Reports (JCR). A total of 259 Open Access journals were drawn from the JCR database, and their compliance with 14 criteria of transparency and best practice in scholarly publishing was verified on their websites. Journals received penalty points for each criterion defined by COPE, DOAJ, OASPA and WAME that they failed to fulfil. The average number of penalty points was 6; 149 (57.5%) journals received ≤ 6 points and 110 (42.5%) journals ≥ 7 points. Only 4 journals met all criteria and received no penalty points. The criteria most often violated were the declaration of a Creative Commons license (164 journals), affiliation of editorial board members (116), unambiguity of article processing charges (115), anti-plagiarism policy (113) and the number of editorial board members from developing countries (99). The research shows that JCR cannot be used as a whitelist of journals that comply with the criteria of transparency and best practice in scholarly publishing.

Keywords:

biomedical journals – ethical rules of scientific publishing – Journal Citation Reports – open access – predatory journals – Web of Science

Introduction

At present, research funding in the European Union from the Horizon 2020 programme and public funds in Australia, Canada, Great Britain, and the United States comes on the condition of publishing the research results openly, either through self-archiving or publishing in Open Access (OA) journals [1,2]. This condition is fulfilled especially by publishing in institutional repositories, and a number of authors publish in OA journals with the aim of improving their professional prestige and citation rates [3]. However, with regard to the current problem of predatory journals [4–6] there is a need to choose such an OA journal which complies with the criteria of transparency and best practice in scholarly publishing (hereinafter “the criteria of best practice”) set by the Committee on Publication Ethics (COPE), Directory of Open Access Journals (DOAJ), Open Access Scholarly Publishers Association (OASPA) and the World Association of Medical Editors (WAME) [7–10]. At present, these are world-renowned authorities referred to by authors and publishers.

The criteria of best practice include ensuring the quality of the review process, a clear determination of article processing charges (APCs), the declaration of OA and Creative Commons license (CC), as well as an international editorial board made up of experts in the respective field, correct information about the journal’s indexing primarily in databases such as Web of Science, Scopus, Medline PubMed and ERIHPLUS and their citation metrics (impact factor, SNIP, SJR, CiteScore), and transparent information about the administration of the given journal (main editor, affiliation of editorial board members, contact information of the main editor or editorial board). Unfortunately, there have been a number of cases when authors did not verify compliance with the criteria of best practice and they published in journals with poor editorial efforts or even in predatory journals, as a consequence of which these authors’ prestige and also the results of their research were questioned [4,6,11].

Despite the need to verify a journal’s compliance with the criteria of best practice [12,13], such checks are time-consuming, and authors burdened with their own research, the associated administration and teaching duties cannot be expected to perform them themselves [6,14]. A recent survey among scientists from Italy showed that, despite having doubts about the quality of some periodicals, scientists submitted their manuscripts to them [15]. In practice we have also encountered scientists who, instead of consulting on the matter beforehand, submitted an article to a journal and were unpleasantly surprised by the instructions to pay APCs and by the discovery that the periodical was not in fact indexed in Web of Science and the Journal Citation Reports (WoS/JCR). Authors therefore rely in particular on the databases WoS/JCR, Scopus, MEDLINE, and DOAJ and consider them so-called whitelists of quality periodicals. They trust journals which claim to be indexed in some of these databases [16–19]. However, as some studies have shown, all of these databases include journals which do not comply with the criteria of best practice and whose titles appear on Beall’s list of predatory journals. For example, a study from the University of Barcelona identified 39 journals in WoS and 56 journals in Scopus that were on Beall’s list [20]. By comparing Beall’s list with the content of Scopus, Macháček and Srholec [21] found that in 2015 the Scopus database listed approximately 60 000 articles (3%) published in journals included on Beall’s list. According to the aforementioned survey among Italian scientists, WoS contains 14 journals and Scopus 284 journals that were present on Beall’s list [15]. These findings are important but problematic, because they are not based on an analysis of the journals as such, but rather on a mechanical comparison of the contents of the databases with Beall’s list. This list has been questioned in the past due to, for example, Beall’s alleged bias against OA journals and his preference for journals from prominent publishing houses, especially Elsevier [22], the controversial inclusion of journals from the publisher Frontiers [23], or the generalized inclusion of journals from developing countries [24].

In order to more accurately detect journals which do not comply with the criteria of best practice in some of the above-mentioned databases, these criteria must be checked directly in the journal. Shamseer et al [12] recently compared randomly chosen titles from Beall’s list, OA journals from PubMed, and subscription periodicals and found that all three groups contain journals violating some of the criteria of best practice. However, even this study did not answer the question of whether any of these databases can be used as a whitelist, not even PubMed, which is not subject to rules as strict as those of MEDLINE [25].

Aims of the study

Under the circumstances mentioned above, and considering that scientists prefer WoS, or more precisely JCR with its impact factor, as a source of information, this study aims to verify whether biomedical OA journals indexed in these databases follow the criteria of best practice. Like Shamseer et al [12], we assessed selected criteria such as the average length of the review process, the completeness of information about the editorial board, a clear declaration of the means of OA and Creative Commons (CC) license, etc. The subject of this analysis was biomedical journals because they and their authors are most often targeted by predatory publishers [4,26]. The aim of this study, however, is not to determine whether some of the OA journals in the JCR are predatory journals, but whether they follow the criteria of best practice set by COPE, DOAJ, OASPA and WAME, and also whether authors planning to publish in an OA journal with an impact factor can use the contents of JCR as a whitelist.

Methods

Defining the criteria

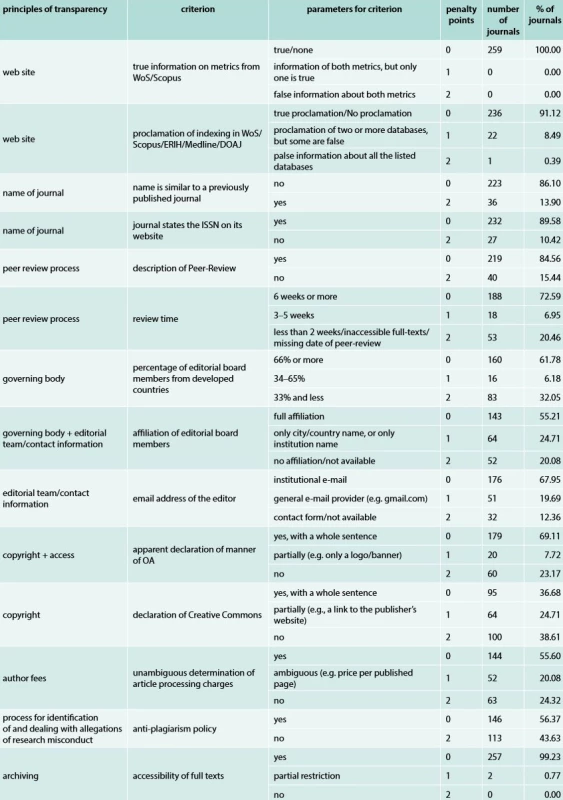

COPE, DOAJ, OASPA and WAME define 16 principles of transparency which should be followed by the publishers and editors of journals [27]. For the purpose of our study, we took up these principles and, according to their descriptions, defined a total of 14 criteria based on 10 of the 16 principles of transparency (table 1). While defining the criteria we adhered to the requirements specified in each principle; e.g. the Review Time and Description of Peer-Review criteria were applied when evaluating the Peer Review Process principle (which requires a clear designation of reviewed articles and the course of the peer-review process). Other principles were excluded for various reasons. Ownership and management information is redundant because the name of a journal’s publisher is already included in each JCR record. Conflict of interest can be declared but is unverifiable for us, as the responsibility for avoiding it lies especially with the authors. Advertising is out of our scope because we evaluate criteria strictly related to the publishing process, management, financial model or contact details and not matters concerning promotion and advertisement. Periodicity is questionable due to the current trend of publishing articles immediately instead of binding them to issues (e.g. BMC journals). Direct marketing is unverifiable because we have no access to information sent to contractors. The 14 criteria are rated on a scale of either 0–1–2 or 0–2: 0 points were assigned for fulfilment of the criterion and 1 or 2 points as a penalty for partial or complete non-compliance. A journal violating all the criteria of best practice could thus receive a maximum of 28 penalty points.

Table 1. Summary of criteria with the amount of penalty points and the number of journals which obtained them
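The penalty scheme described in this section can be illustrated with a short sketch. The Python code below is not the tooling used in the study (the findings were recorded manually); the criterion names and the split between 0–1–2 and 0–2 scales are placeholders, chosen only so that 14 criteria yield the stated maximum of 28 points.

```python
# Illustrative sketch only. Criterion names and the assignment of scales
# are hypothetical placeholders, not the actual content of Table 1.
ALLOWED_SCALES = {(0, 1, 2), (0, 2)}


def total_penalty(scores, scales):
    """Sum the penalty points of one journal after validating each score."""
    total = 0
    for criterion, points in scores.items():
        scale = scales[criterion]
        if scale not in ALLOWED_SCALES:
            raise ValueError(f"unknown scale for {criterion}: {scale}")
        if points not in scale:
            raise ValueError(f"{points} is not allowed for {criterion}")
        total += points
    return total


def max_penalty(scales):
    """Worst case: every criterion receives its highest penalty."""
    return sum(max(scale) for scale in scales.values())


# 14 hypothetical criteria, alternating between the two scales.
example_scales = {
    f"criterion_{i}": ((0, 1, 2) if i % 2 else (0, 2)) for i in range(1, 15)
}
fully_compliant = {name: 0 for name in example_scales}

print(max_penalty(example_scales))                      # 28
print(total_penalty(fully_compliant, example_scales))   # 0
```

Because both scales top out at 2, the maximum does not depend on which criteria use which scale.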

Besides the criterion “Name is similar to a previously published journal”, the criterion “Journal states the ISSN on its website” is also used, although according to Harzing and Adler [16] the use of an ISSN does not guarantee the quality of a journal because an ISSN may be easily assigned. An ISSN is nevertheless important for unambiguously identifying a journal, as shown by cases of two journals with an identical name (e.g. the Japanese journal Biomedical Research with pISSN 0388–6107 and eISSN 1880–313X vs the British journal Biomedical Research with pISSN 0970–938X and eISSN 0976–1683).

Other important criteria are citation metrics (“True information on metrics from WoS/Scopus”) and a journal’s indexing in the Web of Science and Scopus (“Proclamation of indexing in WoS/Scopus/ERIH/Medline/DOAJ”), which motivate authors to publish in journals included in these databases for reasons of professional prestige and keeping records of citation impact [3]. The length of peer-review (“Review time”) also helps determine the quality of the journal, i.e. an unusually quick peer-review process casts doubt on the quality of the journal [11,28]. A predominance of editorial board members from developing countries (“Percentage of editorial board members from developed countries”) can, according to the OECD, be a cause of concern because of the possible low level of their expertise due to the lower economic and technological level of development of these countries [5,16,19,29,30].

Compliance with the criteria was first examined on the page of the journal’s website where the information would be expected and, if not found there, on its other pages (e.g. if an e-mail address was not found on the page “Contact”, other pages such as “About us”, “Author Guidelines”, etc. were checked). Only two criteria required a special approach: the length of peer-review and the similarity of the journals’ names. The length of peer-review was determined from the time between the received date and the accepted date in 10 randomly chosen articles in the current issue of a journal, while the common 6-week period of peer-review [28] was divided equally and evaluated on the scale 0–1–2 (0 = 6 weeks or more, 1 = 3–5 weeks, 2 = 2 weeks or less). When verifying the criterion “Name is similar to a previously published journal”, penalty points were assigned to the journal when, after entering the name of the examined journal into Google, a journal with a similar name appeared on the first page of results. This approach was chosen on the assumption that a user would primarily search via the most widely used search engine.
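The review-time scale can be sketched in code as follows. Several details are assumptions, not statements from the study: durations are counted in days, any duration between 2 and 6 weeks is scored 1, missing dates are scored 2, and the sampled articles of a journal are summarized by their median.

```python
from datetime import date
from statistics import median


def review_days(received, accepted):
    """Days between the received date and the accepted date of an article."""
    return (accepted - received).days


def review_time_penalty(days):
    """Map a review duration to the 0-1-2 scale.

    Assumed boundaries: >= 42 days -> 0, 15 to 41 days -> 1, <= 14 days -> 2.
    A missing duration (None) is scored 2, which is also an assumption.
    """
    if days is None:
        return 2
    if days >= 42:
        return 0
    if days > 14:
        return 1
    return 2


def journal_review_penalty(durations):
    """Score a journal from its sampled articles (median is an assumption)."""
    known = [d for d in durations if d is not None]
    if not known:
        return 2
    return review_time_penalty(median(known))


days = review_days(date(2017, 1, 1), date(2017, 2, 12))
print(days, review_time_penalty(days))       # 42 0
print(journal_review_penalty([10, 50, 60]))  # 0
```

Taking the median keeps a single unusually fast or slow article from deciding the score of the whole journal; a stricter reading of the method could use the minimum instead.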

Journals selection

When preparing a list of OA journals for analysis, on March 8, 2017 we exported from JCR a list of 4 428 journals that are assigned to one of the biomedical disciplines or a related field, such as biochemistry, biophysics, biotechnology, etc. We compared this list according to ISSN with the content of the database Directory of Open Access Scholarly Resources (ROAD) administered by the International Centre for ISSN and UNESCO [31]. In this way we identified OA journals, because OA journals in the JCR do not always have the attribute of OA stated correctly. The original list of JCR was thus reduced to 620 OA journals from which we excluded journals marked with SEAL (130 journals) and a green tick (215) based on the comparison with the database DOAJ [32]. These labels were given by the administrators of DOAJ to journals complying with the criteria of best practice. DOAJ is considered by organizations such as OASPA and WAME to be a trustworthy database of OA journals [33,34].
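The selection steps amount to set operations on ISSNs, which can be sketched as below. The function names and the three sample ISSN lists are hypothetical, and the sketch simplifies by treating each ISSN as one journal, whereas a real journal may carry both a print and an electronic ISSN.

```python
def normalize_issn(issn):
    """Strip hyphens or en dashes and unify case, e.g. '0970–938x' -> '0970938X'."""
    return issn.replace("-", "").replace("–", "").strip().upper()


def select_candidates(jcr_issns, road_issns, doaj_labelled_issns):
    """Keep JCR journals found in ROAD, then drop those already labelled by DOAJ."""
    jcr = {normalize_issn(i) for i in jcr_issns}
    road = {normalize_issn(i) for i in road_issns}
    labelled = {normalize_issn(i) for i in doaj_labelled_issns}
    open_access = jcr & road          # OA journals identified via ROAD
    return open_access - labelled     # exclude SEAL / green-tick journals


# Hypothetical miniature input, not data from the study.
candidates = select_candidates(
    ["0388-6107", "1880-313X", "0970-938X"],
    ["0388–6107", "0970-938x"],
    ["0970-938X"],
)
print(candidates)  # {'03886107'}

# The counts reported in the text are consistent with this funnel.
print(620 - 130 - 215)  # 275
```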

In this way we created a list of 275 journals, on the websites of which we manually verified the information relevant for the established criteria (table 1) in the period between March 26 and May 24, 2017. Later, between December 11 and 13, 2017, the description of the peer-review process and the anti-plagiarism policy were examined. Unlike the aforementioned studies [12,15,20,21], which compared the content of databases with Beall’s list, we verified compliance with the individual criteria of best practice manually. Due to the time-consuming nature of the analysis (20–30 minutes per journal), each journal was checked by one librarian, while another librarian subsequently verified the journals in which a breach of criteria was ascertained. Corrections were necessary only for the following criteria: True information on metrics from WoS/Scopus (1 correction), Anti-plagiarism policy (8 corrections) and Description of peer-review process (5 corrections). Findings from the journals’ websites were recorded as points in Google Forms, which made recording during the inspection easier; those interested can trace the state of the journals’ websites at the time of inspection in the Internet Archive (appendix A [47]). Based on data from JCR, we added to the resulting table information about each journal’s publisher for the purpose of finding publishers adhering to the criteria of best practice. In order to better illustrate the penalty points received by the well-known publishers, we visualized the journals in a scatter plot created in the statistical software R, version 3.4.1.

Results

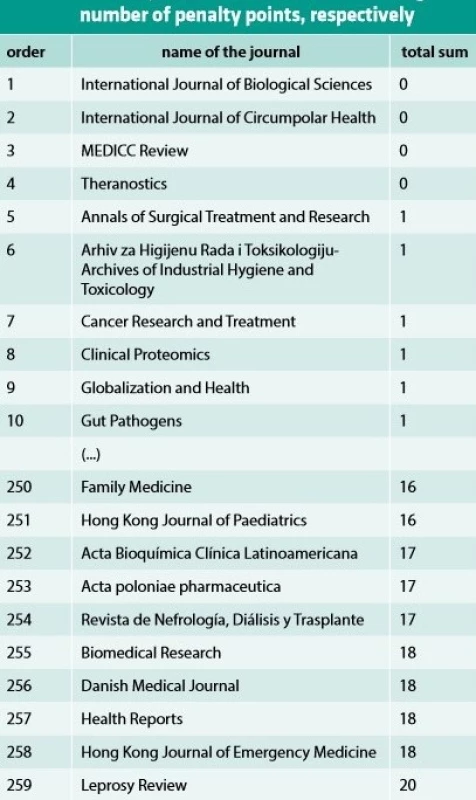

Of the 275 journals, 259 were analyzed, because 13 of the remaining 16 were not in fact OA journals and 3 had ceased publication. Of the 259 analyzed journals, only International Journal of Biological Sciences, International Journal of Circumpolar Health, MEDICC Review and Theranostics met all the criteria, while Leprosy Review received the most penalty points (20) (table 2). The average number of penalty points per journal was 6; 149 (57.5%) journals received 6 points or fewer and 110 (42.5%) journals 7 or more. 246 (95.0%) journals received no more than half of the possible penalty points (14 or fewer out of 28), while 13 (5.0%) journals received 15 or more points out of the possible maximum of 28.
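The shares reported in this paragraph can be re-derived from the raw counts. The helper below is a minimal check written for this purpose (the function name is ours, not part of the study); it rounds to one decimal place, as the article does.

```python
TOTAL_ANALYZED = 259  # journals analyzed, as reported in the text


def share(count, total=TOTAL_ANALYZED):
    """Percentage of `total` represented by `count`, rounded to one decimal."""
    return round(100 * count / total, 1)


# Journals with at most 6 points vs. 7 or more points.
print(share(149), share(110))   # 57.5 42.5
# Journals with at most 14 points vs. 15 or more points.
print(share(246), share(13))    # 95.0 5.0

# Each pair of groups partitions the analyzed sample.
print(149 + 110 == TOTAL_ANALYZED, 246 + 13 == TOTAL_ANALYZED)  # True True
# The sample itself: 275 listed minus 13 non-OA and 3 discontinued journals.
print(275 - 13 - 3)             # 259
```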

Table 2. The ten journals with the lowest and highest number of penalty points, respectively

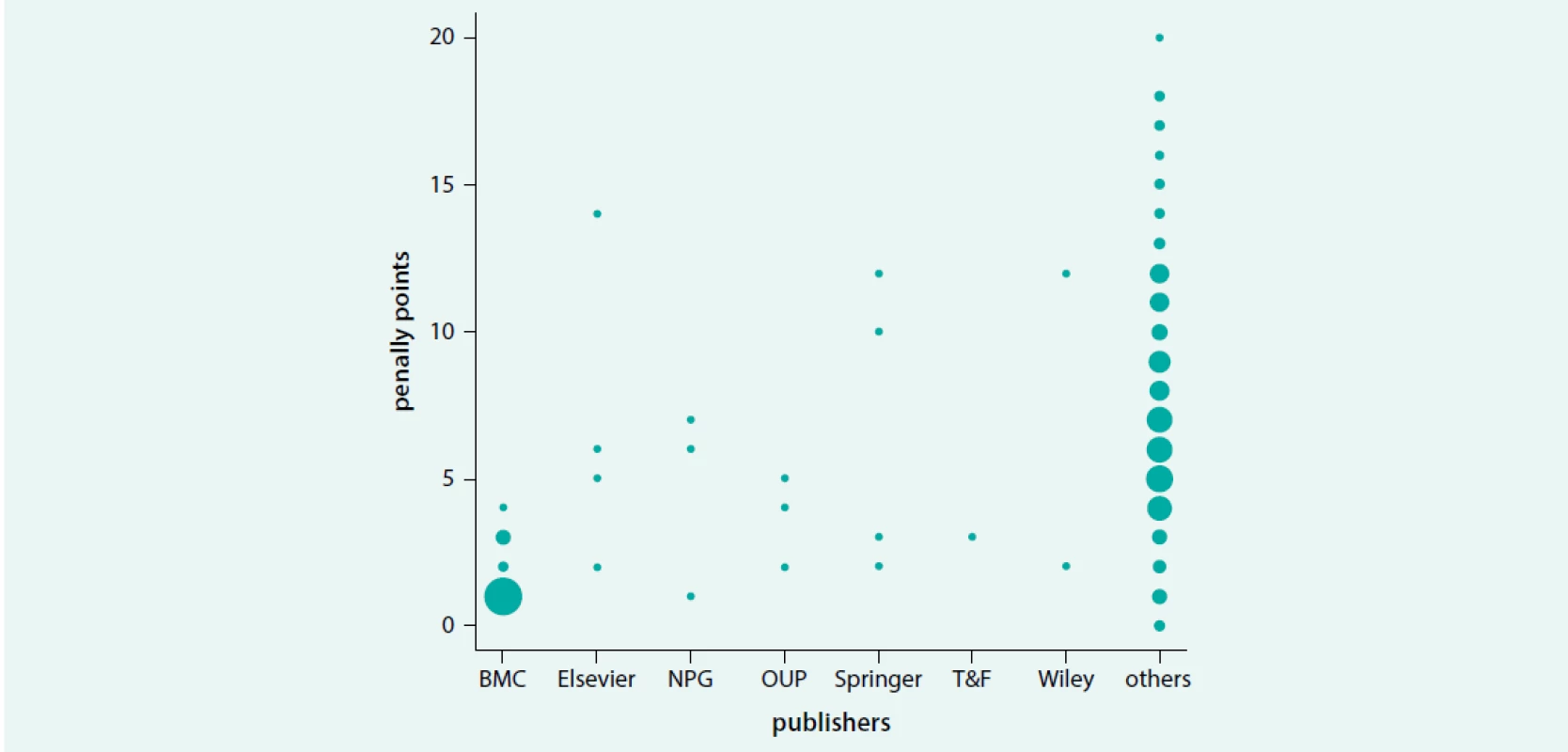

An overview of the penalty points received by journals from the well-known publishers compared to other publishers is shown in figure 1. The figure indicates that none of the journals from the well-known publishers received more than half of the possible penalty points. However, four journals received more than the average number of penalty points: one journal from Elsevier (Bulletin de L’Académie Nationale de Médecine – 14 points), two journals from Springer (AAPS Journal – 12 points, Indian Pediatrics – 10 points), and one journal from Wiley (World Psychiatry – 12 points). The best result was obtained by BMC, as most of the journals from this publisher (33 out of 46) had only 1 penalty point and the 13 other journals had 4 or fewer penalty points.

Figure 1. Overview of penalty points obtained by journals from the well-known publishers. The size of the dots corresponds to the number of journals with the given number of penalty points

NPG – Nature Publishing Group, OUP – Oxford University Press, T&F – Taylor & Francis

The criteria most often violated were: declaration of CC (164 journals, 63.3%), complete affiliation of editorial board members (116, 44.8%), unambiguously stated APCs (115, 44.4%), anti-plagiarism policy (113, 43.6%) and prevalence of editorial board members from developed countries (99, 38.2%). Although OA journals were analyzed, 60 (23.2%) did not clearly state their OA publishing model. Two journals (Noise & Health and Acta Endocrinologica) did not offer complete access to full texts. Noise & Health offers articles in HTML format for free, but access to the PDF format requires paying a per-article fee of $ 20. Moreover, when checking the length of peer-review, a non-functional link to the article “Effect of Filters on the Noise Generated by Continuous Positive Airway Pressure Delivered via a Helmet” (2017, vol. 9, iss. 86) was found. Acta Endocrinologica makes articles accessible only to users who have registered on the journal’s website, which is free of charge.

Ninety-five (36.7%) journals declared the CC license terms on their websites, while 64 (24.7%) journals stated the license terms on the website of their publisher and 100 (38.6%) did not state license terms at all. An anti-plagiarism policy was stated by 146 (56.4%) journals either as a declaration that delivered manuscripts are checked with plagiarism detection software, by adhering to the criteria of best practice set by COPE or WAME, or at least by a commitment to reject plagiarized documents. By contrast, 113 (43.6%) journals did not state any anti-plagiarism policy.

The contact information of the editor-in-chief was stated by 176 (68.0%) journals, while 51 (19.7%) titles provided only the contact information of the publisher and 32 (12.4%) journals did not provide any contact information. With regard to the affiliation of the editorial board members, 143 (55.2%) journals provided complete data, while 64 (24.7%) gave only the name of the institution or city/country, and 52 (20.1%) stated no such information. Almost a third of the journals (83) had 33% or fewer people from developed countries on their editorial boards [30]. Moreover, these journals violated the criteria of best practice more often: on average they received 10 penalty points, while journals with 66% or more members from developed countries received on average 4 penalty points. The situation was similar for the 52 (20.1%) journals whose editorial board members, or at least half of them, had no affiliation mentioned (15 without an editorial board, 33 with members without affiliation and 4 with at least half of the members without affiliation). Journals stating the full affiliation of their editorial board members had on average 4 penalty points, while the above-mentioned 52 journals received 11.

Article processing charges were clearly stated by 144 (55.6%) journals, 40 of which were free of charge and 104 had an average fee of $ 1,626. On the other hand, 63 (24.3%) journals did not provide any information on APCs and 52 (20.1%) titles did not state APCs unambiguously. Journals violating this criterion also did not comply with other criteria, because they received on average 10 penalty points, while periodicals with clearly stated APCs had only 4 penalty points.

A description of peer-review was not given by 40 (15.4%) journals, while the rest of the journals provided at least a brief wording that articles sent to the editors undergo a double-blind peer review. In 53 (20.5%) journals the length of peer-review was shorter than 2 weeks, or it could not be verified due to unstated or incomplete dates of peer-review.

When examining the similarity of journals’ titles, it was found that 36 (13.9%) journals had a name similar to another one, with the name differing by a change or addition of one or two words. For example, the names World Journal of Surgical Oncology and Cell Journal resemble the European Journal of Surgical Oncology and Cell from the publisher Elsevier, while the Journal of Internal Medicine and Journal of Neurogastroenterology and Motility are similar to Internal Medicine and Neurogastroenterology and Motility from the publisher Wiley. Besides the title of the journal, the stating of an ISSN was also examined as an identifier; 27 (10.4%) journals did not provide their ISSN anywhere on their websites.

Regarding the next criterion, proclamation of indexing in databases, out of 236 journals 203 correctly stated their indexing in WoS/JCR, 161 in Scopus, 124 in MEDLINE and 105 in DOAJ. From the remaining 23 journals, 20 periodicals falsely stated their indexing in DOAJ, 2 in MEDLINE and 1 in both of these databases. As regards the citation metrics, none of the journals provided false information about the metrics used in JCR and Scopus, but 37 titles stated Index Copernicus metrics.

Discussion

As in previous studies [12,15,20,21], the results of our analysis confirmed that JCR includes OA journals which do not comply with some of the criteria of best practice in scholarly publishing. The results showed that a significant majority of the journals received less than half of the possible penalty points, but also that, with the exception of four titles, all journals failed to meet some criteria of best practice.

Declaration of OA and CC license

When examining the criteria of Declaration of OA and CC license, which are directly related to the Open Access policy, we found that 69.1% of the journals stated the nature of OA and 36.7% of them specified the CC license. By contrast, Shamseer et al observed that 95% of OA journals stated the nature of their OA and 90% of them stated the CC license [12]. This significant discrepancy is certainly caused by the different samples of analyzed OA journals, and not even a less strict evaluation of both criteria would change it. According to the OASPA, open access and copyright policies should directly form part of the instructions to authors [9], and therefore all 46 journals from BioMed Central (BMC) received one penalty point for stating the CC license on their publisher’s website instead of on their own. When assessing the criteria more moderately and counting BMC journals among those which comply with the criterion of CC license, not 36.7% but 54.1% of journals would meet this criterion. Even so, the results point to the fact that almost half of the journals publishing under an OA model do not inform the authors about their licensing terms. It is in the interest of authors to know the license terms and to have an overview of how the results of their research may be used by other authors. Therefore, it was surprising to find that the above-mentioned journals Noise & Health and Acta Endocrinologica make it complicated for users to access the full texts of their articles. Especially in the case of Noise & Health it was surprising that, despite the declaration of a CC license, this journal provides free access only to full texts in HTML format, while access to the PDF version requires payment of a fee.

Peer-review process

Similarly, some journals were not transparent in providing information on the peer-review process, although this is a generally recommended criterion of best practice [27]. The results showed that 40 (15.4%) journals do not provide a description of peer-review, not even a brief notice of double-blind peer-review. Such a notice should guarantee independent evaluation of the articles by reviewers without any intervention on the part of the publisher’s owners [9]. In this connection, we found that 12 of these 40 journals do not state in their articles the dates of the peer-review process, especially the received date and the accepted date. Therefore, the authors and readers of these journals do not have any information on the progress of the peer-review and its quality. It is thus not surprising that these 12 journals received a significantly higher average number of penalty points (14) than the average (6) of all the periodicals.

With regard to the criteria of best practice and especially transparency of peer-review, it is necessary to require the publication of the above-mentioned dates of the progress of peer-review. In this era of electronic communication between editors and authors, these dates can be easily recorded and therefore also published in articles. Yet, our analysis showed that 53 (20.5%) journals either do not provide these dates at all, or state them only incompletely (e.g. only the accepted date, or month and year). In fact, a peer-review lasting usually 6 weeks shows the care which both editors and authors devote to the manuscript [28,29,35]. Besides the above-mentioned 53 journals without peer-review dates, we found 18 (6.9%) journals in which the majority of articles underwent a review process shorter than 6 weeks. It was surprising that titles published by prestigious publishing houses or their daughter companies were also among journals with short peer-review or none at all (11 journals from Medknow, Bulletin de L’Académie Nationale de Médecine from Elsevier, World Psychiatry from Wiley, Genome Biology and Evolution from Oxford University Press). One could certainly argue that these findings are based merely on the number of days, that the scientific quality of the examined articles is not reflected, and this quality itself may have sufficed for the editor to accept the article. If that is the case, this should be clearly stated and justified next to the article so that readers and potential authors have no doubts about the quality of the work of both the editor and editorial board. In other words, an open peer-review process with the published assessment of the reviewers and any possible responses from the authors would be completely transparent. Unfortunately, our analysis revealed that from the 165 publishers of the journals analyzed, open peer-review is only performed by BMC (its 46 journals received on average 1.5 penalty points). 
Open peer-review is not, of course, an automatic guarantee of a journal’s quality, but it serves as an impulse for improving the editorial efforts and, consequently, boosts the professional quality of the journal [11]. At the same time, open peer-review can motivate authors to draw up their manuscripts more thoroughly in order to avoid their reputation being threatened by reviewers’ comments on trivial mistakes in their article. This will of course save editors a lot of work when returning the articles for revision and reviewers will not have to read so many articles with deficiencies. Furthermore, publishers can thus ensure that the author has no doubts about a possible bias on the part of any of the reviewers.

The antiplagiarism policy

Like the progress of peer-review, the anti-plagiarism policy of a journal must be transparent. However, we found that almost half (113) of the analyzed journals do not provide any information about this policy on their websites. The rest of the titles either state that an article will be automatically rejected if it proves to be plagiarized or, even better, declare that they use plagiarism detection software. Surprisingly, periodicals from well-known publishers were also among the journals with no anti-plagiarism policy: World Psychiatry and Journal of Cachexia, Sarcopenia and Muscle from Wiley, Molecular Therapy from Nature Publishing Group, and 17 journals from the publisher Medknow, whose parent company is Wolters Kluwer. The high percentage of journals without an anti-plagiarism policy is surprising, as there is a risk that publishers create fertile ground for authors to repeatedly publish the same results of their research or unoriginal work.

Editorial boards

In connection with the anti-plagiarism policy, the importance of the professional background of journals represented by their editorial boards emerges. If members of the editorial board are recruited from experts in the respective discipline, then not only will their professional knowledge reduce the risk of publishing plagiarized work, but the quality of the professional evaluation of submitted articles will also be secured. Therefore, journals not revealing the affiliation of their board members pose a problem, because readers and authors cannot unambiguously identify the individual members and be assured of their expertise. While Shamseer et al [12] found complete affiliation in 88% of journals, in our set only 55.2% of periodicals provided this information. Of all 259 analyzed journals, nearly a third (83) had an editorial board containing 33% or fewer people from developed countries [30]. Currently, a majority of people from developing countries on an editorial board is a cause of concern about low quality of editorial work due to the lower economic and technological level of development in those countries [5,16,19,29]. Harzing and Adler [16], however, assume that under conditions of globalization, the number of representatives from developing countries on the editorial boards of OA journals will increase over time. This is certainly a correct course for the professional development of editorial board members in the field of scientific publishing, but at the same time publishers from developing countries must make efforts to recruit people from developed countries onto their editorial boards. Our findings indicate that a larger number of violations of publishing rules occur in journals which have more than 66% of people from developing countries on their editorial boards. Against the overall average of 6 penalty points, journals with a majority of people from developing countries received an average of 10 points, and titles with a majority of people from developed countries received 4 points.

When considering the editorial board, the possibility of contacting the editorial board through the editor-in-chief must be made available to authors [7,9,34,36]. It turned out, however, that 32 (12.4%) journals do not provide this contact information and 51 (19.7%) titles provide only the contact information of their respective publisher. Overall, a third of the journals do not allow potential authors to contact the editorial staff directly. Nevertheless, it is in the interest of the publisher itself to provide contact information for editorial staff, because authors may consult editors on their manuscript directly before sending it in, and in this way they can eliminate the risk of the editorial staff rejecting the article and they can shorten the time between the writing of the article and its publishing by avoiding redundant revisions to the manuscript.

Article processing charges

When examining information on APCs, it became apparent that 115 (44.4%) of the 259 analyzed journals either do not mention the amount of APCs at all or define them ambiguously, for instance by a calculation based on the number of pages in the publisher’s final version of the article. Although calculation of APCs according to the number of pages is commonly used in OA journals [37], it is an ambiguous procedure, because authors cannot influence the subsequent typesetting of the article and therefore the price derived in this way. Given that ambiguously stated APCs are today associated with predatory journals [16,38], it is in the interest of publishers to define their APCs very clearly so that they do not risk potential authors interpreting ambiguous APCs as a sign of a predatory journal.

Citation metrics and indexation in databases

Violations of best practice in the criteria of citation metrics and indexation in databases may also be confusing, especially for authors. Although these criteria are specified only by WAME [10,39], and in the case of citation metrics also by DOAJ [36], they are important attributes of a journal for authors. Authors are encouraged by their institutions to publish in journals indexed in WoS and Scopus [15,17,40], and they are therefore interested in a journal’s citation metrics and indexing in databases when choosing a journal for publication. Although none of the analyzed journals misrepresented their indexing in JCR and WoS/Scopus, 23 journals from 21 publishers (twice Medknow) gave untrue information on being indexed in MEDLINE and DOAJ. This may have two causes: either publishers intend to confuse authors and attract them to publish in their journals, or they fail to keep information about their journals up to date. In any event, by failing to meet this criterion publishers harm the reputation of their journals and run the risk that authors will not be interested in them. Moreover, some publishers connect their journals with the database Index Copernicus (IC) and its metric Index Copernicus Value (ICV), which are considered dubious [12,38]. We noted that use of the ICV metric was declared by 8 (3.1%) journals and indexation in the IC by 45 (17.4%) journals (of which 14 titles are published by Medknow). Although the ICV and IC were allegedly created within a project financed from European Union funds [41], this project cannot be found in the EU database [42], the name of the ICI Journals Master List imitates the Master Journal List from WoS [43,44], and the method for computing the ICV is controversial. According to this method, a journal may reach a maximum of 100 points in the overall evaluation, yet the total number of points that can be obtained in the categories Quality, Stability, Digitization, Internationalization of the journal and Expert assessment is 127 [45]. Given that these details are publicly available on the IC website, it is surprising that some publishing houses continue to state IC and ICV and risk a loss of trust because they have insufficiently verified the source in which they index their journals.

Similarity between journals’ titles

Last but not least, a similarity between journals’ titles may be confusing for authors, because they can mistake a prestigious title complying with the criteria of best practice for another one with lower editorial quality. During the analysis, we found that 36 journals have a name similar to that of another title. At present, imitation of names is interpreted as an effort by a journal to confuse authors and entice them to publish in it, and thus as non-compliance with best practice criteria [19,38]. Walt Crawford, however, questions this, because in the case of highly specialized journals using a completely original name may not always be possible [22]. This was also confirmed in our analysis, in which we discovered similarities in the names of journals that have been published for decades (appendix B [47]). With regard to our method of verifying the similarity of journals’ names, our results may be questioned, but on the other hand this is a criterion defined by COPE, DOAJ, OASPA and WAME and the chosen method is the easiest solution for users. We have therefore checked what would happen were this criterion excluded; only the journals Indian Journal of Medical Microbiology, Iranian Journal of Radiology and Korean Circulation Journal would receive fewer than the average of 6 penalty points, and the journals Nagoya Journal of Medical Science and Hong Kong Journal of Paediatrics would receive less than half of the possible penalty points (14 or less). In any event, our findings confirmed the necessity of stating an ISSN to ensure unambiguous identification of journals.
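For readers who wish to screen titles themselves, one simple way to flag look-alike names is a string-similarity ratio. The sketch below is not the method used in this study; it relies on Python’s standard `difflib` module, and the example titles, the helper names and the 0.75 threshold are all assumptions made for illustration.

```python
import re
from difflib import SequenceMatcher


def normalize(title):
    """Lowercase a title and collapse punctuation and whitespace (ASCII letters only)."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def title_similarity(a, b):
    """Return a similarity ratio between 0.0 and 1.0 for two journal titles."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


# Invented titles, used only to illustrate the comparison.
candidate = "International Journal of Example Medicine"
lookalike = "Journal of Example Medicine"
unrelated = "Annals of Hypothetical Surgery"

THRESHOLD = 0.75  # assumed cut-off, not taken from the study

for other in (lookalike, unrelated):
    score = title_similarity(candidate, other)
    flag = "similar" if score >= THRESHOLD else "distinct"
    print(f"{other}: {score:.2f} ({flag})")
```

A flagged pair is only a prompt for manual checking; as noted above, the ISSN remains the unambiguous identifier of a journal.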

Conclusion

The results of the analysis of 259 OA biomedical journals in the JCR have shown that, with the exception of 4 journals, all others violate at least one criterion determined by COPE, DOAJ, OASPA and WAME, and our results confirmed previous findings. With regard to findings concerning anti-plagiarism policy, journals’ indexing in databases, and citation metrics, it was shown that not even a well-known publishing house is a guarantee that the criteria of best practice are met. The results also show that the JCR content cannot be used as a whitelist; on the contrary, it is indispensable that authors, before submitting their manuscript to the editors, verify whether the journal meets the criteria of best practice in scholarly publishing. Such verification should be assisted by librarians, who are information specialists well versed in the field of electronic information sources and identification of technical data on journals. Librarians can not only provide recommended training for authors [5,6] but should also offer a new service for authors, i.e. verification that journals comply with the rules of publishing. In performing such an analysis, librarians would of course verify only the formal requirements of a journal; the authors themselves should assess this information together with the professional quality of the journal and decide whether that journal is appropriate for their article.

The administrators of WoS/JCR must proceed more strictly not only when including new journals in their databases but also during repeated checks of journals that are already indexed. For example, DOAJ is aware of this fact and has been re-examining indexed journals since 2014. Because of non-compliance with the publishing rules, hundreds of titles have been excluded from this database [46]. The same attitude towards the content of their databases should be adopted by the administrators of WoS/JCR (as well as by the administrators of Scopus and MEDLINE), if they want to maintain their reputation as reliable sources of information on prestigious scientific journals.

The requirement to improve their work also applies to the publishers of the journals if they want to compete with traditional subscription journals. They must offer some added value, which may be complete transparency of information about the journal as a guarantee of a professional and objective approach to manuscripts submitted by authors (especially open peer review, clearly stated APCs and identifiable editorial board members).

Mgr. Jiří Kratochvíl, Ph.D.

University Campus Library, Masaryk University Brno

Received by the editorial office 18 June 2018

Accepted after review 28 August 2018

Sources

- Baruch Y, Ghobadian A, Özbilgin M. Open Access – the Wrong Response to a Complex Question: The Case of the Finch Report. Br J Manag 2013; 24: 147–155. Available on DOI: <http://dx.doi.org/10.1111/1467-8551.12016>.

- European Commission. H2020 Programme: Guidelines to the Rules on Open Access to Scientific Publications and Open Access to Research Data in Horizon 2020 (version 3.2). Brussels: European Commission 2017 [cit. 2017–06–16]. Available on WWW: <http://ec.europa.eu/research/participants/data/ref/h2020/grants_manual/hi/oa_pilot/h2020-hi-oa-pilot-guide_en.pdf>.

- Gargouri Y, Hajjem C, Larivière V et al. Self-selected or mandated, open access increases citation impact for higher quality research. PloS One 2010; 5(10): e13636. Available on DOI: <http://dx.doi.org/10.1371/journal.pone.0013636>.

- Harvey HB, Weinstein DF. Predatory Publishing: An Emerging Threat to the Medical Literature. Acad Med 2017; 92(2): 150–151. Available on DOI: <http://dx.doi.org/10.1097/ACM.0000000000001521>.

- Kahn M. Sharing your scholarship while avoiding the predators: guidelines for medical physicists interested in open access publishing. Med Phys 2014; 41(7): 070401–070401. Available on DOI: <http://dx.doi.org/10.1118/1.4883836>.

- Nelson N, Huffman J. Predatory Journals in Library Databases: How Much Should We Worry? Ser Libr 2015; 69(2): 169–192. Available on DOI: <https://doi.org/10.1080/0361526X.2015.1080782>.

- COPE. Code of conduct and best practice guidelines for journal editors, version 4. United Kingdom: Committee on Publication Ethics 2011. [cit. 2017–05–22]. Available on WWW: <https://publicationethics.org/files/Code_of_conduct_for_journal_editors_Mar11.pdf>.

- DOAJ. Information for publishers. Directory of Open Access Journals. c2017 [cit. 2017–05–22]. Available on WWW: <https://doaj.org/publishers>.

- OASPA. Membership Criteria. Open Access Scholarly Publishers Association. c2017 [cit. 2017–05–22]. Available on WWW: <https://oaspa.org/membership/membership-criteria/>.

- WAME. WAME Professionalism Code of Conduct. World Association of Medical Editors. 2016 [cit. 2017–12–10]. Available on WWW: <http://www.wame.org/wame-professionalism-code-of-conduct>.

- Wicherts JM. Peer Review Quality and Transparency of the Peer-Review Process in Open Access and Subscription Journals. PLoS One 2016; 11(1). [cit. 2017–05–18]. Available on DOI: <http://dx.plos.org/10.1371/journal.pone.0147913>.

- Shamseer L, Moher D, Maduekwe O et al. Potential predatory and legitimate biomedical journals: can you tell the difference? A cross-sectional comparison. BMC Med 2017; 15(1): 28. [cit. 2017–05–18]. Available on DOI: <http://dx.doi.org/10.1186/s12916-017-0785-9>.

- Danevska L, Spiroski M, Donev D et al. How to Recognize and Avoid Potential, Possible, or Probable Predatory Open-Access Publishers, Standalone, and Hijacked Journals. Pril Makedon Akad Na Nauk Umet Oddelenie Za Med Nauki 2016; 37(2–3): 5–13. Available on DOI: <http://dx.doi.org/10.1515/prilozi-2016-0011>.

- Beall J. Predatory journals and the breakdown of research cultures. Inf Dev 2015; 31(5): 473–476. Available on DOI: <https://doi.org/10.1177/0266666915601421>.

- Bagues M, Sylos-Labini M, Zinovyeva N. A walk on the wild side: an investigation into the quantity and quality of ‘predatory’ publications in Italian academia. Scuola Superiore Sant’Anna – Institute of Economics: Pisa 2017. [cit. 2017–05–23]. (LEM Working Paper Series). Available on WWW: <http://www.lem.sssup.it/WPLem/files/2017-01.pdf>.

- Harzing AW, Adler NJ. Disseminating knowledge: from potential to reality – new open-access journals collide with convention. Acad Manag Learn Educ 2016; 15(1): 140–156. Available on DOI: <https://doi.org/10.5465/amle.2013.0373>.

- Yessirkepov M, Nurmashev B, Anartayeva M. A Scopus-Based Analysis of Publication Activity in Kazakhstan from 2010 to 2015: Positive Trends, Concerns, and Possible Solutions. J Korean Med Sci 2015; 30(12): 1915–1919. Available on DOI: <http://dx.doi.org/10.3346/jkms.2015.30.12.1915>.

- Gasparyan AY, Yessirkepov M, Diyanova SN et al. Publishing Ethics and Predatory Practices: A Dilemma for All Stakeholders of Science Communication. J Korean Med Sci 2015; 30(8): 1010–1016. Available on DOI: <http://dx.doi.org/10.3346/jkms.2015.30.8.1010>.

- Ayeni PO, Adetoro N. Growth of predatory open access journals: implication for quality assurance in library and information science research. Libr Hi Tech News 2017; 34(1): 17–22. Available on DOI: <http://dx.doi.org/10.1108/LHTN-10-2016-0046>.

- Somoza-Fernández M, Rodríguez-Gairín JM, Urbano C. Presence of alleged predatory journals in bibliographic databases: Analysis of Beall’s list. El Prof Inf 2016; 25(5): 730–737. Available on WWW: <https://www.academia.edu/29168581/Presence_of_alleged_predatory_journals_in_bibliographic_databases_Analysis_of_Beall_s_list>.

- Macháček V, Srholec M. Predatory journals in Scopus. IDEA CERGE-EI: Praha 2017. 40 p. [cit. 2017–05–23]. Available on WWW: <http://idea-en.cerge-ei.cz/files/IDEA_Study_2_2017_Predatory_journals_in_Scopus/files/downloads/IDEA_Study_2_2017_Predatory_journals_in_Scopus.pdf>.

- Crawford W. Ethics and Access 1: The Sad Case of Jeffrey Beall. Cites Insights 2014; 14(4): 1–14. Available on WWW: <https://citesandinsights.info/civ14i4.pdf>.

- Bloudoff-Indelicato M. Backlash after Frontiers journals added to list of questionable publishers. Nature 2015; 526(7575): 613. Available on DOI: <http://dx.doi.org/10.1038/526613f>.

- Berger M, Cirasella J. Beyond Beall’s List: Better understanding predatory publishers. Coll Res Libr News 2015; 76(3): 132–135.

- Beall J. Don’t Use PubMed as a Journal Whitelist. Scholarly Open Access. 2016 [cit. 2017–05–23]. Available on WWW: <http://web.archive.org/web/20170114052258/https://scholarlyoa.com/2016/10/20/dont-use-pubmed-as-a-journal-whitelist/>.

- Beall J. Best practices for scholarly authors in the age of predatory journals. Ann R Coll Surg Engl 2016; 98(2): 77–79. Available on DOI: <http://dx.doi.org/10.1308/rcsann.2016.0056>.

- COPE, OASPA, DOAJ et al. Principles of Transparency and Best Practice in Scholarly Publishing. United Kingdom: Committee on Publication Ethics 2018 [cit. 2018–03–02]. Available on WWW: <https://publicationethics.org/files/Principles_of_Transparency_and_Best_Practice_in_Scholarly_Publishingv3.pdf>.

- Nguyen VM, Haddaway NR, Gutowsky LFG et al. How long is too long in contemporary peer review? Perspectives from authors publishing in conservation biology journals. PloS One 2015; 10(8): e0132557. Available on DOI: <http://dx.doi.org/10.1371/journal.pone.0132557>.

- Sharman A. Where to publish. Ann R Coll Surg Engl 2015; 97(5): 329–332. Available on DOI: <http://dx.doi.org/10.1308/rcsann.2015.0003>.

- OECD. DAC List of ODA Recipients. OECD: Paris 2016 [cit. 2017–06–07]. Available on WWW: <http://www.oecd.org/dac/stats/daclist.htm>.

- CIEPS. Download ROAD records. ROAD: Directory of Open Access Scholarly Resources. 2017 [cit. 2017–06–16]. Available on WWW: <https://www.issn.org/services/online-services/road-the-directory-of-open-access-scholarly-resources/>.

- DOAJ. Frequently Asked Question: How can I get journal metadata from DOAJ? DOAJ: Directory of Open Access Journals. c2017 [cit. 2017–06–16]. Available on WWW: <https://doaj.org/faq>.

- OASPA. Members. Open Access Scholarly Publishers Association. c2017 [cit. 2017–12–13]. Available on WWW: <https://oaspa.org/membership/members/>.

- WAME. Principles of Transparency and Best Practice in Scholarly Publishing. World Association of Medical Editors. 2015 [cit. 2017–12–10]. Available on WWW: <http://www.wame.org/about/principles-of-transparency-and-best-practice>.

- Mehrpour S, Khajavi Y. How to spot fake open access journals. Learn Publ 2014; 27(4): 269–274. Available on DOI: <https://doi.org/10.1087/20140405>.

- DOAJ. Information for Publishers. DOAJ: Directory of Open Access Journals. c2017 [cit. 2017–06–16]. Available on WWW: <https://doaj.org/publishers>.

- Björk BC, Solomon D. Pricing principles used by scholarly open access publishers. Learn Publ 2012; 25(2): 132–137. Available on DOI: <https://doi.org/10.1087/20120207>.

- Beall J. Criteria for Determining Predatory Open-Access Publishers. 3rd ed. University of Colorado: Denver 2015 [cit. 2018–04–14]. Available on WWW: <https://web.archive.org/web/20170105195017/https://scholarlyoa.files.wordpress.com/2015/01/criteria-2015.pdf>.

- Laine C, Winker MA. Identifying Predatory or Pseudo-Journals. WAME. 2017 [cit. 2017–06–10]. Available on WWW: <http://www.wame.org/about/identifying-predatory-or-pseudo-journals>.

- Carafoli E. Scientific misconduct: the dark side of science. Rendiconti Lincei-Sci Fis E Nat 2015; 26(3): 369–382. Available on DOI: <http://dx.doi.org/10.1007/s12210-015-0415-4>.

- Index Copernicus International. Centrum Badawczo Rozwojowe EN. Index Copernicus. 2017 [cit. 2018–01–23]. Available on WWW: <http://www.indexcopernicus.com/index.php/en/168-uncategorised-3/509-centrum-badawczo-rozwojowe-en>.

- European Commission. Projects. European Commission: Regional Policy: InfoRegio. 2017 [cit. 2017–06–16]. Available on WWW: <http://ec.europa.eu/regional_policy/en/projects>.

- Index Copernicus. ICI Journals Master List. Index Copernicus International. 2017 [cit. 2017–06–16]. Available on WWW: <http://www.indexcopernicus.com/index.php/en/parametryzacja-menu-2/journals-master-list-2>.

- Clarivate Analytics. Journal Search: Master Journal List. Clarivate Analytics. c2017 [cit. 2017–06–16]. Available on WWW: <http://ip-science.thomsonreuters.com/cgi-bin/jrnlst/jloptions.cgi?PC=master>.

- Index Copernicus. Evaluation methodology. Index Copernicus International. 2017 [cit. 2017–06–16]. Available on WWW: <http://www.indexcopernicus.com/index.php/en/parametrisation-1/journals-master-list-2/the-methodology-en>.

- Marchitelli A, Galimberti P, Bollini A et al. Improvement of editorial quality of journals indexed in DOAJ: a data analysis. Ital J Libr Inf Sci 2017; 8(1): 1–21. Available on DOI: <http://dx.doi.org/10.4403/jlis.it-12052>.

- Appendixes available on WWW: <https://is.muni.cz/repo/1527916/>.


Article was published in Internal Medicine, 2019, Issue 5
